A Simple Guide on A/B Testing for Data Science

Overview

A/B testing is one of the most widespread ways to test products and is gaining steam in the data science field. Data scientists are at the forefront of implementing it.

What is A/B testing?

A/B testing is a test on two variants to check which performs better under given conditions. Typically, two consumer groups are shown two distinct versions of the same thing to check whether there is a significant difference in metrics like sessions, click-through rate, and/or conversions.

It’s vital to note that all other variables should be held constant when performing an A/B test.

More formally, A/B testing is a form of statistical hypothesis testing, specifically two-sample hypothesis testing. Statistical hypothesis testing is a technique in which a sample dataset is compared against the population data. Two-sample hypothesis testing is a technique for deciding whether the difference between two samples is statistically significant.

How does A/B Testing Work?

Let us walk through an example to understand the logic and methodology behind A/B testing.

Let’s consider an e-commerce company named ABC that wants to change the format of its newsletter to increase traffic to its website. It picks the original newsletter and marks it A, then makes some changes to the language of A and calls the result B. Both newsletters are otherwise the same in color, headlines, and format.

The main objective here is to check which newsletter brings more traffic to the website, i.e. which has the higher conversion rate. We will use A/B testing and gather data to analyze which newsletter performs better.

1. Make a Hypothesis

In hypothesis testing we have to make two hypotheses: the null hypothesis and the alternative hypothesis. Let’s have a look at both.

a. Null hypothesis or H0:

The null hypothesis is the one that states that the sample observations result purely from chance. From an A/B test viewpoint, the null hypothesis states that there is no difference between the control and variant groups. Here our H0 is “there is no difference in the conversion rate between customers receiving newsletter A and those receiving newsletter B”.

b. Alternative hypothesis or Ha:

The alternative hypothesis challenges the null hypothesis and is basically the hypothesis that the researcher believes to be true. It is what you hope your A/B test will show to be valid. In our example, the Ha is “the conversion rate of customers who receive newsletter B is higher than that of customers who receive newsletter A”.

Now, we need to collect sufficient evidence through our tests to reject the null hypothesis.

2. Create Control Group and Test Group

The next step is to choose the group of customers that will participate in the test. Here we have two groups – The Control group, and the Test (variant) group.

The Control Group is the one that will receive newsletter A and the Test Group is the one that will receive newsletter B.

For this trial, we randomly select 1000 customers – 500 each for our Control group and Test group.

Random sampling is a technique in which each member of a population has an equal chance of being picked. Random sampling is important in hypothesis testing because it guards against sampling bias, and that matters because you need the results of your A/B test to be representative of the entire population rather than of the sample itself.
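As a minimal sketch of this step, the snippet below draws 1000 customers at random and splits them into two groups of 500. The mailing list of 10,000 customer IDs, the function name `assign_groups`, and the seed are assumptions made for illustration only.

```python
import random


def assign_groups(customer_ids, group_size, seed=None):
    """Randomly draw two non-overlapping groups of equal size."""
    rng = random.Random(seed)
    # sample() draws without replacement, so every customer has the
    # same chance of being picked and nobody is picked twice.
    chosen = rng.sample(customer_ids, 2 * group_size)
    control = chosen[:group_size]   # will receive newsletter A
    test = chosen[group_size:]      # will receive newsletter B
    return control, test


# Hypothetical mailing list of 10,000 customer IDs.
customers = list(range(1, 10001))
control_group, test_group = assign_groups(customers, group_size=500, seed=42)
print(len(control_group), len(test_group))
```

Fixing the seed makes the split reproducible, which can help when the assignment needs to be audited later.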

Another important aspect to deal with is the sample size. We need to determine the minimum sample size for our A/B test before conducting it so that we can avoid undercoverage bias, which is the bias that comes from sampling too few observations.
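One common way to estimate the minimum sample size per group is the normal-approximation formula for comparing two proportions. The sketch below is only an illustration: the baseline conversion rate of 10%, the hoped-for rate of 15%, the 80% power, and the function name `min_sample_size` are all assumptions, not figures from the ABC example.

```python
import math
from statistics import NormalDist


def min_sample_size(p_control, p_test, alpha=0.05, power=0.80, two_sided=False):
    """Approximate minimum observations per group for comparing two proportions."""
    z = NormalDist()  # standard normal distribution
    # Critical value for the chosen significance level.
    z_alpha = z.inv_cdf(1 - alpha / 2) if two_sided else z.inv_cdf(1 - alpha)
    # Critical value for the desired power (1 - probability of a Type II error).
    z_beta = z.inv_cdf(power)
    variance_sum = p_control * (1 - p_control) + p_test * (1 - p_test)
    effect = p_test - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance_sum / effect ** 2)


# Assumed rates: 10% for newsletter A, 15% hoped for with newsletter B.
n_per_group = min_sample_size(0.10, 0.15)
print(n_per_group)
```

The smaller the difference you want to detect, the larger the required sample, so it is worth deciding on the minimum effect of interest before the test starts.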

3. Conduct the test, compare the results, and reject or do not reject the null hypothesis

Once you conduct your experiment and gather your data, you need to determine if the difference between your control group and variant group is statistically significant. There are a couple of steps in determining this:

- First, you want to set your alpha, the probability of making a Type I error (rejecting a null hypothesis that is actually true). Typically the alpha is set at 5% or 0.05.

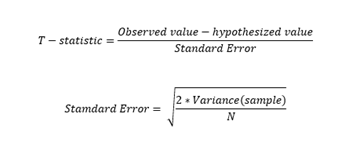

- Next, you need to determine the probability value (p-value) by first calculating the t-statistic using the formula above.

- Lastly, compare the p-value to the alpha. If the p-value is greater than the alpha, do not reject the null hypothesis; if it is less than or equal to the alpha, reject it.
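The three steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not necessarily the exact formula shown in the image: it uses the unequal-variance (Welch) form of the two-sample t-statistic, approximates the one-sided p-value with the standard normal distribution (reasonable only because each group has hundreds of observations), and uses made-up conversion counts of 50 out of 500 for newsletter A and 75 out of 500 for newsletter B.

```python
import math
from statistics import NormalDist, mean, variance


def t_statistic(control, test):
    """Two-sample (Welch) t-statistic for the difference in means, test minus control."""
    standard_error = math.sqrt(
        variance(control) / len(control) + variance(test) / len(test)
    )
    return (mean(test) - mean(control)) / standard_error


def one_sided_p_value(t):
    # Assumption: with large samples the t distribution is very close to
    # the standard normal, so the normal CDF is used as an approximation.
    # One-sided because Ha says B converts better than A.
    return 1 - NormalDist().cdf(t)


# Hypothetical outcomes: 1 = customer converted, 0 = did not convert.
control = [1] * 50 + [0] * 450   # newsletter A
test = [1] * 75 + [0] * 425      # newsletter B

alpha = 0.05                     # step 1: set the significance level
t = t_statistic(control, test)   # step 2: t-statistic and p-value
p_value = one_sided_p_value(t)
decision = "reject H0" if p_value <= alpha else "do not reject H0"  # step 3
print(f"t = {t:.3f}, p = {p_value:.4f}, decision: {decision}")
```

For small samples the normal approximation is not appropriate, and a statistics library that implements the t distribution would be the safer choice.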

When Should We Use A/B Testing?

A/B testing works best when testing incremental changes, such as UX changes, new features, ranking, and page load times. Here you can compare pre- and post-modification results to decide whether the changes are working as desired.

A/B testing doesn’t work well when testing major changes, like new products, new branding, or completely new user experiences. In these cases, there may be effects that drive higher-than-normal engagement, or emotional responses that cause users to behave differently.

A/B testing is at least a 100-year-old statistical methodology, but it took its present form in the 1990s. It has become more prominent with the online environment and the availability of big data, which make it easier for organizations to run tests and use the results to improve user experience and performance.

There are numerous tools available for conducting A/B tests, but as a data scientist you must understand the factors at work behind them. Likewise, you must know the statistics well enough to validate the test and demonstrate its statistical significance.