Deep Dive into Bidirectional LSTM

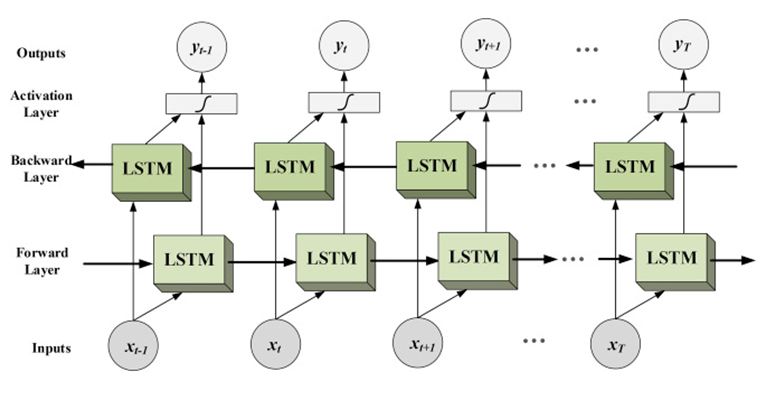

Bidirectional LSTMs are an extension of traditional LSTMs that can improve model performance on sequence classification problems. Where all time steps of the input sequence are available, a Bi-LSTM trains two LSTMs instead of one on the input sequence: the first on the input sequence as-is and the second on a reversed copy of it. This gives the network additional context and can result in faster and fuller learning on the problem.

Bidirectional LSTMs

The idea behind Bidirectional Recurrent Neural Networks (RNNs) is straightforward. You replicate the first recurrent layer in the network, provide the input sequence as-is to the first layer, and provide a reversed copy of the input sequence to the replicated layer. This overcomes a limitation of a traditional RNN, which at each time step has only seen the inputs that came before it. A bidirectional recurrent neural network (BRNN) can be trained using all available input information from both the past and the future of a particular time step. It does this by splitting the state neurons of a regular RNN into a part responsible for the forward states (positive time direction) and a part responsible for the backward states (negative time direction).

In speech recognition, the context of the whole utterance is used to interpret what is being said, rather than a strictly left-to-right interpretation, so the input sequence is fed in both directions. To be precise, the time steps of the input sequence are still processed one at a time, but the network steps through the sequence in both directions at the same time.
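To make the two directions concrete, here is a minimal NumPy sketch. It substitutes a plain tanh RNN cell for the LSTM cell to keep it short (an assumption made for illustration, not how Keras implements the layer), and the helper names `rnn_pass`, `bidirectional_rnn` and `init_params`, the sizes and the random initialization are all hypothetical. Row t of the result holds the forward state after reading the inputs up to t next to the backward state after reading the inputs from the end back to t.

```python
import numpy as np

def rnn_pass(x, W_x, W_h, b):
    """Run a simple tanh RNN over x (shape T x D) and return every hidden state (T x H)."""
    T = x.shape[0]
    H = W_h.shape[0]
    h = np.zeros(H)
    states = np.zeros((T, H))
    for t in range(T):
        h = np.tanh(x[t] @ W_x + h @ W_h + b)
        states[t] = h
    return states

def bidirectional_rnn(x, fwd_params, bwd_params):
    """Concatenate forward states with backward states re-aligned to the original time order."""
    forward = rnn_pass(x, *fwd_params)
    # The backward copy reads the reversed sequence...
    backward_reversed = rnn_pass(x[::-1], *bwd_params)
    # ...so its outputs are flipped back so that row t describes time step t.
    backward = backward_reversed[::-1]
    return np.concatenate([forward, backward], axis=-1)

def init_params(rng, D, H):
    # Hypothetical small random weights; each direction gets its own set.
    return (rng.normal(scale=0.5, size=(D, H)),
            rng.normal(scale=0.5, size=(H, H)),
            np.zeros(H))

rng = np.random.default_rng(0)
T, D, H = 10, 1, 4
x = rng.random((T, D))
fwd = init_params(rng, D, H)
bwd = init_params(rng, D, H)

out = bidirectional_rnn(x, fwd, bwd)
print(out.shape)  # (10, 8)
```

Note that the backward half of row 0 summarizes the whole sequence read from the end, which is exactly the "future" context a one-directional RNN cannot see at the first time step.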

Bidirectional LSTMs in Keras

The Bidirectional layer wrapper provides the implementation of Bidirectional LSTMs in Keras.

It takes a recurrent layer (here, the first LSTM layer) as an argument. You can also specify the merge mode (the merge_mode argument), which describes how the forward and backward outputs are combined before being passed on to the next layer. The options are:

– 'sum': The results are added together.

– 'mul': The results are multiplied together.

– 'concat' (the default): The results are concatenated together, providing double the number of outputs to the next layer.

– 'ave': The average of the results is taken.
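The effect of each merge mode on the output shape can be checked with plain NumPy. This sketch uses hypothetical random arrays standing in for the per-time-step outputs of the forward and backward LSTMs (10 time steps of 20 units, matching the LSTM(20) layer used later); it reproduces the arithmetic of each mode, not the Keras layer itself.

```python
import numpy as np

# Hypothetical stand-ins for the forward and backward LSTM outputs:
# 10 time steps, 20 units each.
rng = np.random.default_rng(1)
forward = rng.normal(size=(10, 20))
backward = rng.normal(size=(10, 20))

merged = {
    "sum": forward + backward,                              # element-wise addition
    "mul": forward * backward,                              # element-wise product
    "concat": np.concatenate([forward, backward], axis=-1), # features placed side by side
    "ave": (forward + backward) / 2.0,                      # element-wise mean
}

for mode, values in merged.items():
    print(mode, values.shape)
# sum (10, 20)
# mul (10, 20)
# concat (10, 40)
# ave (10, 20)
```

Only 'concat' changes the feature dimension, which is why it doubles the number of inputs the next layer sees.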

Sequence Classification Problem

We will work with a simple sequence classification problem to explore Bidirectional LSTMs. The problem is defined as a sequence of random values between 0 and 1. This sequence is taken as the input for the problem, with one number per time step.

Each input is associated with an output of 0 or 1. The output value is 0 for every time step until the cumulative sum of the input sequence reaches a threshold of one quarter of the sequence length; from then on, the output value is 1.

For example:

We will take a sequence of 10 input time steps:

# create an input sequence of random numbers in the range [0, 1)
X = array([random() for _ in range(10)])

[ 0.22228819 0.26882207 0.069623 0.91477783 0.02095862 0.71322527
  0.90159654 0.65000306 0.88845226 0.4037031 ]

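The corresponding classification output (y) would be:

0 0 0 0 0 0 1 1 1 1

The class changes once the running total reaches a limit of one quarter of the sequence length:

# calculate the threshold value at which the class changes
limit = 10/4.0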

The cumulative sum of the input sequence can be calculated with NumPy's cumsum() function, and each running total is then compared with the limit:

# compute the outcome (class) for each item in the cumulative sequence
outcome = [0 if x < limit else 1 for x in cumsum(X)]
y = array(outcome)
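Applying these steps to the ten values printed above is a useful sanity check. The running total first reaches the limit of 10/4 = 2.5 at the seventh value (about 3.11), so the first six outputs are 0 and the last four are 1. This sketch hard-codes the example values instead of drawing new random numbers so the result is deterministic.

```python
from numpy import array, cumsum

# The 10 input values from the example sequence above.
X = array([0.22228819, 0.26882207, 0.069623, 0.91477783, 0.02095862,
           0.71322527, 0.90159654, 0.65000306, 0.88845226, 0.4037031])
limit = len(X) / 4.0  # 2.5 for 10 time steps

running = cumsum(X)   # running total after each time step
y = array([0 if x < limit else 1 for x in running])

print(running.round(2))
print(y)  # [0 0 0 0 0 0 1 1 1 1]
```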

The function below takes the length of the sequence as input and returns the X and y components of a new problem instance.

from random import random
from numpy import array
from numpy import cumsum

# create a sequence classification instance
def get_sequence(n_timesteps):
    # create a sequence of random numbers in the range [0, 1)
    X = array([random() for _ in range(n_timesteps)])
    # calculate the threshold value at which the class changes
    limit = n_timesteps/4.0
    # determine the outcome (class) for each item in the cumulative sequence
    outcome = [0 if x < limit else 1 for x in cumsum(X)]
    y = array(outcome)
    return X, y

X, y = get_sequence(10)
print(X)
print(y)

Running the example prints a random input sequence and its class labels, for example:

[ 0.22228819 0.26882207 0.069623 0.91477783 0.02095862 0.71322527
  0.90159654 0.65000306 0.88845226 0.4037031 ]
[0 0 0 0 0 0 1 1 1 1]
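Because get_sequence() relies on Python's global random state, every run produces different data. If you want repeatable experiments, the same generator can be written with NumPy's seeded Generator API. The helper name `get_sequence_rng` is hypothetical and not part of the original code; it uses `>=` because that is the exact complement of the `x < limit` test above.

```python
import numpy as np

def get_sequence_rng(n_timesteps, rng):
    """Hypothetical vectorized variant of get_sequence() driven by a seeded Generator."""
    X = rng.random(n_timesteps)              # uniform values in [0, 1)
    limit = n_timesteps / 4.0                # same threshold as get_sequence()
    y = (np.cumsum(X) >= limit).astype(int)  # 0 while the running sum is below the limit, then 1
    return X, y

rng = np.random.default_rng(42)
X, y = get_sequence_rng(10, rng)
print(X)
print(y)
```

Re-creating the Generator with the same seed yields the same sequence, which makes it easier to compare model variants on identical data.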

Bidirectional LSTM for Sequence Classification

We can implement this by wrapping the LSTM hidden layer with a Bidirectional layer, as follows:

model.add(Bidirectional(LSTM(20, return_sequences=True), input_shape=(n_timesteps, 1)))

This creates two copies of the LSTM layer: one fit on the input sequence as-is and one on a reversed copy of the input sequence. By default, the outputs of the two LSTMs are concatenated. This means that instead of the TimeDistributed layer receiving 10 time steps of 20 outputs, it now receives 10 time steps of 40 (20 units + 20 units) outputs.
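The cost of the second copy can be worked out by hand. Assuming the standard Keras LSTM layout (four gates, each with an input kernel, a recurrent kernel and a bias, with use_bias left at its default of True), one LSTM(20) on a single input feature has 4 × (20·1 + 20·20 + 20) = 1760 weights. The backward copy has its own weights, so the Bidirectional layer has twice that, and the TimeDistributed Dense(1) head shares one set of 40 + 1 weights across all time steps. The helper names below are hypothetical; you can compare the totals against model.summary().

```python
def lstm_params(units, input_dim):
    # Four gates, each with an input kernel, a recurrent kernel and a bias vector.
    return 4 * (units * input_dim + units * units + units)

def dense_params(units, input_dim):
    # Weight matrix plus one bias per unit.
    return units * input_dim + units

forward = lstm_params(20, 1)        # one direction
bidirectional = 2 * forward         # the backward copy has its own weights
head = dense_params(1, 2 * 20)      # Dense(1) sees 40 concatenated features per time step
print(forward, bidirectional, head, bidirectional + head)  # 1760 3520 41 3561
```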

from random import random
from numpy import array
from numpy import cumsum
from keras.models import Sequential
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import TimeDistributed
from keras.layers import Bidirectional

# create a sequence classification instance
def get_sequence(n_timesteps):
    # create a sequence of random numbers in the range [0, 1)
    X = array([random() for _ in range(n_timesteps)])
    # calculate the cut-off value at which the class changes
    limit = n_timesteps/4.0
    # compute the class outcome for every item in the cumulative sequence
    outcome = [0 if x < limit else 1 for x in cumsum(X)]
    y = array(outcome)
    # reshape input and output data to [samples, timesteps, features] for the LSTM
    X = X.reshape(1, n_timesteps, 1)
    y = y.reshape(1, n_timesteps, 1)
    return X, y

# define problem properties
n_timesteps = 10

# define the Bidirectional LSTM
model = Sequential()
model.add(Bidirectional(LSTM(20, return_sequences=True), input_shape=(n_timesteps, 1)))
model.add(TimeDistributed(Dense(1, activation='sigmoid')))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc'])

# train the LSTM, generating a new random sequence for each epoch
for epoch in range(1000):
    X, y = get_sequence(n_timesteps)
    model.fit(X, y, epochs=1, batch_size=1, verbose=2)

# evaluate the LSTM on a new sequence
X, y = get_sequence(n_timesteps)
# predict_classes() has been removed from recent Keras versions,
# so threshold the sigmoid probabilities at 0.5 instead
yhat = (model.predict(X, verbose=0) > 0.5).astype('int32')
for i in range(n_timesteps):
    print('Expected:', y[0, i], 'Predicted', yhat[0, i])

Running the example prints the loss and accuracy after each of the 1,000 single-epoch fits (the last few are shown below), followed by the expected and predicted class for each time step of a new test sequence. Because the data and initial weights are random, your numbers will differ.

Epoch 1/1
0s - loss: 0.0967 - acc: 0.9000
Epoch 1/1
0s - loss: 0.0865 - acc: 1.0000
Epoch 1/1
0s - loss: 0.0905 - acc: 0.9000
Epoch 1/1
0s - loss: 0.2460 - acc: 0.9000
Epoch 1/1
0s - loss: 0.1458 - acc: 0.9000

Expected: [0] Predicted [0]
Expected: [0] Predicted [0]
Expected: [0] Predicted [0]
Expected: [0] Predicted [0]
Expected: [0] Predicted [0]
Expected: [1] Predicted [1]
Expected: [1] Predicted [1]
Expected: [1] Predicted [1]
Expected: [1] Predicted [1]
Expected: [1] Predicted [1]
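The training loop above fits a single sequence per call to fit(). A common alternative is to generate many sequences up front and stack them into one array of shape [samples, timesteps, features]. The helpers `sample_sequence` and `get_batch` below are hypothetical, and the batch size of 32 is an arbitrary assumption; the sketch only builds the data and does not touch Keras.

```python
import numpy as np
from random import random

def sample_sequence(n_timesteps):
    # Same problem as in this article: the label flips to 1 once the running sum reaches n/4.
    X = np.array([random() for _ in range(n_timesteps)])
    limit = n_timesteps / 4.0
    y = np.array([0 if x < limit else 1 for x in np.cumsum(X)])
    return X, y

def get_batch(n_sequences, n_timesteps):
    """Stack several problem instances into arrays shaped [samples, timesteps, 1]."""
    pairs = [sample_sequence(n_timesteps) for _ in range(n_sequences)]
    X = np.stack([x for x, _ in pairs]).reshape(n_sequences, n_timesteps, 1)
    y = np.stack([t for _, t in pairs]).reshape(n_sequences, n_timesteps, 1)
    return X, y

X, y = get_batch(32, 10)
print(X.shape, y.shape)  # (32, 10, 1) (32, 10, 1)
```

With arrays of this shape, the loop could be replaced by a call such as model.fit(X, y, epochs=50, batch_size=32); the epoch count there is an arbitrary choice, and whether this trains faster on this problem is not something measured here.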

Summary:

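This is how we develop Bidirectional LSTMs for sequence classification in Python with Keras. Because a Bidirectional LSTM reads the input in both directions, each output can draw on context from both before and after the current time step, which can help the LSTM learn the problem faster.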