Top Most Machine Learning Algorithms

Machine Learning algorithms are very powerful techniques of a complex Machine Learning models. These algorithms are very core foundation when it’s come to training the model.

In this article we are going to learn most used machine Learning algorithms and their applications.Before getting deeper lets have a look on brief introduction. Basically the machine Learning algorithms are classified into 3 categories they are supervised , unsupervised and Reinforcement Learning.

In supervised Learning the machine is taught by an example here the dataset having the desired inputs and output variables by using these set of variables we using a function to map the inputs to outputs.It further classified into Regression and classification like Random forest , KNN , Logistic and SVM.

Coming to unsupervised learning we don’t have the output variable only we are having input variable. And these are used unlabeled training data to underlying structure of the data. K-means and apriori most useful algorithms.

Now, the Reinforcement Learning is one type of machine learning algorithm which helps to decide the next best action based on present state.These algorithms are mainly used in robotics and video games.

Linear Regression:

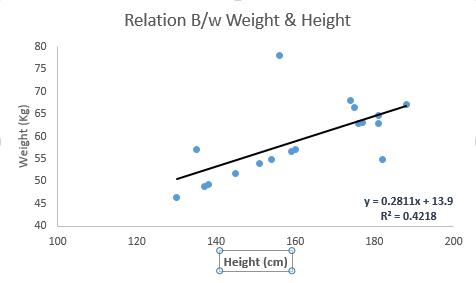

Whenever you want to do the predictive modelling the people will pick this one , in this the independent variable is continuous or discrete and the dependent variable is continuous.

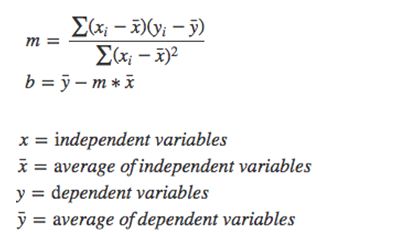

The Linear regression establish the relationship between dependent and one or more independent variables using a regression line.it can be represented as y=mx+c here m is slope and c is the error term.

Here we are having only one independent variable so it is called Linear regression if you have more then one independent variables it is called multiple linear regressions. But the multiple linear regression suffers from the multicolinearity , heteroskedasticity and auto correlation.

The most common method is used to fit the best-fit line by using Least-Square-method by minimizing the error.

Logistic Regression:

Logistic Regression is a type of machine learning algorithm and it is mainly used for binary classification problems to find the probability of an event occurring this the output lies in the range of 0 and 1.for this we are using the sigmoid function.

It is very easy to understand and the sigmoid function is in the form of

F(x) = 1/1+exp(-x)

And the range is in between the 0 and 1. It takes a real values as inputs and give the output values in 0 and 1. And it is a monotonic and continuously differential and has a fixed output range. The main disadvantage of the sigmoid function is vanishing gradients and its not a zero centered and the gradient updates are far in different directions. It makes the optimization hard.

Once you can observe the below graph, it is a S-shaped curve that gets closer to 1 as the value of input variable increases above 0 and gets closer to 0 as the input variable decreases below 0. If it is 0.5 when the input variable is 0.

Then we can fix a threshold and the output is more then the threshold value we can classify the outcome is possitive or 1 and its less then the threshold then we can classify it’s a negitive or 0.

If you want more description about the Activation functions , go through this link.

https://www.i2tutorials.com/technology/activation-functions-in-deep-learning/.

Naive Bayes:

The Naïve Bayes algorithm is mainly based on Bayes theorem and the assumption is there is no dependency between the predictors which means each and every input variable is independent .This assumption is very effective on large range of complex problems.

It is used to find the conditional probability , which means it’s a probability of an event occur it has some relationship to one or more events.it is mainly dealing with the probability distributions of the variables to predict the probability of target variable belonging to particular value.

In this we can find out the two types of probabilities are 1. probability of each class and 2.conditonal probability of each class given each input values.

The Bayes theorem stated as

where:

1. P(c|x) = posterior probability. The probability of hypothesis h being true, given the data d, where P(c|x)= P(x1| c) P(x2| c)….P(xn| c) P(d)

2. P(x|c) = Likelihood. The probability of data d given that the hypothesis h was true.

3. P(c) = Class prior probability. The probability of hypothesis h is true

4. P(x) = Predictor prior probability. The probability of the data (irrespective of the hypothesis

After calculating the posterior probability we can find the probabilities of each hypothesis then you can select the highest probability hypothesis.

These are very easy to build and work on large datasets and works on very complex problems.this is mainly used in fac_recognition , sentiment analysis etc..

Decision Tree:

Decision tree is a graphical representation of possible solutions to make a decisions on some conditions. It’s a tree-like flow-chart structure to represent decisions and estimate the every possible outcome.in this we are mainly focussing on two entities they are nodes and leaves. The nodes are represent the different decisions and the leaves represent the final outcome and thers is no chance to split.and the decesion tree is used for both regression and classification tasks. For ex,[from wikipedia]

The decision tree is a supervised learning algorithm and mostly used for classification problems. And having two types of decision trees are classification trees and regression trees. In classification trees the nodes represents the decisions and leaves represents the discrete values or labels.these are mainly used in credit risk scoring and financial ang banking services.

K-Nearest Neighbors:

The KNN is a very simple , lazziest , non-parametric and most effective algorithm because there is no explicit training phase for the before classification.

The advantage behind this algorithm is it is use a database in which the data points are separated into classes and to predict the which class it is. In this the total dataset will be used for training set or used for training and testing and it requires a lot of memory to store all the data and it is not suited for large datasets.

In the above diagram the knn dictates the new data point belonging to the red group(Category 1). Simply we can say that the K-nearest-neighbors algorithm estimates the new data point to be a member of one group or another group based on which group data points are nearer to that new data point.

The applications of KNN is whenever you are looking for similar items it is mostly used that’s why it is called as KNN’s search.

Support Vector Machines:

Support Vector Machine is one type of supervised Machine Learning algorithm and it is widely used in classification problems especially multi-class classification.

The main objective of the support vector machines is to find the hyperplane in N-dimensional space that distinctly classifies the datapoints.

In this support vector machine algorithm we plot each data item as a datapoint in n-dimensional space with the value of every feature being the value of a particular coordinates. that’s why we can easily done the classification by fing the hyperplane. Once observe the below snapshot.

You can visualize the this is a line in two dimensions and let’s assume the input points are completely separated by this line. The svm is mainly find the coefficients which leads the best separation of classes by the hyperplane.

The main advantage of this algorithm is it is one of the more accurate logarithm when we are having the lesser ans cleaned dataset and also it is more efficient.

The Support Vector Machine algorithms are mainly used in face detection , text categorization , hyper text categorization, classification of images and bioinformatics.

Apriori:

The apriori algorithm is a unsupervised machine learning learning algorithm which is used to sort the information into categories. This sorted information is very much helpful in data management process.This is the most popular algorithm used in market-basket analysis where the customer frequently buy the combination of products that co occur in database. from given dataset the apriori algorithm will generate the associated rules and works with bottom-up approach where frequently used subsets are extended at a time if not the algorithm will terminate.

The apriori is mainly works on the two principles one is an item set occurs frequently than all the subset items are also occurs frequently and the another one is if the item set is occur infrequently then the super set items are also occurs infrequently.

In association rules if a person buy an item a and he is also buy an item b as a->b.

For example:

A person buy a bread , milk and he also buys a jam then this could be written in the form of association rule is {bread , milk} -> {jam} . These rules are generated by crossing a

threshold of support and confidence. Here the support measures the no.of candidate item set consider during frequent item set generation.

The apriori algorithm is mainly used in market basket analysis , customer analytics , product clustering.

Boosting with AdaBoost :

Boosting with Adaboost algorithm are the boosting algorithms and these mainly used when we are having the large amount of dataset which is used to done the predictions with high accuracy. It is a most flexible and powerful algorithm and interrupted easily with some tricks.

It is a ensemble technique which Is used tp create strong classifier based on no.of weak classifiers.

By using this algorithm we build a model from training data after that create a second model to correct the errors of the first model. we create the models up to the training ser predicted perfectly. In simply we can say that it is used to combine the multiple weak learners or average predictors to build a strong predictor. Boosting is the very first successful algorithm for the binary classification. These are used where we have the plenty of data to make the predictions and it is also used for reduce the bias and variance.

Random Forest:

Random forest is a supervised machine learning algorithm and it is very easy , powerful and more flexible.it is also a ensemble algorithm called Bagging or bootStrap Aggregation. The random forest can also be used for both regression and classification.

As the name shows the algorithm create a forest and make it as somehow random.the basic concept behind the bagging is combination of learning models which increase the overall result.

Here we are going to create no.of trees randomly with replacement. This sample will be the growing of tree. For ex , if you classifying an object based on features each tree gives the classification and go through the majority votes to choose the particular class. In this random forest there is no pruning and the over fitting is never come when we are doing the classification problems.

The random forest algorithm is mostly used in many applications such as Banking , Medicines , E-commerce and Stock market.