Explain the Adam optimization function.

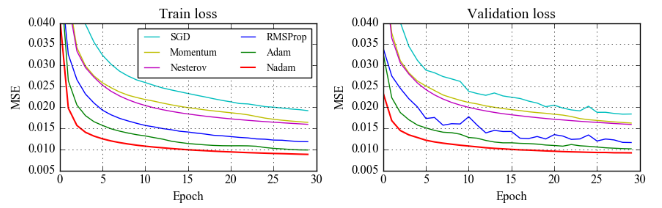

Ans: Adam can be viewed as a combination of RMSprop and Stochastic Gradient Descent (SGD) with momentum. Like RMSprop, it uses squared gradients to scale the learning rate; like SGD with momentum, it uses a moving average of the gradient instead of the gradient itself. Adam is an adaptive learning rate method, which means it computes individual learning rates for different parameters.

Adam uses estimates of the first and second moments of the gradient to adapt the learning rate for each weight of the neural network. The n-th moment of a random variable is defined as the expected value of that variable raised to the power n.

The first moment is the mean, and the second moment is the uncentered variance. To estimate these moments, Adam uses exponential moving averages computed on the gradient of the current mini-batch. Here m and v denote the moving averages of the gradient and of the squared gradient, g is the gradient on the current mini-batch, and the betas (β1 and β2) are hyper-parameters introduced by the algorithm that control the decay rates of the two averages.
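The update equations this paragraph describes are not reproduced in the text. Assuming the standard formulation from the original Adam paper (Kingma and Ba), with the subscript $t$ denoting the iteration and $g_t^2$ the elementwise square of the gradient, they can be written as:

$$
m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t, \qquad
v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2
$$

The defaults suggested in that paper are $\beta_1 = 0.9$ and $\beta_2 = 0.999$, so $m_t$ tracks the recent gradient direction while $v_t$ averages squared gradients over a much longer window.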

The vectors of moving averages are initialized with zeros at the first iteration. For an estimator to be unbiased, its expected value should equal the quantity we are trying to estimate; in our case that quantity is itself an expected value (the first or second moment of the gradient). If this property held for m and v, they would be unbiased estimators.

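This property does not hold for our moving averages: because we initialize them with zeros, the estimators are biased towards zero, especially during the first iterations. Adam therefore applies a bias correction, dividing each average by one minus its beta raised to the power of the current iteration number. The actual step taken by Adam in each iteration is approximately bounded by the step size hyper-parameter, which gives the otherwise unintuitive learning rate hyper-parameter an intuitive meaning.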
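The bias towards zero is easiest to see with a constant gradient. The sketch below is an illustration only: the function name `ema_with_bias_correction` and the sample values are made up for this example, and the correction `m / (1 - beta**t)` is the standard one from the Adam paper rather than something stated in the text above.

```python
def ema_with_bias_correction(grads, beta=0.9):
    """Return (raw, corrected) moving averages after consuming all grads.

    Hypothetical helper for illustration; works on scalar gradients.
    """
    if not grads:
        raise ValueError("grads must contain at least one value")
    m = 0.0  # moving average starts at zero, which causes the bias
    for t, g in enumerate(grads, start=1):
        m = beta * m + (1.0 - beta) * g
    # Divide by (1 - beta^t) to undo the shrinkage caused by the zero start.
    m_hat = m / (1.0 - beta ** t)
    return m, m_hat


# Five identical gradients: the true mean is 2.0.
raw, corrected = ema_with_bias_correction([2.0] * 5)
print("raw:", raw, "corrected:", corrected)
```

With a constant gradient the raw average equals the gradient multiplied by `1 - beta**t`, so it stays well below the true mean in early iterations, while the corrected value recovers it up to floating-point error.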

The step size of the Adam update rule is invariant to rescaling of the gradient, which helps a lot when passing through regions with tiny gradients (such as saddle points or ravines). SGD struggles to move through these regions quickly.
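A quick way to check this invariance is to feed the same gradient sequence at two very different scales and compare the resulting steps. This is a minimal sketch for scalar gradients; `adam_steps`, the sample gradients, and the scale factors are assumptions chosen for illustration, and the hyper-parameter defaults are the ones commonly quoted from the Adam paper.

```python
import math


def adam_steps(grads, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the step Adam would take at each iteration for scalar grads."""
    m = v = 0.0
    steps = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1 - beta1 ** t)           # bias-corrected mean
        v_hat = v / (1 - beta2 ** t)           # bias-corrected uncentered variance
        steps.append(lr * m_hat / (math.sqrt(v_hat) + eps))
    return steps


grads = [0.5, -0.2, 0.8, 0.1]
small = adam_steps([g * 1e-3 for g in grads])  # tiny gradients
large = adam_steps([g * 1e+3 for g in grads])  # huge gradients

for s, l in zip(small, large):
    print(f"{s:.6f}  {l:.6f}")
```

The two columns should agree closely, differing only through the small `eps` term, and every step in this example stays within `lr` in magnitude. Plain SGD, by contrast, would take steps a million times smaller on the first sequence than on the second.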

Adam was designed to combine the advantages of Adagrad, which works well with sparse gradients, and RMSprop, which works well in on-line settings. Having both enables us to use Adam for a broader range of tasks. Adam can also be viewed as a combination of RMSprop and SGD with momentum.
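Putting the pieces together, a complete Adam loop fits in a few lines. The following is a rough sketch for a single scalar parameter, not a reference implementation: `adam_minimize`, the quadratic test function, and the choice of `lr=0.1` and 500 steps are all assumptions made for this example.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a 1-D function with Adam, given its gradient grad_fn."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g        # momentum-like average of g
        v = beta2 * v + (1 - beta2) * g * g    # RMSprop-like average of g^2
        m_hat = m / (1 - beta1 ** t)           # bias correction
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_min)
```

The `m` line is the momentum part and the `v` line is the RMSprop part, which is the sense in which Adam combines the two. In practice each parameter of the network keeps its own `m` and `v`, so the same update is applied elementwise to whole weight tensors.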