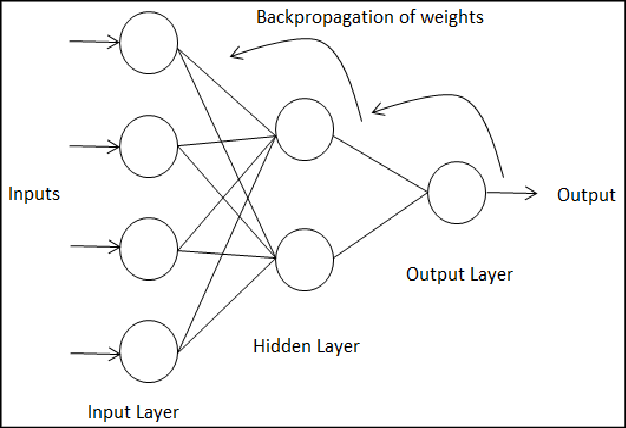

Explain Back Propagation in Neural Networks

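Ans: Back Propagation is the algorithm most commonly used to train a neural network. It is not a type of network. The error measured at the output is propagated backward through the network, layer by layer, to work out how much each weight contributed to that error. Each weight is then adjusted to reduce the error at the output. This forward-and-backward cycle is repeated over many iterations.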
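Take the simplest case to see how the error at the output is traced back to a single weight. Consider one neuron with inputs $x_i$, weights $w_i$, bias $b$, pre-activation $z = \sum_i w_i x_i + b$, sigmoid output $y = \sigma(z)$, target $t$, and squared error $E = \tfrac{1}{2}(y - t)^2$. These symbols are illustrative assumptions, not taken from the original answer. Using $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$, the chain rule gives:

$$
\frac{\partial E}{\partial w_i}
= \frac{\partial E}{\partial y}\cdot\frac{\partial y}{\partial z}\cdot\frac{\partial z}{\partial w_i}
= (y - t)\,y\,(1 - y)\,x_i
$$

In a network with several layers, the same chain rule is applied repeatedly, starting at the output and moving back toward the input. That backward pass is where the name comes from.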

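Back Propagation is used together with a technique called Gradient Descent to find the minimum of the error function in weight space. Back propagation computes the gradient of the error with respect to each weight, and gradient descent uses that gradient to move each weight in the direction that lowers the error. The set of weights with the minimum error is taken as the solution to the learning problem. In practice this is usually a local minimum rather than a guaranteed global one.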
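Gradient descent on its own can be sketched in one dimension. This minimal example assumes a made-up error function $E(w) = (w - 3)^2$, whose minimum is at $w = 3$. The learning rate of 0.1 and the 100 steps are arbitrary illustrative values. In a real network, back propagation would supply the gradient instead of the hand-written `error_grad` used here.

```python
def error(w):
    """Hypothetical 1-D error function with its minimum at w = 3."""
    return (w - 3.0) ** 2


def error_grad(w):
    """Derivative dE/dw of the error function above."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, moving w toward a minimum of E."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * error_grad(w)
    return w


w_final = gradient_descent(w0=0.0)
print(round(w_final, 4))  # prints 3.0
```

Each step here shrinks the distance to the minimum by a constant factor, $1 - 2 \times 0.1 = 0.8$. A learning rate that is too large (above 1.0 for this function) would instead make the steps overshoot and diverge.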

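The steps involved in Back Propagation are:

- Forward Propagation: pass the input through the network to compute the output and the error.

- Back Propagation: propagate the error backward to compute the gradient of the error with respect to each weight.

- Weight update: calculate the updated value of each weight from its gradient.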

This process repeats until the error is minimized, or at least stops decreasing.
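The three steps and the repeat loop can be combined into a small from-scratch training loop. This is a minimal sketch built on several assumptions, none of which come from the original answer:

- The network is a hypothetical one with 2 inputs, 2 sigmoid hidden units, and 1 sigmoid output.
- The error is the squared error $E = \tfrac{1}{2}(y - t)^2$.
- Weights are updated after every sample.
- The logical OR function serves as toy training data.

The class `TinyNet`, its method names, the learning rate of 0.5, and the fixed number of epochs (used in place of a "stop when the error is minimized" test) are illustrative choices.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """Hypothetical 2-input -> 2 hidden sigmoid units -> 1 sigmoid output network."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # w1[j][i]: weight from input i to hidden unit j; b1[j]: hidden bias
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
        self.b1 = [0.0, 0.0]
        # w2[j]: weight from hidden unit j to the output; b2: output bias
        self.w2 = [rng.uniform(-1, 1) for _ in range(2)]
        self.b2 = 0.0

    def forward(self, x):
        """Step 1, forward propagation: returns hidden activations h and output y."""
        h = [sigmoid(sum(w * xi for w, xi in zip(self.w1[j], x)) + self.b1[j])
             for j in range(2)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def backward(self, x, h, y, target):
        """Step 2, back propagation of E = 0.5 * (y - target)**2.

        Returns dE/d(parameter) for every weight and bias."""
        delta_out = (y - target) * y * (1.0 - y)  # dE/dz at the output unit
        grad_w2 = [delta_out * hj for hj in h]
        grad_b2 = delta_out
        # Chain rule: send the output error back through w2 and each hidden sigmoid
        delta_h = [delta_out * self.w2[j] * h[j] * (1.0 - h[j]) for j in range(2)]
        grad_w1 = [[delta_h[j] * xi for xi in x] for j in range(2)]
        grad_b1 = delta_h
        return grad_w1, grad_b1, grad_w2, grad_b2

    def update(self, grads, lr):
        """Step 3, weight update: one gradient-descent step on every parameter."""
        grad_w1, grad_b1, grad_w2, grad_b2 = grads
        for j in range(2):
            for i in range(2):
                self.w1[j][i] -= lr * grad_w1[j][i]
            self.b1[j] -= lr * grad_b1[j]
            self.w2[j] -= lr * grad_w2[j]
        self.b2 -= lr * grad_b2


def total_error(net, data):
    """Sum of 0.5 * (y - t)**2 over the data set."""
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data)


def train(net, data, lr=0.5, epochs=5000):
    """Repeat forward pass, backward pass, and update for a fixed number of epochs."""
    for _ in range(epochs):
        for x, t in data:
            h, y = net.forward(x)
            net.update(net.backward(x, h, y, t), lr)
    return net


# Toy data set: the logical OR function
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
net = TinyNet(seed=0)
initial_error = total_error(net, data)
train(net, data)
trained_error = total_error(net, data)
print(initial_error, trained_error)
```

Comparing `initial_error` with `trained_error` shows the error falling as the cycle repeats. To confirm that `backward` computes the right gradients, nudge a single weight by a tiny amount and compare the resulting change in error (a finite-difference check) with the returned gradient.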
