Explain Step / Threshold and Leaky ReLU Activation Functions

(a) Step / Threshold Activation Function

Ans: The step activation function is also called the binary step function because it produces a binary output, either 0 or 1. The function has a threshold value: when the input is greater than the threshold, the output is 1; otherwise the output is 0. Hence it is also called the threshold activation function. Its limitation is that it does not allow multi-valued outputs, so it cannot classify inputs into several output categories.

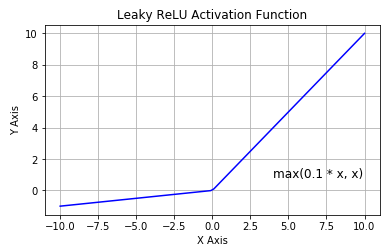
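The threshold rule described above can be sketched in a few lines of Python. The function name `binary_step` and the default threshold of 0 are assumptions made for illustration; they do not come from the original answer.

```python
def binary_step(x, threshold=0.0):
    """Return 1 if x is strictly greater than threshold, otherwise 0."""
    # Assumption: an input exactly equal to the threshold maps to 0,
    # following the "greater than threshold" wording of the answer.
    return 1 if x > threshold else 0


# Apply the function to a few sample inputs with a threshold of 0.5.
samples = (-1.0, 0.5, 0.7, 2.0)
outputs = [binary_step(x, threshold=0.5) for x in samples]
print(outputs)  # [0, 0, 1, 1]
```

Because the output can only be 0 or 1, a single unit of this kind can separate inputs into two classes at most, which is the limitation noted above.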

(b) Leaky ReLU Activation Function

Leaky ReLU is an extension of the ReLU activation function that overcomes the dying ReLU problem. In the dying ReLU problem, a neuron becomes inactive: it outputs 0 for any input provided to it, so its gradient is also 0 and it stops learning, which degrades the performance of the neural network. To rectify this, Leaky ReLU has a small non-zero slope for negative inputs, unlike ReLU, which outputs 0 for them. For negative inputs the gradient is a small constant α, typically 0.01. A commonly cited limitation of Leaky ReLU is that it is linear on each side of zero, which can limit its usefulness for complex classification.

$$
R(z) = \begin{cases} z, & z > 0 \\ \alpha z, & z \le 0 \end{cases}
$$
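As a minimal sketch of the piecewise definition above, the following Python compares ReLU and Leaky ReLU on a negative input. The function names and the default `alpha=0.01` are illustrative assumptions, with `alpha` standing in for α.

```python
def relu(z):
    """Plain ReLU: passes positive inputs through, outputs 0 otherwise."""
    return z if z > 0 else 0.0


def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: z for z > 0, alpha * z for z <= 0."""
    return z if z > 0 else alpha * z


def leaky_relu_grad(z, alpha=0.01):
    """Gradient of Leaky ReLU: 1 for z > 0, alpha for z <= 0."""
    # Assumption: the gradient at z = 0 is taken to be alpha,
    # matching the z <= 0 branch of the definition.
    return 1.0 if z > 0 else alpha


# A negative input is zeroed by ReLU but only scaled down by Leaky ReLU.
print(relu(-2.0))             # 0.0
print(leaky_relu(-2.0))       # -0.02
print(leaky_relu_grad(-2.0))  # 0.01
```

Since the gradient for negative inputs is `alpha` rather than 0, a unit that receives only negative inputs can still be updated during training, which is how Leaky ReLU avoids the dying ReLU problem.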