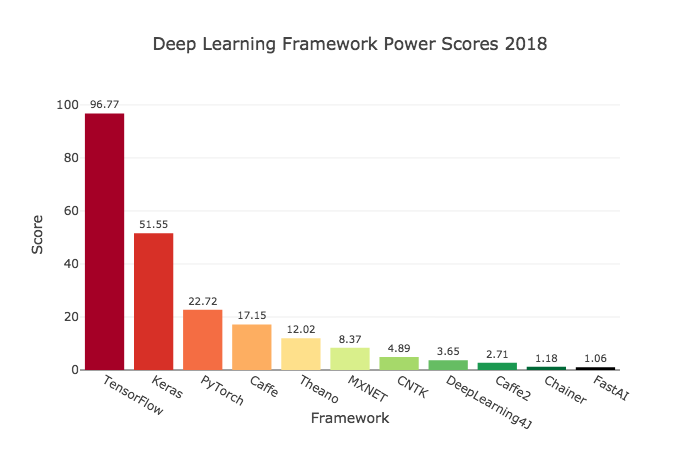

What are the most popular deep learning libraries?

Deep learning is evolving rapidly. As of 2019, the most popular deep learning libraries are:

- TensorFlow

- PyTorch

- Sonnet

- Keras

- MXNet

- Gluon

- Swift

- Chainer

- DL4J

- ONNX

TensorFlow:

TensorFlow is handy for creating and experimenting with deep learning architectures, and its design makes it convenient to integrate different kinds of data, such as graphs, SQL tables, and images, in one pipeline. It is backed by Google, which makes it likely to stay around for a while, so it makes sense to invest time and resources in learning it.

PyTorch:

In PyTorch, the process of training a neural network is simple and clear. PyTorch also supports data parallelism and distributed training, and many pre-trained models are available for it. It is particularly well suited to small projects and prototyping.
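To illustrate how compact that training process is, here is a minimal sketch of a PyTorch training loop. The toy task (fitting y = 3x + 1 with a little noise), the single linear layer, the learning rate, and the number of steps are assumptions chosen for the example, not a recipe for a real project.

```python
import torch
from torch import nn

# Toy regression data (illustrative only): y = 3x + 1 plus a little noise.
torch.manual_seed(0)
x = torch.linspace(-1, 1, 100).unsqueeze(1)  # shape (100, 1)
y = 3 * x + 1 + 0.05 * torch.randn_like(x)

model = nn.Linear(1, 1)  # a single linear layer: y_hat = w * x + b
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for step in range(200):
    optimizer.zero_grad()          # clear gradients from the previous step
    loss = loss_fn(model(x), y)    # forward pass and loss
    loss.backward()                # backpropagation
    optimizer.step()               # gradient-descent update of w and b

print(f"final loss: {loss.item():.4f}")
print("weight:", model.weight.item(), "bias:", model.bias.item())
```

The four lines inside the loop (zero the gradients, compute the loss, call `backward()`, call `step()`) are the same for much larger models; only the model, the data, and the optimizer settings change.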

Sonnet:

Sonnet is DeepMind’s neural network library built on top of TensorFlow. Its main advantage is that you can use it to reproduce the research in DeepMind’s papers more easily than with Keras, since DeepMind uses Sonnet itself.

Keras:

Keras is the best deep learning framework for those who are just starting out. It is ideal for learning, for prototyping simple ideas, and for understanding the essence of the various models and how they are trained.

Keras is a beautifully designed API. Its functional API handles the common cases well and stays out of your way for more exotic applications, and Keras does not block access to the lower-level framework underneath it.

Keras code tends to be more readable and succinct.

Keras's model serialization/deserialization APIs, callbacks, and data streaming using Python generators are very mature.
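The callbacks and serialization APIs mentioned above can be sketched as follows. This is a minimal example assuming a recent Keras release that supports the native `.keras` file format, with NumPy installed; the toy data (the target is the sum of four features), the layer sizes, and the file name `model.keras` are made up for illustration, and streaming from a Python generator is not shown.

```python
import os
import tempfile

import keras
import numpy as np

# Toy data (illustrative): 256 samples with 4 features; the target is their sum.
rng = np.random.default_rng(0)
x = rng.normal(size=(256, 4)).astype("float32")
y = x.sum(axis=1, keepdims=True)

model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# Callback: stop training once the training loss has not improved for 3 epochs.
early_stop = keras.callbacks.EarlyStopping(monitor="loss", patience=3)
history = model.fit(x, y, epochs=20, batch_size=32, verbose=0, callbacks=[early_stop])

# Serialization round trip: save to a .keras file and load it back.
path = os.path.join(tempfile.mkdtemp(), "model.keras")
model.save(path)
restored = keras.models.load_model(path)

# The restored model should reproduce the original model's predictions.
np.testing.assert_allclose(
    model.predict(x, verbose=0), restored.predict(x, verbose=0), rtol=1e-5, atol=1e-5
)
print("epochs run:", len(history.history["loss"]))
```

Saving and loading a model is one call each, and the callback plugs into `fit` without changing the training code itself.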

MXNet:

- Support for multiple GPUs

- Clean, easily maintainable code

- Fast problem-solving ability

Gluon:

In Gluon, you can define neural networks using simple, clear, and concise code.

It brings the training algorithm and the neural network model together, providing flexibility during development without sacrificing performance.

Gluon lets you define dynamic neural network models, meaning they can be built on the fly, with any structure, using any of Python’s native control flow.
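The idea of a dynamic model built with native Python control flow can be sketched as below. To keep all examples in one framework, this sketch uses PyTorch, which follows the same define-by-run approach, rather than Gluon's own API. The `TinyRNN` class, its sizes, and the convention that an all-zero step marks padding are hypothetical choices for the illustration.

```python
import torch
from torch import nn


class TinyRNN(nn.Module):
    """Hypothetical toy model whose computation graph depends on the input."""

    def __init__(self, input_size=3, hidden_size=5):
        super().__init__()
        self.cell = nn.RNNCell(input_size, hidden_size)
        self.hidden_size = hidden_size

    def forward(self, seq):
        # seq has shape (length, input_size); the length may differ between calls.
        h = torch.zeros(1, self.hidden_size)
        steps = 0
        for x_t in seq:  # ordinary Python loop, recorded as it runs
            if torch.all(x_t == 0):  # data-dependent branch: stop at an all-zero padding step
                break
            h = self.cell(x_t.unsqueeze(0), h)
            steps += 1
        return h, steps


torch.manual_seed(0)
model = TinyRNN()
seq = torch.randn(4, 3)
padded = torch.cat([seq, torch.zeros(3, 3)])  # same sequence followed by 3 padding steps

h_seq, n_seq = model(seq)
h_pad, n_pad = model(padded)
print(n_seq, n_pad)                  # both calls run the cell 4 times
print(torch.allclose(h_seq, h_pad))  # so the final hidden states match

# Gradients flow through whatever graph was actually built on this call.
h_pad.sum().backward()
print(model.cell.weight_ih.grad.shape)
```

Because the graph is recorded while the `for` loop and the `if` run, each call can take a different path through the model without any special graph-building syntax.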

Swift:

Swift is a great choice if dynamic languages do not suit your tasks. With a dynamically typed language, a training run can go for hours and then crash on a type error. Swift is a statically typed language, so you know before any line of code runs that the types are correct.

Chainer:

According to its own benchmarks, Chainer is notably faster than other Python-oriented frameworks, with TensorFlow the slowest of a test group that includes MXNet and CNTK.

Chainer offers better GPU and GPU data center performance than TensorFlow, which is optimized for Google's TPU architecture. Chainer also recently ranked first in GPU data center performance.

Chainer also has an object-oriented programming style.

DL4J:

DL4J (Deeplearning4j) is a very good choice if you are looking for a deep learning framework for Java.

ONNX:

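ONNX (Open Neural Network Exchange) is an open format for representing deep learning models. It is good news for PyTorch developers, because PyTorch models can be exported to ONNX and then run or deployed with other frameworks and runtimes that support the format.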