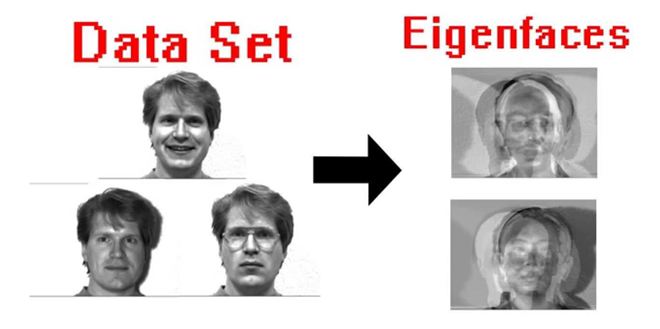

What is meant by Eigenfaces? Explain in detail.

The Eigenface method uses principal component analysis (PCA) to linearly project the image space onto a low-dimensional feature space.

As correlation methods are computationally expensive and require great amounts of storage, it is natural to pursue dimensionality reduction schemes. A technique now commonly used for dimensionality reduction in computer vision, particularly in face recognition, is principal components analysis (PCA) [13, 22, 27, 28, 29]. PCA techniques, also known as Karhunen-Loeve methods, choose a dimensionality-reducing linear projection that maximizes the scatter of all projected samples. More formally, let us consider a set of $N$ sample images $\{x_1, x_2, \ldots, x_N\}$ taking values in an $n$-dimensional feature space, and assume that each image belongs to one of $c$ classes $\{1, 2, \ldots, c\}$.

Let us also consider a linear transformation mapping the original $n$-dimensional feature space into an $m$-dimensional feature space, where $m < n$. Denoting by $W \in \mathbb{R}^{n \times m}$ a matrix with orthonormal columns, the new feature vectors $y_k \in \mathbb{R}^m$ are defined by the following linear transformation:

$$y_k = W^T x_k, \qquad k = 1, 2, \ldots, N.$$

Let the total scatter matrix $S_T$ be defined as

$$S_T = \sum_{k=1}^{N} (x_k - \mu)(x_k - \mu)^T,$$

where $\mu \in \mathbb{R}^n$ is the mean image of all samples. Note that after applying the linear transformation, the scatter of the transformed feature vectors $\{y_1, y_2, \ldots, y_N\}$ is $W^T S_T W$. In PCA, the optimal projection $W_{opt}$ is chosen to maximize the determinant of the total scatter matrix of the projected samples.
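The projection described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation. It relies on the standard result that the determinant of the projected scatter matrix is maximized when the columns of W are the eigenvectors of the total scatter matrix with the m largest eigenvalues. The function names `pca_projection` and `project`, the row-per-image layout of `X`, and the random stand-in data are all assumptions made for the example.

```python
import numpy as np

def pca_projection(X, m):
    """Return (W, mu) for samples X of shape (N, n), one flattened image per row.

    W has shape (n, m) with orthonormal columns: the eigenvectors of the
    total scatter matrix S_T belonging to its m largest eigenvalues.
    """
    mu = X.mean(axis=0)                      # mean image, shape (n,)
    A = X - mu                               # centered samples, shape (N, n)
    S_T = A.T @ A                            # total scatter matrix, shape (n, n)
    eigvals, eigvecs = np.linalg.eigh(S_T)   # S_T is symmetric; eigenvalues ascending
    order = np.argsort(eigvals)[::-1][:m]    # indices of the m largest eigenvalues
    W = eigvecs[:, order]
    return W, mu

def project(X, W):
    """Apply y_k = W^T x_k to every row x_k of X; the result has shape (N, m)."""
    return X @ W

# Hypothetical data: 20 random "images" of 16 pixels each stand in for real faces.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 16))
W, mu = pca_projection(X, m=3)
Y = project(X, W)                            # reduced feature vectors, shape (20, 3)
```

Forming the full n-by-n scatter matrix is only practical when n is small, as in this toy example; the sketch is meant to mirror the definitions, not to scale to full-resolution images.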