What is the learning rate, and how can it affect accuracy and performance in neural networks?

Ans: A neural network learns to approximate a function that best maps inputs to outputs, using examples from the training dataset.

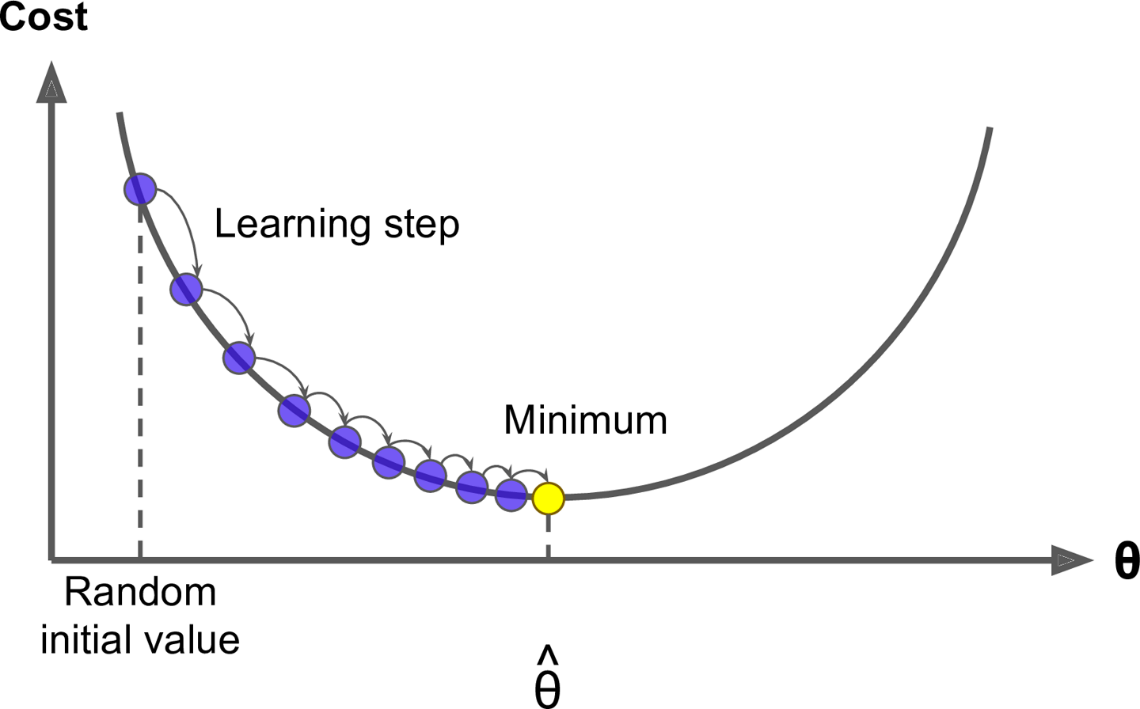

The learning rate hyperparameter controls the rate or speed at which the model learns. More precisely, it scales how much of the error assigned to each weight is applied when that weight is updated.
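As a hedged illustration, the update for plain gradient descent can be written as below. The symbols are assumptions introduced here, not notation from the original answer: $w_t$ is the weight vector at update step $t$, $\eta$ is the learning rate, and $\nabla_w L(w_t)$ is the gradient of the loss $L$ with respect to the weights. Other optimizers (momentum, Adam, and so on) modify this rule, but the learning rate plays the same scaling role.

$$
w_{t+1} = w_t - \eta \, \nabla_w L(w_t)
$$

Doubling $\eta$ doubles the size of every step taken along the negative gradient.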

Given a perfectly configured learning rate, the model will learn the best approximation of the function that its number of layers and number of nodes per layer allow, within a given number of training epochs.

Generally, a large learning rate allows the model to learn faster, at the cost of arriving at a sub-optimal final set of weights. A smaller learning rate may allow the model to learn a more optimal or even globally optimal set of weights, but may take significantly longer to train.

At the extremes, a learning rate that is too large produces weight updates that are too large, and the performance of the model oscillates over training epochs. Oscillating performance is said to be caused by weights that diverge. A learning rate that is too small may never converge or may get stuck on a suboptimal solution.
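The behaviour at these extremes can be seen on a toy problem. The sketch below is not a neural network: it assumes a single weight `w` and the loss `f(w) = w**2`, whose minimum is at `w = 0`. The function name `gradient_descent` and the four learning rate values are hypothetical choices made for illustration only.

```python
def gradient_descent(lr, steps=50, w0=1.0):
    """Minimize f(w) = w**2 from w0 with plain gradient descent.

    Returns the list of w values visited, including the starting point.
    """
    w = w0
    history = [w]
    for _ in range(steps):
        grad = 2.0 * w      # derivative of w**2 with respect to w
        w = w - lr * grad   # update scaled by the learning rate
        history.append(w)
    return history


# Illustrative (assumed) learning rates, from too small to too large.
too_small = gradient_descent(lr=0.001)
reasonable = gradient_descent(lr=0.1)
oscillating = gradient_descent(lr=1.0)
diverging = gradient_descent(lr=1.1)

for name, hist in [
    ("too small", too_small),
    ("reasonable", reasonable),
    ("oscillating", oscillating),
    ("diverging", diverging),
]:
    print(f"{name:12s} final w = {hist[-1]:.6g}")
```

For this particular loss, each update multiplies `w` by `1 - 2 * lr`. With `lr=0.1` the weight shrinks steadily toward 0; with `lr=0.001` it has barely moved after 50 steps; with `lr=1.0` it flips between 1 and -1 without making progress; and with `lr=1.1` it changes sign at every step while growing in magnitude. Real loss surfaces are far less regular, so the thresholds here should not be read as general guidance.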