Briefly explain mini-batch gradient descent.

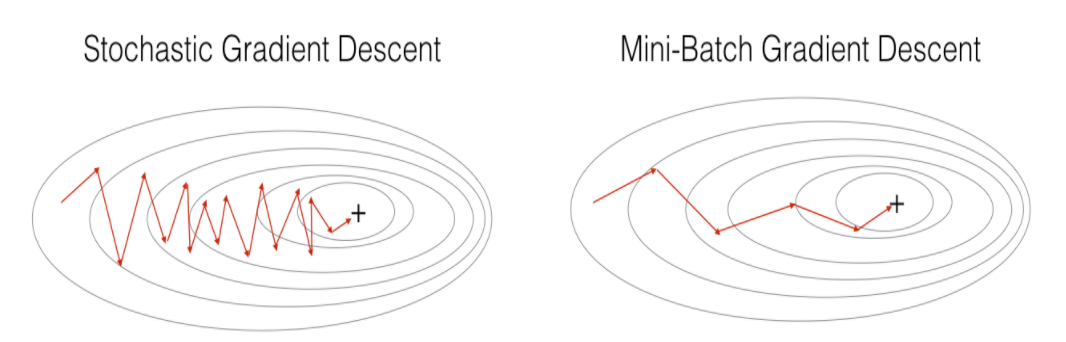

Ans: Mini-batch gradient descent is a variation of the gradient descent algorithm. It splits the training dataset into small batches, and each batch is used to calculate the model error and update the model coefficients.

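Implementations may sum or average the gradient over the mini-batch. Using several examples per update reduces the variance of the gradient estimate compared with the single-example updates of stochastic gradient descent.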
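Whether the gradient is summed or averaged over the batch, one update step can be written as follows. The symbols are assumptions introduced here for illustration: $\theta$ for the model coefficients, $\eta$ for the learning rate, $B$ for the current mini-batch, and $L$ for the per-example loss.

$$
\theta \leftarrow \theta - \eta \cdot \frac{1}{|B|} \sum_{i \in B} \nabla_\theta L(x_i, y_i; \theta)
$$

This is the averaged form. The summed form drops the factor $\frac{1}{|B|}$, which is equivalent to scaling the learning rate by the batch size.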

Mini-batch gradient descent seeks a balance between the robustness of stochastic gradient descent and the efficiency of batch gradient descent. It is the most common implementation of gradient descent in deep learning.
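The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: it assumes a linear model trained with mean squared error, and the function name `minibatch_gd`, its parameters, and the synthetic data are all hypothetical choices made for the example.

```python
import numpy as np

def minibatch_gd(X, y, batch_size=32, lr=0.1, epochs=200, seed=0):
    """Fit y ~ X @ w + b with mini-batch gradient descent on mean squared error."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        order = rng.permutation(n)                 # reshuffle the data every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]  # indices of the current mini-batch
            Xb, yb = X[idx], y[idx]
            err = Xb @ w + b - yb                  # model error on this batch
            grad_w = 2.0 * Xb.T @ err / len(idx)   # gradient averaged over the batch
            grad_b = 2.0 * err.mean()
            w -= lr * grad_w                       # update coefficients after each batch
            b -= lr * grad_b
    return w, b

# Synthetic data (assumed for the example): y = 3*x1 - 2*x2 + 1 plus small noise
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(500, 2))
y = X @ np.array([3.0, -2.0]) + 1.0 + 0.01 * data_rng.normal(size=500)

w, b = minibatch_gd(X, y)
print(w, b)
```

With these settings the coefficients should land close to the values used to generate the data. Setting `batch_size=1` turns the same loop into stochastic gradient descent, and `batch_size=len(X)` turns it into batch gradient descent.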

Advantages

Mini-batch gradient descent updates the model more frequently than batch gradient descent, which gives more robust convergence and can help the optimizer escape local minima.

Batched updates are computationally more efficient than stochastic gradient descent, because the gradient for a whole batch can be computed in one vectorized pass instead of one example at a time.

Disadvantages

Mini-batch gradient descent requires an additional hyperparameter, the mini-batch size, which must be chosen before training.
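To see what this hyperparameter controls, the short sketch below counts how many coefficient updates one pass over the data produces for different batch sizes. The dataset size of 1000 and the helper name `updates_per_epoch` are assumptions made for the example.

```python
import math

def updates_per_epoch(n_samples, batch_size):
    """Number of coefficient updates in one pass over the training set."""
    return math.ceil(n_samples / batch_size)

n_samples = 1000  # hypothetical training set size
for batch_size in (1, 32, 1000):
    print(batch_size, updates_per_epoch(n_samples, batch_size))
# 1 1000   (stochastic gradient descent: one update per example)
# 32 32    (mini-batch: the last batch holds the remaining 8 examples)
# 1000 1   (batch gradient descent: one update per epoch)
```

Smaller batches give more frequent but noisier updates, while larger batches give fewer, smoother ones, so the batch size is usually tuned together with the learning rate.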