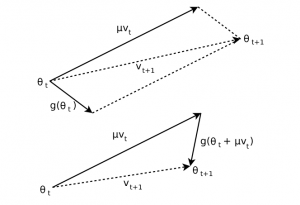

Ans: Deep neural networks are generally trained on noisy data, and that noise carries over into the batches fed to the optimizer, so the updates fluctuate from one batch to the next. Exponentially weighted averages reduce the effect of this noise during optimization. The exponentially weighted average algorithm is used to identify the trend in noisy data.

This algorithm averages the current value of a parameter with its values from previous iterations, giving older values exponentially smaller weights. The averaging retains the trend and averages out the noise. Momentum-based gradient descent uses this method to stay robust against noise in the data samples, which results in faster training of deep neural networks.
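The averaging described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `ewa`, the smoothing factor `beta = 0.9`, and the synthetic noisy signal are all assumptions made for the example. It uses the common recurrence `v_t = beta * v_{t-1} + (1 - beta) * x_t`.

```python
import random


def ewa(values, beta=0.9):
    """Return the exponentially weighted average of a sequence.

    Recurrence: v_t = beta * v_{t-1} + (1 - beta) * x_t, starting from v_0 = 0.
    A larger beta averages over more past values and gives a smoother curve.
    """
    v = 0.0
    smoothed = []
    for x in values:
        v = beta * v + (1.0 - beta) * x
        smoothed.append(v)
    return smoothed


# Hypothetical noisy data: a slow linear trend plus Gaussian noise.
random.seed(0)
trend = [0.1 * t for t in range(200)]
noisy = [y + random.gauss(0.0, 1.0) for y in trend]

smooth = ewa(noisy, beta=0.9)


def total_variation(seq):
    """Sum of absolute step-to-step changes, used here as a rough noise measure."""
    return sum(abs(b - a) for a, b in zip(seq, seq[1:]))


print("variation of noisy signal:   ", round(total_variation(noisy), 2))
print("variation of smoothed signal:", round(total_variation(smooth), 2))
```

Because `v` starts at zero, the first few averaged values are biased toward zero; momentum-style optimizers apply the same recurrence to the gradients rather than to raw data.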