Explain multicollinearity in detail. How can it be reduced?

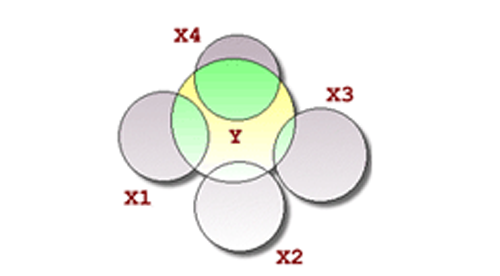

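Multicollinearity is a phenomenon in which two or more predictor (independent) variables in a regression model are highly correlated, so that one of them can be linearly predicted from the others with considerable accuracy. Two variables are perfectly collinear if there is an exact linear relationship between them; in that case the ordinary least squares coefficients are not uniquely determined. Less extreme multicollinearity does not necessarily hurt predictions, but it inflates the variance of the coefficient estimates, which makes individual coefficients unstable and hard to interpret.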
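A common way to measure how well one predictor is linearly predicted by the others is the variance inflation factor, VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j on all the other predictors. The sketch below computes it with plain NumPy on synthetic data; the data and the helper name `vif` are illustrative assumptions (statsmodels offers `variance_inflation_factor` in `statsmodels.stats.outliers_influence` for the same purpose). A common rule of thumb flags values above 5 or 10.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor for each column of X (n_samples x n_features).

    VIF_j = 1 / (1 - R_j^2), where R_j^2 is from regressing column j
    on all other columns plus an intercept.
    """
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])   # intercept + other predictors
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        centered = y - y.mean()
        r2 = 1.0 - (resid @ resid) / (centered @ centered)
        out[j] = 1.0 / (1.0 - r2)
    return out


# Synthetic example (assumed data): x2 is almost a linear function of x1.
rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
x2 = 2.0 * x1 + rng.normal(scale=0.1, size=n)  # nearly collinear with x1
x3 = rng.normal(size=n)                        # unrelated to x1 and x2
X = np.column_stack([x1, x2, x3])

v = vif(X)
print(np.round(v, 1))  # x1 and x2 get very large VIFs, x3 stays near 1
```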

There are two types of multicollinearity:

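Structural multicollinearity is a mathematical artifact caused by creating new predictors from other predictors, for example adding a squared term x² or an interaction term x1·x2 to a model that already contains x or x1.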
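A small illustration of structural multicollinearity, assuming two independent, strictly positive synthetic predictors: the interaction term built from them is correlated with its parent `x1` even though `x1` and `x2` are unrelated. The variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(1)
x1 = rng.uniform(1, 10, size=1000)   # strictly positive predictor
x2 = rng.uniform(1, 10, size=1000)   # generated independently of x1
x1x2 = x1 * x2                       # new predictor built from existing ones

r12 = np.corrcoef(x1, x2)[0, 1]      # near 0: the original predictors are unrelated
r1_12 = np.corrcoef(x1, x1x2)[0, 1]  # clearly positive: the product inherits x1

print(f"corr(x1, x2)    = {r12:.3f}")
print(f"corr(x1, x1*x2) = {r1_12:.3f}")
```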

Data-based multicollinearity results from a poorly designed experiment, reliance on purely observational data, or the inability to manipulate the system on which the data are collected.

Ways to reduce multicollinearity

Drop one of the correlated variables. Because the dropped variable carries largely redundant information, the remaining coefficients usually become more stable and their significance easier to assess.
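A minimal sketch of why dropping helps, assuming synthetic data where `x2` is a near-copy of `x1` and only `x1` drives `y`: it compares the standard error of the `x1` coefficient with and without `x2` in the model. The helper `ols_se` is hypothetical and uses NumPy only. Dropping is harmless in this setup because `x2` adds no information; if the dropped variable had its own effect on `y`, omitting it would bias the remaining coefficients.

```python
import numpy as np


def ols_se(X, y):
    """OLS coefficients and standard errors; an intercept is added as column 0."""
    n = len(y)
    A = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    sigma2 = resid @ resid / (n - A.shape[1])   # unbiased residual variance
    cov = sigma2 * np.linalg.inv(A.T @ A)       # covariance of the estimates
    return coef, np.sqrt(np.diag(cov))


rng = np.random.default_rng(2)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)   # near duplicate of x1 (assumed)
y = 3.0 * x1 + rng.normal(size=n)          # only x1 affects y

_, se_both = ols_se(np.column_stack([x1, x2]), y)
_, se_drop = ols_se(x1[:, None], y)

# Index 1 is the x1 coefficient in both fits (index 0 is the intercept).
print("SE of x1 coefficient with x2 in the model:", se_both[1])
print("SE of x1 coefficient after dropping x2:   ", se_drop[1])
```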

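Center or standardize your independent variables. Subtracting each variable's mean before building squared or interaction terms reduces structural multicollinearity; it does not remove data-based multicollinearity.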
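A quick check of the centering effect, assuming a synthetic non-negative predictor `x` and its square: the raw pair is almost perfectly correlated, while the centered pair is nearly uncorrelated. Dividing by the standard deviation does not change correlations, so the centering step is what does the work here.

```python
import numpy as np

rng = np.random.default_rng(3)
x = rng.uniform(0, 10, size=1000)   # strictly non-negative predictor (assumed)

raw_corr = np.corrcoef(x, x**2)[0, 1]

xc = x - x.mean()                   # center before squaring
centered_corr = np.corrcoef(xc, xc**2)[0, 1]

print(f"corr(x, x^2)   = {raw_corr:.3f}")
print(f"corr(xc, xc^2) = {centered_corr:.3f}")
```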

Obtain more data. This is the preferred solution. More data produces more precise parameter estimates: when the variance of a regression coefficient estimate is written in terms of the sample size and the variance inflation factor, the sample size appears in the denominator, so the variance shrinks as the sample grows even if the degree of multicollinearity stays the same.
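For a linear model with an intercept, the variance of the estimate of coefficient $\beta_j$ can be written as follows, where $\sigma^2$ is the error variance, $n$ the sample size, $s_{x_j}^2$ the sample variance of predictor $x_j$, and $R_j^2$ the $R^2$ from regressing $x_j$ on the other predictors:

$$
\operatorname{Var}(\hat\beta_j) = \frac{\sigma^2}{(n-1)\, s_{x_j}^2} \cdot \underbrace{\frac{1}{1 - R_j^2}}_{\text{VIF}_j}
$$

Multicollinearity raises $\text{VIF}_j$, while a larger $n$ shrinks the first factor, which is why collecting more data can offset it.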