Google Launches a Small New AI Chip for On-Device Machine Learning

Two years ago, Google launched its Tensor Processing Units (TPUs). These chips run in the company’s data centers and specialize in certain artificial intelligence workloads.

But with the growth of the Internet of Things and rising demand for intelligent computing on the device itself, Google is now bringing its artificial intelligence computing experience down from the cloud with a new Edge TPU (a TPU for edge computing). The Edge TPU is a small artificial intelligence accelerator that lets IoT devices handle machine learning tasks.

Computing directly on the device is known as edge computing, which is why Google calls this class of chip the Edge TPU. “The device” here means an IoT endpoint: what we usually call smart terminals, such as smartphones, smart smoke detectors, and all sorts of other smart hardware.

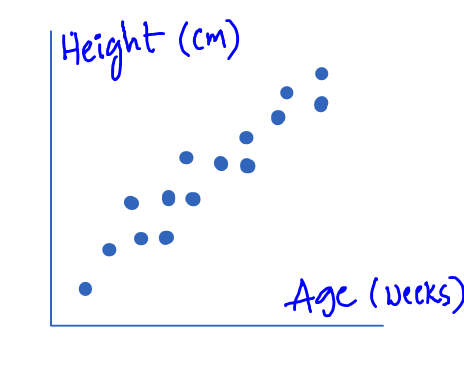

Google’s new Edge TPU, placed on top of a one-cent coin

The Edge TPU is primarily intended to handle the class of machine learning tasks known as “inference.” In the machine learning process, inference is the stage at which an already trained model carries out a task, such as identifying an object in a picture. Google’s earlier server-side TPUs were mainly responsible for training models. The Edge TPUs now being released are mainly responsible for running inference with trained models.
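To make the idea of inference concrete, here is a minimal Python sketch. It does not use the Edge TPU or any Google API; the weights, feature values, and labels are hypothetical stand-ins for a model that was trained elsewhere. The point is only that inference is a forward pass over frozen parameters, with no learning involved.

```python
import math

# Hypothetical parameters, standing in for the result of an earlier training run.
TRAINED_WEIGHTS = [2.0, -1.0]
TRAINED_BIAS = -0.5


def infer(features):
    """Forward pass only: score the input with frozen weights."""
    score = sum(w * x for w, x in zip(TRAINED_WEIGHTS, features)) + TRAINED_BIAS
    # Squash the score into a probability with the logistic function.
    return 1.0 / (1.0 + math.exp(-score))


def classify(features, threshold=0.5):
    """Turn the probability into a label; the weights are never updated."""
    return "object" if infer(features) >= threshold else "no object"
```

Calling `classify([1.0, 0.0])` runs a single forward pass and returns a label. Nothing in this code changes the weights, which is what separates inference from training.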

Edge TPUs are destined for enterprise settings rather than our smartphones. Automated quality inspection on a factory production line, for example, is a good fit. The traditional approach collects data with hardware devices and sends it over the network to a server for analysis. Performing the analysis directly on the device has several advantages over that approach: on-device machine learning is more secure, has less waiting and downtime, and makes decisions faster. (These advantages are the current selling points of on-device machine learning.)

The Edge TPU is the little brother of the TPU, the dedicated processor Google uses to accelerate artificial intelligence computing. Other customers can use TPU technology by purchasing Google Cloud services.

Google is not the only company making this kind of artificial intelligence chip. ARM, Qualcomm, MediaTek, and many other chipmakers are building their own artificial intelligence accelerators, and Nvidia’s GPUs are the best-known chips on the market for training artificial intelligence models.

Google’s advantage, however, is that it controls the full stack of artificial intelligence hardware, products, and services, something its competitors have not achieved. Users can store data in Google Cloud, train their own algorithms on TPUs, and then use Edge TPUs for the final on-device machine learning inference. The machine learning software itself is also developed with TensorFlow, a programming framework that Google created and operates.
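The three-stage workflow described here (store data, train in the cloud, infer on the device) can be sketched in plain Python. This is an illustration under stated assumptions, not Google’s pipeline: it uses no TensorFlow or Edge TPU API, and the function names, the one-feature logistic model, the JSON hand-off, and the sensor readings are all hypothetical.

```python
import json
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_in_cloud(samples, labels, epochs=500, lr=0.5):
    """Cloud stage: fit a one-feature logistic model by gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = sigmoid(w * x + b) - y  # gradient of the log loss
            w -= lr * error * x
            b -= lr * error
    return {"w": w, "b": b}


def export_model(model):
    """Hand-off: serialize the trained parameters for shipping to devices."""
    return json.dumps(model)


def infer_on_device(model_blob, x):
    """Edge stage: load frozen parameters and run a forward pass only."""
    model = json.loads(model_blob)
    return sigmoid(model["w"] * x + model["b"]) >= 0.5


# Hypothetical stored sensor readings with labels (0 = normal, 1 = alert).
readings = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 1, 1]
blob = export_model(train_in_cloud(readings, labels))
```

The expensive loop runs once in `train_in_cloud`, while `infer_on_device` needs only the small serialized blob and a single multiply-add per reading. That asymmetry is the reason for splitting the work between a data center chip and a small edge accelerator.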

This “everything in-house” vertical integration has obvious advantages. Google can ensure that each part of the system communicates with the others as efficiently and smoothly as possible, which makes it easier and more convenient for users to work within Google’s ecosystem.

“Your sensors will no longer just mindlessly collect data.”

In a blog post, Injong Rhee, vice president of the Internet of Things division at Google Cloud, described the new hardware as “an ASIC chip specifically designed to run TensorFlow Lite machine learning models at the edge.” Rhee said: “Edge TPUs are designed to complement and improve our Cloud TPU products. You can use TPUs to accelerate the training of machine learning models in the cloud, and then give devices at the edge lightweight, fast machine learning decision-making capabilities. Your sensors are no longer just data collection terminals; they can also make local, real-time, intelligent decisions.”

Interestingly, Google is also offering the Edge TPU to customers as a development kit, so they can easily test the hardware’s performance and find out whether Edge TPUs are right for their products. The kit includes a SOM (system on module) that contains the Edge TPU chip, an NXP CPU, and a Microchip secure element. The SOM also has Wi-Fi connectivity and can be connected to a computer via USB or PCI-E. The development kit is still in beta, and users must apply for access to try it.

One detail in Google’s announcement seems inconspicuous but is quite intriguing. Google does not usually let the public get its hands on its artificial intelligence hardware. But if Google wants customers to actually use this technology, it has to let them try it first, rather than asking them to simply trust that “Google is great” and step into the embrace of Google’s artificial intelligence family (the AI Googlesphere). This development board is not just a lure for other companies; it also shows how serious Google is about the entire market for AI computing products and services.