Hands-On Guide To Different Tokenization Methods In NLP

Tokenization is the process by which a large amount of text is partitioned into smaller parts called tokens.

Natural language processing is used for building applications such as text classification, intelligent chatbots, sentiment analysis, and language translation. To accomplish these purposes, it is essential to understand the patterns in the text. Tokens are very useful for finding such patterns, and tokenization is also considered a base step for stemming and lemmatization.
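As a small illustration of how tokens expose patterns, the sketch below counts token frequencies with Python's standard `collections.Counter`. The sample sentence is made up, and a plain whitespace split stands in for a real tokenizer.

```python
from collections import Counter

# Made-up sample text; a whitespace split stands in for a real tokenizer here.
text = "the cat sat on the mat and the dog sat too"
tokens = text.split()

# Count how often each token occurs and keep the two most frequent ones.
counts = Counter(tokens)
top_two = counts.most_common(2)
print(top_two)  # [('the', 3), ('sat', 2)]
```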

In this article, we will begin with the first step of data preprocessing, i.e. tokenization. We will then implement different techniques in Python to tokenize text data.

Tokenize Words Using NLTK

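Let’s begin with the tokenization of words using the NLTK library. Its word_tokenize() function breaks the given string into a list of word and punctuation tokens. Unlike a plain whitespace split, it also separates punctuation such as the final full stop into tokens of its own.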

Example 1:

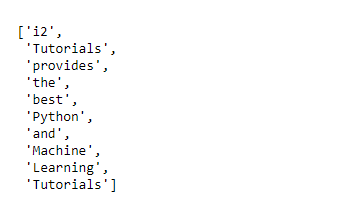

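# Tokenize words
# (word_tokenize needs NLTK's Punkt models: nltk.download('punkt'),
#  or nltk.download('punkt_tab') on recent NLTK releases)
from nltk.tokenize import word_tokenize

text = "i2 Tutorials provides the best Python and Machine Learning Tutorials."
word_tokenize(text)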

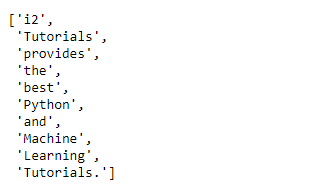

Output:
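To see why `word_tokenize()` returns the final full stop as its own token, here is a rough approximation built only on Python's `re` module. The helper `simple_word_tokenize` and its pattern are hypothetical and much simpler than NLTK's Treebank-style rules; for example, it keeps "don't" as one token, whereas `word_tokenize()` splits it into "do" and "n't".

```python
import re

# Hypothetical pattern: a word (optionally with one apostrophe part) or any
# single character that is neither a word character nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def simple_word_tokenize(text):
    """Return word tokens and standalone punctuation tokens (rough sketch)."""
    return TOKEN_PATTERN.findall(text)


print(simple_word_tokenize("i2 Tutorials provides the best Python and Machine Learning Tutorials."))
```

Because the pattern has no capturing groups, `findall` returns the full matched strings.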

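Next, we tokenize sentences rather than words. The sent_tokenize() function uses NLTK's pre-trained Punkt model, which splits the text at sentence-ending punctuation such as the full stop (.) while trying not to break at abbreviations.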

Example 2:

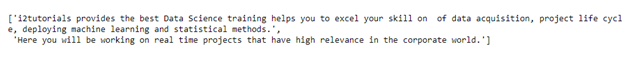

# Tokenize sentences
# (sent_tokenize also relies on NLTK's Punkt models)
from nltk.tokenize import sent_tokenize

text = "i2tutorials provides the best Data Science training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods. Here you will be working on real time projects that have high relevance in the corporate world."
sent_tokenize(text)

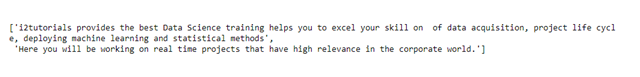

Output:
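Splitting at every full stop goes wrong on abbreviations such as "Dr.". Punkt learns which words are abbreviations from unlabelled text; the sketch below imitates only the outcome with a hard-coded, hypothetical `ABBREVIATIONS` set, so treat it as an illustration of the problem rather than of Punkt itself.

```python
# Hypothetical abbreviation list; Punkt learns abbreviations from data instead.
ABBREVIATIONS = {"dr", "mr", "mrs", "e.g", "i.e", "etc"}


def abbreviation_aware_split(text):
    """Split at . ! ? unless the word is a known abbreviation.

    Whitespace inside each sentence is normalised to single spaces.
    """
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        ends_sentence = word[-1] in ".!?"
        is_abbreviation = word.rstrip(".!?").lower() in ABBREVIATIONS
        if ends_sentence and not is_abbreviation:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing text without final punctuation
        sentences.append(" ".join(current))
    return sentences


text = "Dr. Smith teaches NLP. Tokenization comes first!"
naive = text.split(". ")  # also breaks after "Dr"
print(naive)
print(abbreviation_aware_split(text))
```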

Regular Expression

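A regular expression (regex) is a sequence of characters that defines a search pattern, and it can be used to match or find substrings in text. We will use NLTK's RegexpTokenizer, which is built on Python's re module, to tokenize words, and the re library directly to split a paragraph into sentences.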

Example 1:

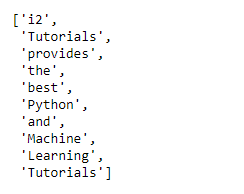

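from nltk.tokenize import RegexpTokenizer

# Keep runs of word characters and apostrophes; everything else separates tokens
# (a raw string avoids the invalid "\w" escape warning in recent Python versions)
tokenizer = RegexpTokenizer(r"[\w']+")

text = "i2 Tutorials provides the best Python and Machine Learning Tutorials."
tokenizer.tokenize(text)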

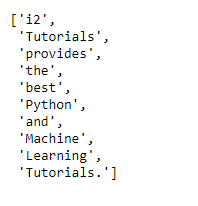

Output:
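With its default `gaps=False`, `RegexpTokenizer` returns the substrings that match the pattern, which is essentially what `re.findall` does. The sketch below reproduces the example with the standard `re` module alone and contrasts it with the opposite approach, where the pattern describes the separators instead (the idea behind `gaps=True`).

```python
import re

text = "i2 Tutorials provides the best Python and Machine Learning Tutorials."

# Pattern describes the tokens: keep runs of word characters and apostrophes.
matched = re.findall(r"[\w']+", text)

# Pattern describes the gaps: split on runs of whitespace instead.
# Punctuation stays attached to words in this variant.
gapped = re.split(r"\s+", text)

print(matched)
print(gapped)
```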

Example 2:

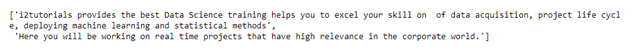

# Split sentences
import re

text = """i2tutorials provides the best Data Science training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods. Here you will be working on real time projects that have high relevance in the corporate world."""
# Split wherever '.', '!' or '?' is followed by a space
sentences = re.compile('[.!?] ').split(text)
sentences

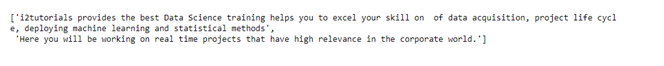

Output:
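The pattern `'[.!?] '` consumes the punctuation mark along with the space, so the returned sentences lose their terminators (except the last one, which is not followed by a space). A lookbehind assertion matches only the whitespace after the mark, as in this sketch on a shortened sample text.

```python
import re

# Shortened sample text, adapted from the article's example.
text = ("i2tutorials provides the best Data Science training. "
        "Here you will be working on real time projects!")

# The article's pattern consumes the punctuation mark together with the space.
dropped = re.compile('[.!?] ').split(text)

# A lookbehind only checks that the previous character is . ! or ?,
# so just the whitespace is consumed and each sentence keeps its terminator.
kept = re.compile(r"(?<=[.!?])\s+").split(text)

print(dropped)
print(kept)
```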

split()

Python's built-in split() method breaks a string into a list of substrings at the given separator. When no separator is specified, it splits at runs of whitespace.

Example 1:

text = """i2 Tutorials provides the best Python and Machine Learning Tutorials."""
# Splits at whitespace
text.split()

Output:
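Calling `split()` with no argument and calling `split(" ")` are not the same: the former treats any run of whitespace (spaces, tabs, newlines) as one separator and discards leading and trailing whitespace, while the latter splits at every single space and can return empty strings. The made-up string below shows the difference.

```python
text = "  Tokens\tare   separated by whitespace\n"

# No argument: runs of whitespace collapse and leading/trailing whitespace is dropped.
by_whitespace = text.split()

# Explicit single space: every space is a boundary, so empty strings appear
# and the tab and newline stay inside the tokens.
by_space = text.split(" ")

print(by_whitespace)
print(by_space)
```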

Example 2:

# Split sentences
text = """i2tutorials provides the best Data Science training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods. Here you will be working on real time projects that have high relevance in the corporate world."""
# Split at each full stop that is followed by a space
text.split('. ')

Output:
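When `split()` is given an explicit separator that also ends the string, the result contains a trailing empty string. A common clean-up, sketched below on a made-up example, is to strip each piece and drop the empty ones; note that this variant splits at "." alone, so the full stops themselves are discarded.

```python
text = "Tokenization comes first. Stemming comes later."

# The final "." leaves an empty string at the end of the list.
raw_pieces = text.split(".")

# Strip surrounding whitespace and discard empty pieces.
sentences = [piece.strip() for piece in raw_pieces if piece.strip()]
print(raw_pieces)
print(sentences)
```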

spaCy

spaCy is an open-source Python library for natural language processing; among other tasks, it tokenizes text into words and sentences.

Example 1:

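import spacy

# Load the small English pipeline
# (install it first with: python -m spacy download en_core_web_sm)
sp = spacy.load('en_core_web_sm')

sentence = sp('i2 Tutorials provides the best Python and Machine Learning Tutorials.')
print(sentence)

# Collect the Token objects produced by spaCy's tokenizer
L = []
for word in sentence:
    L.append(word)
L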

Output:
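spaCy's documentation describes its tokenizer as rule-based: the text is first split on whitespace, and then each substring is checked against special-case exceptions and has prefixes (such as an opening bracket or quote) and suffixes (such as a comma or full stop) peeled off. The sketch below imitates that loop with tiny, hypothetical rule sets (`PREFIXES`, `SUFFIXES`, `EXCEPTIONS`); it leaves out infixes and is not spaCy's implementation.

```python
# Hypothetical, heavily simplified rule sets; spaCy's real rules are far larger.
PREFIXES = ('"', "(", "[", "$")
SUFFIXES = ('"', ")", "]", ".", ",", "!", "?", "%")
# Special cases that are kept whole or split in a fixed way.
EXCEPTIONS = {"U.K.": ["U.K."], "don't": ["do", "n't"]}


def rule_based_tokenize(text):
    """Whitespace split, then peel prefixes/suffixes unless an exception matches."""
    tokens = []
    for chunk in text.split():
        prefixes, suffixes = [], []
        # Keep peeling while the remaining chunk is not a special case.
        while chunk not in EXCEPTIONS and len(chunk) > 1:
            if chunk.startswith(PREFIXES):
                prefixes.append(chunk[0])
                chunk = chunk[1:]
            elif chunk.endswith(SUFFIXES):
                suffixes.insert(0, chunk[-1])
                chunk = chunk[:-1]
            else:
                break
        tokens.extend(prefixes)
        tokens.extend(EXCEPTIONS.get(chunk, [chunk]))
        tokens.extend(suffixes)
    return tokens


print(rule_based_tokenize('"Hello," she said in the U.K. (really!)'))
```

Checking the exceptions again after every peel is what lets "U.K." survive intact even when it is followed by a closing bracket.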

Example 2:

Here we tokenize sentences.

# Split sentences
sentence = sp('i2tutorials provides the best Data Science training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods. Here you will be working on real time projects that have high relevance in the corporate world.')
print(sentence)

# doc.sents yields one Span per sentence; in en_core_web_sm the
# boundaries come from the dependency parser
x = []
for sent in sentence.sents:
    x.append(sent.text)
x

Output:
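Each item in `sentence.sents` is a `Span` that remembers where it sits in the original text (`start_char` and `end_char`), so sentences can always be mapped back to the source string. The sketch below reproduces only that bookkeeping with a plain regex and a hypothetical `sentence_spans` helper; its boundaries come from punctuation, not from spaCy's parser.

```python
import re

# Hypothetical pattern: a run of non-terminator characters plus any terminators.
SENTENCE_PATTERN = re.compile(r"[^.!?]+[.!?]*")


def sentence_spans(text):
    """Return (start_char, end_char, text) for each sentence-like span."""
    spans = []
    for match in SENTENCE_PATTERN.finditer(text):
        start, end = match.span()
        # Trim surrounding whitespace but keep offsets pointing into `text`.
        while start < end and text[start].isspace():
            start += 1
        while end > start and text[end - 1].isspace():
            end -= 1
        if start < end:
            spans.append((start, end, text[start:end]))
    return spans


text = "Tokens first. Then sentences!"
for start, end, sent in sentence_spans(text):
    print(start, end, sent)
```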

Gensim

The last library we cover in this article is Gensim, an open-source Python library for topic modelling and similarity retrieval on large corpora.

Example 1:

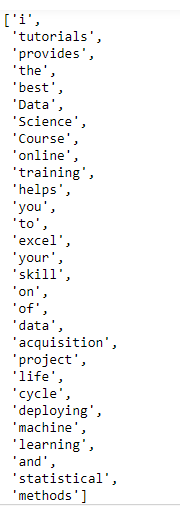

from gensim.utils import tokenize

text = """i2 tutorials provides the best Data Science Course online training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods."""
# tokenize() is a generator that yields alphabetic runs only,
# so digits and punctuation are dropped (e.g. "i2" becomes "i")
list(tokenize(text))

Output:
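`gensim.utils.tokenize()` keeps only alphabetic runs and accepts `lowercase` and `deacc` (strip accents) options. The following standard-library sketch mirrors that behaviour for illustration only; `alphabetic_tokenize` is a hypothetical name, and the accent stripping uses Unicode NFD decomposition, which is one common way to implement it.

```python
import re
import unicodedata

# Runs of word characters that are not digits (the same idea as gensim's
# alphabetic pattern); underscores still count as word characters.
ALPHABETIC = re.compile(r"(?:(?!\d)\w)+")


def alphabetic_tokenize(text, lowercase=False, deaccent=False):
    """Hypothetical stand-in for gensim.utils.tokenize, for illustration only."""
    if lowercase:
        text = text.lower()
    if deaccent:
        # Decompose accented letters (NFD) and drop the combining marks.
        decomposed = unicodedata.normalize("NFD", text)
        text = "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
    return ALPHABETIC.findall(text)


print(alphabetic_tokenize("i2 Tutorials, caf\u00e9 2024"))
print(alphabetic_tokenize("i2 Tutorials, caf\u00e9 2024", lowercase=True, deaccent=True))
```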

Example 2:

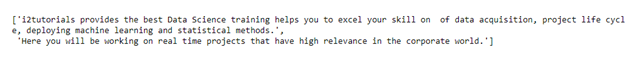

# Split sentences
# Note: gensim.summarization was removed in Gensim 4.0, so this import
# only works with Gensim 3.x (e.g. pip install "gensim<4.0")
from gensim.summarization.textcleaner import split_sentences

text = """i2tutorials provides the best Data Science training helps you to excel your skill on of data acquisition, project life cycle, deploying machine learning and statistical methods. Here you will be working on real time projects that have high relevance in the corporate world."""
split1 = split_sentences(text)
split1

Output:
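Sentence and word tokenization are often chained: split a document into sentences, then tokenize each sentence, giving a list of token lists. That is the input shape gensim models such as `Word2Vec` expect for their `sentences` argument. The sketch below builds it with the standard `re` module only; `tokenize_corpus` is a hypothetical helper, and its letters-only pattern drops digits and punctuation.

```python
import re

# Sentence boundary: whitespace that follows '.', '!' or '?'.
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")
# Word token: a run of letters (no digits, no underscores).
WORD = re.compile(r"[^\W\d_]+")


def tokenize_corpus(text):
    """Split text into sentences, then each sentence into lowercase word tokens."""
    return [WORD.findall(sentence.lower())
            for sentence in SENTENCE_BOUNDARY.split(text.strip())
            if sentence]


print(tokenize_corpus("Tokenization is vital. It comes first!"))
```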

Tokenization is a vital step in the data cleaning and preprocessing process. In this article, we implemented different methods of tokenizing a given text with NLTK, regular expressions, Python's split() method, spaCy, and Gensim.