History of Neural Networks

Unsurprisingly, the idea behind neural networks is modeled on how neurons in the brain function.

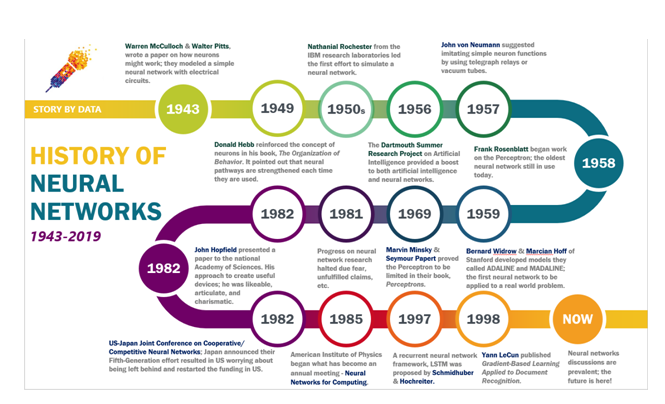

1943 - Warren McCulloch, a neurophysiologist, and Walter Pitts, a young mathematician, wrote a paper on how neurons might work. They modeled a simple neural network with electrical circuits.

1949 - Donald Hebb took the idea further in his book, The Organization of Behavior, proposing that neural pathways are strengthened each time they are used.

1950s - Nathaniel Rochester of the IBM research laboratories led the first effort to simulate a neural network.

1956 - The Dartmouth Summer Research Project on Artificial Intelligence gave a boost to both artificial intelligence and neural networks, encouraging research in AI and in the much lower-level neural processing of the brain.

1957 - John von Neumann suggested imitating simple neuron functions using telegraph relays or vacuum tubes.

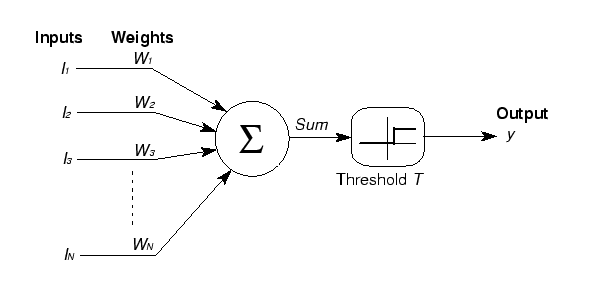

1958 - Frank Rosenblatt, a neurobiologist at Cornell, began work on the Perceptron. He introduced the Perceptron in 1958, and its hardware implementation became known as the Mark I Perceptron. It was a system with a simple input-output relationship, modeled on the McCulloch-Pitts neuron, which McCulloch and Pitts proposed to explain the complex decision processes of the brain using a linear threshold gate. A McCulloch-Pitts neuron takes in inputs, computes their weighted sum, and returns ‘0’ if the result is less than the threshold and ‘1’ otherwise.
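The weighted-sum-and-threshold rule of the McCulloch-Pitts neuron fits in a few lines of Python. This is a simplified sketch: the function name `mcculloch_pitts` and the AND example are illustrative assumptions, and the inhibitory inputs of the original 1943 model are omitted.

```python
from typing import Sequence


def mcculloch_pitts(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Hypothetical helper: a simplified McCulloch-Pitts threshold unit."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Take the weighted sum of the inputs.
    total = sum(x * w for x, w in zip(inputs, weights))
    # Return 0 if the sum is below the threshold and 1 otherwise.
    return 0 if total < threshold else 1


# Example: with unit weights and threshold 2, a two-input unit behaves like logical AND.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, mcculloch_pitts([a, b], [1, 1], threshold=2))
```

Lowering the threshold to 1 with the same weights turns the unit into logical OR, which is how a single fixed threshold gate can represent different simple decisions.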

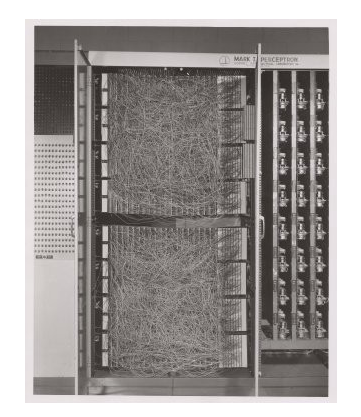

The innovation of the Mark I Perceptron lay in the fact that its weights would be ‘learnt’ by passing in inputs sequentially and minimizing the difference between the desired and actual output.
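The learning idea described above can be sketched in software with the classic perceptron learning rule as it is usually stated in textbooks: present inputs one at a time, compute the error as desired minus actual output, and nudge the weights by that error. This is an illustrative assumption, not a reconstruction of the Mark I hardware (which held its weights in potentiometers). The names `predict` and `train_perceptron`, the zero initialization, and the learning rate `lr` are hypothetical choices.

```python
from typing import List, Sequence, Tuple


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Threshold unit: output 1 if the weighted sum plus bias is >= 0, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total >= 0 else 0


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    epochs: int = 20,
    lr: float = 1.0,
) -> Tuple[List[float], float]:
    """Hypothetical sketch of the perceptron learning rule; returns (weights, bias)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0  # plays the role of a negated threshold
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            # Inputs are passed in one at a time; error = desired minus actual output.
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # Shift the weights in the direction that reduces this error.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample is classified correctly, so stop early
            break
    return weights, bias


# Usage: learn logical OR from its truth table.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 1, 1]
w, b = train_perceptron(X, y)
print(w, b, [predict(w, b, x) for x in X])  # prints [1.0, 1.0] -1.0 [0, 1, 1, 1]
```

Folding the threshold into a bias term lets the same update rule adjust both the weights and the threshold.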

The Mark I Perceptron was the first known implementation of the perceptron. The machine was connected to a camera that used 20×20 cadmium sulfide photocells to produce a 400-pixel image. Its main feature is a patchboard that allowed experimentation with different combinations of input features. To the right of that are arrays of potentiometers that implemented the adaptive weights.

1959 - Bernard Widrow and Marcian Hoff of Stanford developed models called ADALINE and MADALINE; the names come from their use of Multiple ADAptive LINear Elements. MADALINE was applied to a real-world problem as an adaptive filter that eliminates echoes on phone lines, and this system is still in commercial use.

1969 - In their book, Perceptrons, Marvin Minsky and Seymour Papert proved that the single-layer perceptron is limited in what it can compute; for example, it cannot learn the XOR function.
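A standard illustration of this kind of limitation is XOR, the two-input case of the parity problem Minsky and Papert analyzed. Using the McCulloch-Pitts convention (output 1 exactly when the weighted sum reaches the threshold $\theta$), a short argument shows that no single threshold unit with two binary inputs can compute it:

```latex
\begin{aligned}
&\text{Unit: } y = 1 \iff w_1 x_1 + w_2 x_2 \ge \theta, \qquad \text{target: } y = x_1 \oplus x_2 \\
&(0,0) \mapsto 0:\quad 0 < \theta \\
&(1,0) \mapsto 1:\quad w_1 \ge \theta \\
&(0,1) \mapsto 1:\quad w_2 \ge \theta \\
&(1,1) \mapsto 0:\quad w_1 + w_2 < \theta \\
&\text{But } w_1 + w_2 \ge 2\theta > \theta \ (\text{since } \theta > 0), \text{ a contradiction.}
\end{aligned}
```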

1970s - Fear and unfulfilled claims stalled progress in neural network research. Respected voices critiqued the field, which halted much of its funding. This period of stunted growth lasted through 1981.

1982 - John Hopfield presented a paper to the National Academy of Sciences. His approach focused on creating useful devices, and he was a likeable, articulate, and charismatic champion of the field. The same year, at the US-Japan Joint Conference on Cooperative/Competitive Neural Networks, Japan announced its Fifth-Generation effort, leaving the US worried about being left behind. Soon funding was flowing once again.

1985 - The American Institute of Physics began an annual meeting, Neural Networks for Computing.

1987 - The Institute of Electrical and Electronics Engineers' (IEEE) first International Conference on Neural Networks drew more than 1,800 attendees.

1997 - Sepp Hochreiter and Jürgen Schmidhuber proposed Long Short-Term Memory (LSTM), a recurrent neural network architecture.

1998 - Yann LeCun and colleagues published "Gradient-Based Learning Applied to Document Recognition".

Many more steps have been taken to get us to where we are now. Today, neural networks dominate the discussion; the future is here! At present, most neural network development is simply proving that the principle works.