How do you implement Image Translation in Computer Vision?

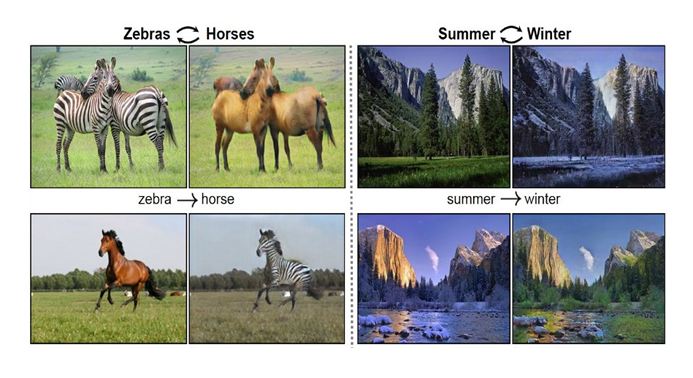

Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image. It applies to a wide range of tasks, such as collection style transfer, object transfiguration, season transfer and photo enhancement. More precisely, the aim is to learn a mapping G : X → Y, trained with an adversarial loss, such that the distribution of translated images G(X) is indistinguishable from the distribution of images in Y. Because this mapping is highly under-constrained, it is coupled with an inverse mapping F : Y → X, and a cycle consistency loss is introduced to enforce F(G(X)) ≈ X (and vice versa, G(F(Y)) ≈ Y).
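To make the cycle consistency condition F(G(X)) ≈ X concrete, here is a minimal NumPy sketch. It assumes the loss is measured as a mean absolute (L1) difference, and it uses toy, hand-written functions in place of trained generators; the names `cycle_consistency_loss`, `G`, `F` and `F_bad` are illustrative only.

```python
import numpy as np

def cycle_consistency_loss(G, F, x_batch, y_batch):
    """Mean absolute error between images and their round-trip reconstructions."""
    forward = np.mean(np.abs(F(G(x_batch)) - x_batch))   # x -> G(x) -> F(G(x)), should return to x
    backward = np.mean(np.abs(G(F(y_batch)) - y_batch))  # y -> F(y) -> G(F(y)), should return to y
    return float(forward + backward)

# Toy stand-ins for the learned generators (assumption: real ones are neural networks).
def G(x):
    return x[:, :, ::-1, :]   # mirror each image horizontally

def F(y):
    return y[:, :, ::-1, :]   # mirroring again undoes G exactly

def F_bad(y):
    return y                  # identity: does not undo G

rng = np.random.default_rng(0)
x = rng.random((4, 8, 8, 3))  # batch of 4 random "images" from domain X
y = rng.random((4, 8, 8, 3))  # batch of 4 random "images" from domain Y

loss_consistent = cycle_consistency_loss(G, F, x, y)        # exact inverses
loss_inconsistent = cycle_consistency_loss(G, F_bad, x, y)  # F_bad fails to invert G
print(loss_consistent, loss_inconsistent)
```

When `F` exactly inverts `G`, the round trip reproduces the input and the loss is zero; when it does not, the loss is positive, which is the signal that pushes the two generators toward being inverses of each other during training.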

Paired training data consists of examples with a one-to-one correspondence between input and output images; an unpaired training set has no such correspondence. For the unpaired case, the CycleGAN model contains two mapping functions, G : X → Y and F : Y → X, and associated adversarial discriminators DY and DX. DY encourages G to translate X into outputs indistinguishable from domain Y, while DX encourages F to translate Y into outputs indistinguishable from domain X. To further regularize the mappings, the model introduces two “cycle consistency losses” that capture the intuition that if we translate from one domain to the other and back again, we should arrive where we started.
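The pieces described above can be written as a single objective. The following is a sketch in the notation commonly used for CycleGAN, with the discriminators written as D_X and D_Y; the weight λ on the cycle term is a hyperparameter that the text above does not specify.

```latex
\begin{aligned}
\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) &= \mathbb{E}_{y}\big[\log D_Y(y)\big] + \mathbb{E}_{x}\big[\log\big(1 - D_Y(G(x))\big)\big] \\
\mathcal{L}_{\text{cyc}}(G, F) &= \mathbb{E}_{x}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda \, \mathcal{L}_{\text{cyc}}(G, F)
\end{aligned}
```

The generators G and F are trained to minimize this objective while the discriminators D_X and D_Y are trained to maximize it.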

Existing image-to-image translation approaches have limited scalability and robustness when handling more than two domains, since a separate model must be built independently for every pair of image domains. StarGAN is a scalable approach that performs image-to-image translation across multiple domains using only a single model.
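A single generator can serve several domains only if it is told which domain to translate into. In StarGAN this is done by giving the generator a target domain label together with the image; one simple way to combine them, sketched below with NumPy, is to replicate a one-hot label across the spatial dimensions and append it as extra channels. The function name `condition_on_domain`, the channels-last layout, and the array sizes are assumptions made for illustration.

```python
import numpy as np

def condition_on_domain(images, target_domain, num_domains):
    """Append a spatially replicated one-hot domain label to each image as extra channels.

    images:        array of shape (batch, height, width, channels)
    target_domain: integer array of shape (batch,), one domain index per image
    """
    batch, height, width, _ = images.shape
    one_hot = np.eye(num_domains, dtype=images.dtype)[target_domain]  # (batch, num_domains)
    label_maps = np.broadcast_to(
        one_hot[:, None, None, :], (batch, height, width, num_domains)
    )
    return np.concatenate([images, label_maps], axis=-1)

images = np.zeros((2, 4, 4, 3), dtype=np.float32)  # two blank RGB images
target = np.array([0, 2])                          # translate image 0 to domain 0, image 1 to domain 2
generator_input = condition_on_domain(images, target, num_domains=3)
print(generator_input.shape)  # (2, 4, 4, 6)
```

Because the target domain is part of the input, the same generator weights can be reused for every pair of domains, instead of training one model per pair.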