Introduction to Neural Networks

According to Wikipedia, a neural network “is a network or circuit of neurons, composed of artificial neurons or nodes.”

A neural network made of artificial neurons is called an Artificial Neural Network (ANN), also known as a Simulated Neural Network. Artificial neural networks are used to solve artificial intelligence problems. They are decision-making tools that can model complex relationships between inputs and outputs.

Types of Neural Networks:

Feed Forward Neural Network

Radial Basis Function Neural Network

Kohonen Self Organizing Neural Network

Recurrent Neural Network

Convolutional Neural Network

Modular Neural Network

Perceptron:

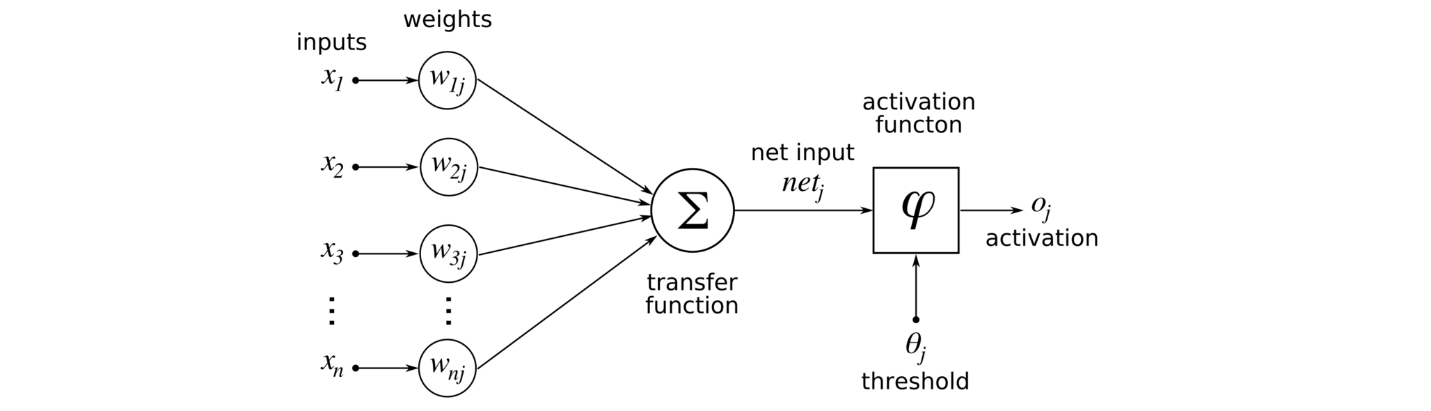

A perceptron is an artificial neuron that takes several binary inputs and produces a single binary output. The output is determined by comparing the weighted sum of the inputs with a threshold, which is a real-number parameter of the neuron.

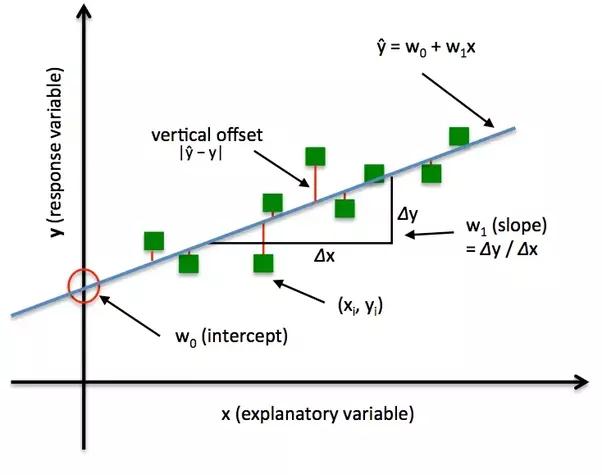

Here w is the weight and x is the input.

A perceptron can weigh up different kinds of evidence in order to make a decision. A network with many layers of perceptrons can engage in sophisticated decision making.

Perceptron Bias = -(Threshold)

For a perceptron with a large positive bias, it is easy to get an output of 1; if the bias is very negative, it is difficult to get an output of 1. Perceptrons can also be used to compute elementary logic functions such as AND, OR, NAND and NOR.
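As a minimal sketch of the logic-function claim, the perceptron rule can be written as "output 1 when the weighted sum plus the bias is positive". The specific weights and biases below are illustrative choices, not values taken from this article; many other choices give the same gates.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum + bias > 0 else 0


# Hypothetical (weights, bias) pairs for two-input logic gates.
GATES = {
    "AND": ((1, 1), -1.5),
    "OR": ((1, 1), -0.5),
    "NAND": ((-2, -2), 3),
    "NOR": ((-1, -1), 0.5),
}

for name, (weights, bias) in GATES.items():
    # Inputs are visited in the order (0,0), (0,1), (1,0), (1,1).
    table = [perceptron((x1, x2), weights, bias) for x1 in (0, 1) for x2 in (0, 1)]
    print(name, table)
# AND [0, 0, 0, 1]
# OR [0, 1, 1, 1]
# NAND [1, 1, 1, 0]
# NOR [1, 0, 0, 0]
```

Note how the bias controls how easy it is to fire: the OR gate's bias of -0.5 lets a single active input produce 1, while the AND gate's more negative bias of -1.5 requires both.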

Sigmoid Neurons:

Sigmoid neurons are similar to perceptrons, but modified so that a small change in the weights and bias causes only a small change in the output. The inputs of a sigmoid neuron can take any real value between 0 and 1, not just 0 or 1. The output is likewise not restricted to 0 or 1: it is σ(Z), where σ is the sigmoid function and Z = W*X + b.

When Z = W*X + b is large and positive, the output of the sigmoid neuron is approximately 1; when Z is very negative, the output is approximately 0.

Sigmoid neurons have much the same qualitative behaviour as perceptrons, but they make it much easier to figure out how a change in the weights and bias changes the output.
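The behaviour described above can be sketched in a few lines, assuming the standard logistic form of the sigmoid function, 1 / (1 + e^(-Z)). The input values, weights and bias are made up for illustration.

```python
import math


def sigmoid(z):
    """Standard logistic function; fine for moderate z, may overflow for very negative z."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_neuron(inputs, weights, bias):
    """Compute Z = W*X + b and pass it through the sigmoid function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


# Hypothetical example values.
inputs = (0.5, 0.8)
weights = [0.4, -0.6]
bias = 0.1

before = sigmoid_neuron(inputs, weights, bias)
# Nudge one weight slightly: the output moves only slightly as well.
after = sigmoid_neuron(inputs, [weights[0] + 0.01, weights[1]], bias)
print(before, after)

# Large positive Z gives an output near 1, very negative Z an output near 0.
print(sigmoid(10), sigmoid(-10))
```

With a perceptron, the same small nudge could flip the output from 0 to 1 outright; here the change is smooth, which is what makes gradual learning practical.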

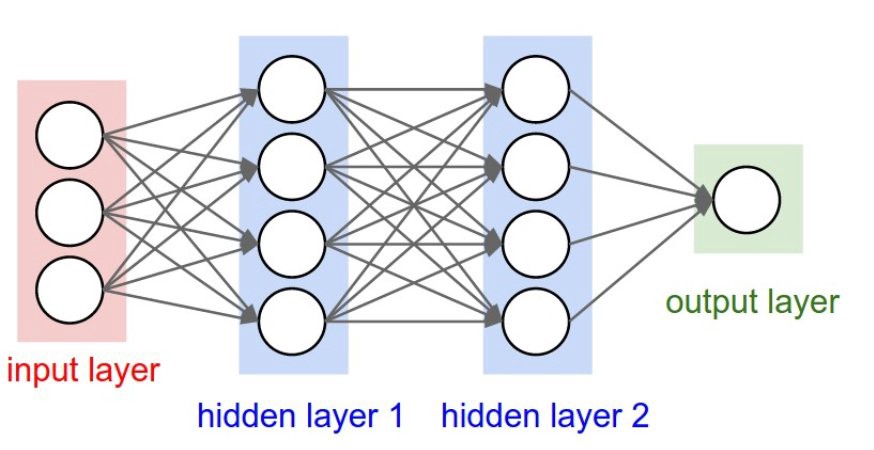

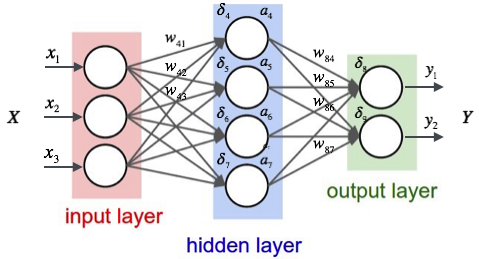

A network with multiple layers is also called a Multilayer Perceptron (MLP).

Feed Forward Neural Network:

A neural network in which the output of one layer is given as input to the next layer is called a Feed Forward Neural Network. In this network, the input travels in one direction only. It may or may not have hidden layers. Signals propagate forward only; nothing is fed back to earlier layers, so there are no loops.
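The one-directional flow can be sketched as a loop that hands each layer's output to the next layer. This is a minimal illustration that assumes sigmoid neurons; the 2-3-1 layer sizes and all weight values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer; each row of `weights` holds the weights of one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Pass the input through every layer in order; nothing flows backwards."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs, one hidden layer of 3 neurons, 1 output neuron.
layers = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, 0.1, -0.1]),
    ([[0.6, -0.3, 0.8]], [0.05]),
]

output = feed_forward([1.0, 0.5], layers)
print(output)
```

Dropping the first entry of `layers` and giving the output neuron two weights would produce a network with no hidden layer, which is still feed forward.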

Feed forward neural networks are applied in speech recognition and computer vision, where classifying the target classes is complicated. These networks are responsive to noisy data and easy to maintain.

Radial Basis Function Neural Network:

A Radial Basis Function Neural Network considers the distance of a point from a center. It has two layers:

1. Features are combined with the Radial Basis Function in the inner layer.

2. The outputs of these features are then taken into account when computing the final output in the next layer.

This kind of neural network has been applied in power restoration systems, as power systems have grown in size and complexity.
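The distance-based idea can be sketched as follows. The article does not say which basis function or output rule is used, so this example assumes a common formulation: Gaussian basis functions in the inner layer and a weighted sum in the output layer. The centers, width and weights are hypothetical.

```python
import math


def gaussian_rbf(point, center, width):
    """Activation is 1 at the center and decays with squared Euclidean distance."""
    squared_distance = sum((p - c) ** 2 for p, c in zip(point, center))
    return math.exp(-squared_distance / (2 * width ** 2))


def rbf_network(point, centers, width, output_weights, output_bias):
    # Inner layer: one radial basis unit per center.
    hidden = [gaussian_rbf(point, center, width) for center in centers]
    # Output layer: weighted sum of the radial basis activations (an assumption).
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Hypothetical setup: two centers with opposite output weights.
centers = [(0.0, 0.0), (1.0, 1.0)]
output_weights = [1.0, -1.0]

print(rbf_network((0.0, 0.0), centers, 1.0, output_weights, 0.0))  # positive: near first center
print(rbf_network((1.0, 1.0), centers, 1.0, output_weights, 0.0))  # negative: near second center
```

In this setup the sign of the output indicates which center the point is closer to, so the two-unit network acts as a simple distance-based classifier.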

Kohonen Self Organizing Neural Network:

The objective of a Kohonen map is to map input vectors of arbitrary dimension onto a discrete map comprised of neurons. The map needs to be trained to create its own organization of the training data. It has either one or two dimensions. While the map is being trained, the location of each neuron remains constant but its weights change depending on the input values.

This self-organization process has different phases. In the first phase, every neuron is initialized with small weights and an input vector is presented. In the second phase, the neuron closest to the point is the ‘winning neuron’, and the neurons connected to the winning neuron also move towards the point, like in the graphic below. The distance between the point and each neuron is calculated as the Euclidean distance, and the neuron with the least distance wins. Over the iterations, all the points are clustered and each neuron comes to represent one kind of cluster. This is the gist of how a Kohonen Neural Network organizes itself.
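The two phases can be sketched for a one-dimensional map. This is a simplified illustration, not a reference implementation: the linearly decaying learning rate, the fixed neighbourhood radius of 1, the 0.5 influence for neighbours, and the toy data are all assumptions made for the example.

```python
import math
import random


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_winner(neurons, point):
    """Index of the neuron whose weights are closest to the point."""
    return min(range(len(neurons)), key=lambda i: euclidean(neurons[i], point))


def move_towards(weights, point, step):
    """Move a weight vector a fraction `step` of the way towards the point."""
    return [w + step * (p - w) for w, p in zip(weights, point)]


def train_som(data, n_neurons, epochs=50, learning_rate=0.5, radius=1, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    # Phase 1: initialize every neuron with small random weights.
    neurons = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(n_neurons)]
    for epoch in range(epochs):
        rate = learning_rate * (1 - epoch / epochs)  # assumed linear decay
        for point in data:
            # Phase 2: the closest neuron wins, and it and its map neighbours move.
            winner = find_winner(neurons, point)
            low, high = max(0, winner - radius), min(n_neurons, winner + radius + 1)
            for i in range(low, high):
                influence = 1.0 if i == winner else 0.5  # neighbours move less
                neurons[i] = move_towards(neurons[i], point, rate * influence)
    return neurons


# Hypothetical toy data: two groups of 2-D points.
data = [(0.0, 0.1), (0.1, 0.0), (1.0, 0.9), (0.9, 1.0)]
neurons = train_som(data, n_neurons=4)
print([find_winner(neurons, point) for point in data])
```

The neuron's position on the map is just its list index, which never changes; only the weight vectors move, matching the description above.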

Kohonen Neural Networks are used to recognize patterns in data. Their applications include medical analysis, where they cluster data into different categories.

Recurrent Neural Network:

A Recurrent Neural Network feeds the output of a layer back to its input in order to help predict the outcome of the layer. In this kind of network, feedback loops are possible. Recurrent neural networks have been less influential than feed forward neural networks.

Each neuron acts like a memory cell while performing computations. If the prediction is wrong, the learning rate and error correction are used to make small changes to the weights, so that the network gradually works towards the right prediction during backpropagation.
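The memory-cell idea can be illustrated with a single recurrent unit whose new state depends on both the current input and its own previous state. This sketch shows only the forward feedback loop, not training; the tanh activation and the weight values are assumptions chosen for the example.

```python
import math


def rnn_step(x, hidden, w_input, w_hidden, bias):
    """One time step: combine the current input with the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)


def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Feed a sequence through the unit, carrying the state from step to step."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states


# A single pulse followed by zeros: the state stays non-zero after the pulse,
# because the earlier output is fed back in at every step.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

A feed forward neuron given the same sequence would output the same value for both zero inputs; the recurrent unit does not, because it remembers the earlier pulse.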

Recurrent neural networks are applied in text-to-speech conversion models.

Convolutional Neural Network:

Convolutional neural networks are similar to feed forward neural networks, in that the neurons have learnable weights and biases. Computer vision is dominated by convolutional neural networks because of their accuracy in image classification.

In this neural network, the input features are taken in batches, like a filter. This helps the network remember parts of the image while computing its operations. These computations include converting the image from the RGB or HSI scale to gray scale.
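Both operations mentioned above can be sketched in plain Python. The gray-scale conversion assumes the widely used ITU-R BT.601 luma weights, and the filter is a hand-picked vertical-edge kernel rather than a learned one; in a real convolutional network the kernel values are the learnable weights.

```python
def rgb_to_gray(pixel):
    """Convert one (R, G, B) pixel to gray scale using BT.601 luma weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def convolve2d(image, kernel):
    """Slide the kernel over the image patch by patch, with no padding.

    Like most CNN layers, this does not flip the kernel, so strictly
    speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical gray-scale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]  # responds to a dark-to-bright vertical edge

print(convolve2d(image, kernel))  # [[0, 2, 0], [0, 2, 0]]
```

The filter responds only where its patch straddles the edge, which is how a convolutional layer picks out local parts of an image.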

Its applications are in signal and image processing, where it has taken over from OpenCV in the field of computer vision.

Modular Neural Network:

A Modular Neural Network is a collection of different independent neural networks that each contribute to a single output. Each network has its own unique set of inputs. The networks do not interact with each other while accomplishing their tasks. The advantage is that complexity is reduced by splitting a large computational process into small components. Processing time depends on the number of neurons and their involvement in computing the results.
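The structure can be sketched with two tiny stand-in modules, each a single sigmoid neuron with its own inputs, whose outputs are merged by a simple average. Real modules would be full networks, and the averaging step is only one possible way to combine them; all names and weights here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_module(weights, bias):
    """Build an independent module: here just one sigmoid neuron."""
    def module(inputs):
        return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
    return module


# Two independent modules with separate parameters and separate inputs.
module_a = make_module([0.5, -0.2], 0.0)
module_b = make_module([1.0, 1.0, -1.0], 0.1)


def modular_network(inputs_a, inputs_b):
    # The modules never see each other's inputs or outputs.
    out_a = module_a(inputs_a)
    out_b = module_b(inputs_b)
    # Assumed combiner: average the module outputs into a single output.
    return (out_a + out_b) / 2


print(modular_network([1.0, 2.0], [0.3, 0.1, 0.4]))
```

Because the modules share nothing, each one could be trained or evaluated separately, which is where the reduction in complexity comes from.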

Applications:

Artificial neural networks have been applied successfully to speech recognition, image analysis, adaptive control, text-to-speech models, medical analysis, power restoration systems and computer vision.

Conclusion:

Artificial neural networks can learn non-linear and complex relationships, which is important because in real life many relationships between inputs and outputs are non-linear and complex.

An ANN can also infer unseen relationships from unseen data, so the model can generalize and make predictions on data it has not seen.