Machine Learning Room That Can Learn Human Behaviour And Change Shape In Response

Introduction:

Combining machine learning and dynamic engineering, a team of computational design academics in Australia has developed an interactive origami-style meeting room that can learn human behaviour and change shape in response to people's reactions.

The interactive ‘Centaur Pod’ is set to adapt to external environmental and human stimuli by moving and changing its shape, said Hank Haeusler, Associate Professor at the University of New South Wales in Australia.

Working:

“At the moment, a human can be in the same space as a robot and can interact with the robot in that space, but what we want to do is make the space itself become the robot,” Haeusler said.

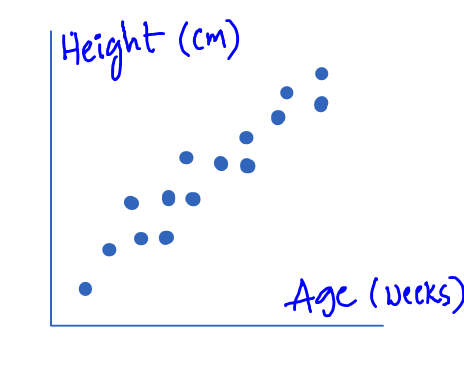

“In this way, when a person in a building moves, acts or works in any way, the ‘robot’ will sense this behaviour and start learning from this behaviour and other people’s behaviour, and will create knowledge from the behaviour, and the knowledge will translate into the space to transform,” he noted.

The roughly six-to-nine square metre structure explores three main areas: machine learning and artificial intelligence; digital fabrication and robot fabrication; and augmented reality and virtual reality.

This will dramatically change the way architects design, develop and produce in the future, the researchers said.

“We try to push the boundaries of conventional architecture and design as much as possible, and explore what machine learning, biomimicry or innovative robotics have to offer for spatial design, to use this knowledge as a seed to develop architectural design projects,” Haeusler said.

“We’re looking at how digital fabrication and robot fabrication could shape the way we build buildings or structures,” he noted.

The structure, being developed in collaboration with Arup (the engineering firm behind the Sydney Opera House), will be constructed next year.

Conclusion:

In a recent research project, Google began to study the natural interaction patterns of people by letting artificial intelligence (AI) learn the physical movements of human interactions from its YouTube content.

According to Google, the atomic visual actions (AVA) data learning model enables the AI system to further learn and understand the behaviours displayed in natural human interactions, such as walking, hugging or shaking hands, and to produce natural movements.