MongoDB backup and recovery strategies

A database backup is just as important as the database itself, especially after a failure that destroys or corrupts the database. A backup is a copy of the database’s contents at a point in time, which can be used to restore the database.

With a proper backup strategy, the data remains available for restoring business operations after a disaster. Proactively safeguarding data can also be a regulatory and compliance requirement.

A regular backup can be used to set up development and staging environments without impacting the production setup, thus enabling faster development and smoother launches.

There are a number of factors to consider when planning backups:

- Flexibility: Can you back up only the data that has changed, or select only the data that is useful?

- Recovery Point Objective (RPO): The maximum amount of data, usually expressed as a window of time, that you are willing to lose. It determines how much data you must be able to recover.

- Recovery Time Objective (RTO): How quickly the recovery process must complete. Recovery takes longer when there is a lot of data.

- Medium: What medium will the backup be written to?

- Frequency: How often will backups be taken, and how will you avoid long downtimes for connected applications?

- Automation: Will backups be run by a scheduled tool or performed manually?

- Costs associated with backup

- The size of the backup files. Large backups add storage cost, so you should be able to compress the backup files if they are too large.

- Sharding: When dealing with MongoDB shards, the backup strategy is more complex.

- Isolation: How are the backup files affected by their physical and logical location relative to the database? For example, if the backups are stored in close proximity to the database, they are likely to be destroyed at the same time as the database.
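The RPO and RTO considerations above can be turned into rough numbers before choosing a strategy. The following is a back-of-the-envelope sketch only: the function names are hypothetical, and the figures in the example calls (a 6-hour RPO, 200 GB of data, 50 MB/s restore throughput) are made-up values, not measurements.

```shell
#!/usr/bin/env bash
# Rough planning arithmetic. All numbers passed in are assumptions that
# you would replace with values from your own deployment.

# Minimum number of evenly spaced backups per day so that the gap between
# two backups never exceeds the RPO (given in whole hours).
backups_per_day() {
  local rpo_hours="$1"
  echo $(( (24 + rpo_hours - 1) / rpo_hours ))
}

# Rough restore time in minutes for a data size (GB) at a sustained
# restore throughput (MB/s), rounded up. Compare the result with the RTO.
restore_minutes() {
  local size_gb="$1" mb_per_s="$2"
  local seconds=$(( size_gb * 1024 / mb_per_s ))
  echo $(( (seconds + 59) / 60 ))
}

# Example calls:
#   backups_per_day 6        # 6-hour RPO
#   restore_minutes 200 50   # 200 GB restored at 50 MB/s
```

If the estimated restore time is longer than the RTO, that is a sign that either the amount of data per backup or the restore path needs to change.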

MongoDB Backup Strategies

The right MongoDB backup strategy depends on server, data, and application constraints. The main strategies are:

- Mongodump

- Copying the Underlying Files

- MongoDB Management Service (MMS)

A MySQL dump typically consists of .sql files, whereas a mongodump backup is a complete folder structure, with one folder per database holding BSON data files and JSON metadata files for each collection, so that the exact database structure is preserved.

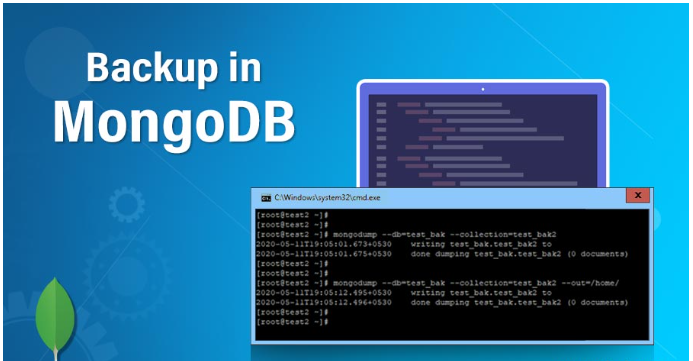

Mongodump

MongoDB provides its own tool for backing up data. Using mongodump, data can be exported from MongoDB into backup files that can later be restored using mongorestore.

mongodump offers a lot of flexibility in backup selection. It can back up an entire database, selected collections, or the results of a query. Because the dump runs against a live database, writes made while it is running can leave the dump inconsistent. With the --oplog option (available for full-instance dumps of a replica set member), mongodump also dumps the oplog entries recorded during the operation, which makes a consistent point-in-time snapshot possible.

The mongorestore utility can also restore the data into a running database. Together, these utilities make it easier to back up a database with reasonable assurance that the backed-up data is intact. Because a backup can be limited to a single database, collection, or query result, a small selection keeps both backup and recovery relatively quick, which helps meet the RTO.
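The selection options described above map onto mongodump flags. The following is a minimal sketch, assuming a database named xyz as in the rest of this article; the orders collection, the example query, and the wrapper function names are hypothetical and exist only for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: "xyz", "orders", the query and the output path are
# placeholder values, not names taken from a real deployment.

# Dump every collection in one database.
dump_database() {
  local db="$1" out_dir="$2"
  mongodump --db "$db" --out "$out_dir"
}

# Dump a single collection from a database.
dump_collection() {
  local db="$1" collection="$2" out_dir="$3"
  mongodump --db "$db" --collection "$collection" --out "$out_dir"
}

# Dump only the documents matching a query.
# --query requires --collection and takes an Extended JSON filter.
dump_query() {
  local db="$1" collection="$2" query="$3" out_dir="$4"
  mongodump --db "$db" --collection "$collection" --query "$query" --out "$out_dir"
}

# Dump the whole instance plus the oplog entries written during the dump.
# --oplog only works for full-instance dumps against a replica set member.
dump_instance_with_oplog() {
  local out_dir="$1"
  mongodump --oplog --out "$out_dir"
}

# Example calls:
#   dump_database xyz /var/backups/mongo
#   dump_collection xyz orders /var/backups/mongo
#   dump_query xyz orders '{"status": "shipped"}' /var/backups/mongo
#   dump_instance_with_oplog /var/backups/mongo
```

A dump taken with --oplog is normally restored with mongorestore --oplogReplay so that the captured oplog entries are applied on top of the restored data.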

This strategy is only suitable for small to medium-sized deployments, not large ones. For large data systems, performing a complete dump at every snapshot point becomes resource-intensive. As more resources are diverted to the dump, database performance can suffer, especially when the backup is performed in the same datacenter as the database. If you are forced to add more resources, you will incur additional operational costs. For large deployments, strategies such as filesystem snapshots or MongoDB Management Service are more cost-effective.

For large database systems with shards, deployment of this strategy is more complex than for small configurations.

Running the following command from the system command line (not from inside the mongo shell) will perform a mongodump backup of the database xyz:

    mongodump --db xyz --out /var/backups/mongo

mongodump provides a number of flags, including --archive, which writes the dump to a single archive file instead of a folder, and --gzip, which compresses the output in order to reduce the size of the backup, e.g.

    mongodump --db xyz --archive=./backup/xyz.gz --gzip

The mongorestore command can be used to restore this database from the archive:

    mongorestore --gzip --archive=./backup/xyz.gz --db xyz

With a scheduled job, the backup frequency can be adjusted so that the interval between backups matches the data-loss window allowed by the Recovery Point Objective.
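One way to schedule such backups is a small script run from cron. The sketch below is illustrative rather than a recommended production setup: the function names, the /var/backups/mongo directory, the 7-day retention, and the script location in the crontab comment are all assumptions.

```shell
#!/usr/bin/env bash
# Sketch of a backup script meant to be run from cron.
# Database name, backup directory and retention period are assumptions.

# Build a timestamped archive path, e.g. /var/backups/mongo/xyz-20240131T0200.gz
archive_path() {
  local backup_dir="$1" db="$2" stamp="$3"
  echo "${backup_dir}/${db}-${stamp}.gz"
}

# Take one compressed archive backup, then delete archives older than
# keep_days. Old archives are only pruned if the new dump succeeded.
run_backup() {
  local backup_dir="$1" db="$2" keep_days="$3"
  local stamp archive
  stamp="$(date +%Y%m%dT%H%M)"
  archive="$(archive_path "$backup_dir" "$db" "$stamp")"
  mkdir -p "$backup_dir"
  mongodump --db "$db" --archive="$archive" --gzip || return 1
  find "$backup_dir" -name "${db}-*.gz" -mtime +"$keep_days" -delete
}

# Example crontab entry for a 6-hour RPO (00:00, 06:00, 12:00, 18:00),
# assuming this file is saved as /usr/local/bin/mongo-backup.sh and ends
# with a call such as: run_backup /var/backups/mongo xyz 7
#   0 */6 * * * /usr/local/bin/mongo-backup.sh
```

Timestamped file names keep each run from overwriting the previous archive, so the retention period determines how far back a restore can go.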

Copying the Underlying Files

This approach takes snapshots of the underlying data files at a specific point in time, so that the database can be cleanly restored to the state captured in the snapshot files.

In order to ensure that the snapshots are logically consistent, journaling must be enabled. It is possible to create a consistent snapshot of a database by taking a snapshot of the entire file system or by stopping all write operations to the database and copying the files using standard file system copy tools.
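The second option, stopping writes and copying the files, can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the mongosh shell (older installations ship mongo instead), the data path /var/lib/mongodb in the example call is a common default but may differ on your system, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: the data directory and backup target are assumptions.

# Flush pending writes to disk and block new writes.
lock_writes() {
  mongosh --quiet --eval 'db.fsyncLock()'
}

# Release the lock taken by lock_writes.
unlock_writes() {
  mongosh --quiet --eval 'db.fsyncUnlock()'
}

# Copy the data files while writes are blocked. The unlock runs whether
# or not the copy succeeded, and the copy's exit status is returned.
copy_data_files() {
  local db_path="$1" target="$2"
  local rc=0
  lock_writes || return 1
  cp -a "$db_path" "$target" || rc=$?
  unlock_writes
  return "$rc"
}

# Example call:
#   copy_data_files /var/lib/mongodb /mnt/backup/mongodb-copy
```

Writes stay blocked for the whole duration of the copy, which is where the downtime for connected applications comes from.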

As this approach takes backups from the storage level, it is more efficient than mongodump. The approach is suitable for large-scale deployments, but at the expense of increased downtime for applications connected to the database.

This approach, however, is not as flexible as mongodump, because you cannot target a specific database, collection, or query result. You therefore have to back up the entire database setup, at the cost of large backup files and increased downtime for applications making requests to the database during long-running backup operations.

A sharded cluster and the coordination of backups across multiple replicas make this approach more complex. In order to ensure consistency across the various setups, you will need highly skilled personnel. Ultimately, this will result in higher operating costs for your database.

MongoDB Management Service (MMS)

MongoDB Management Service (MMS, since renamed MongoDB Cloud Manager) is a fully managed service that provides continuous online backups for MongoDB. It is very efficient for highly critical and frequently changing data. Backup Agents installed within the database environment perform an initial synchronization with MongoDB’s redundant and secure data centers. After that, oplog data is compressed, encrypted, and streamed continuously to MMS.

It is possible to configure a snapshot and retention policy in accordance with your requirements. By default, snapshots are taken every six hours and oplog data is retained for 24 hours. Although this approach is more coarse, it offers the flexibility of selecting which databases or collections to back up.

Taking snapshots of a sharded cluster is also relatively simple: because the oplog is retained for 24 hours, a point-in-time snapshot can be created.

Database performance is not adversely affected, since MMS only reads the oplog, in much the same way as a new node added to a replica set. This means that applications connected to the database will not experience any downtime. Given the relatively low cost of enterprise subscriptions, this approach can generally be considered cost-effective.

A Comparison of MongoDB Backup Strategies

Listed below are some comparisons between the approaches described above, in order to assist you in determining which approach is most appropriate for you.

| Objective | Mongodump | Copying underlying files | MMS |
| --- | --- | --- | --- |
| Flexibility | Flexible: one can back up any database, collection, or query result. | It is not possible to back up a specific database or collection. | One can exclude non-mission-critical databases and collections. |
| Recovery Point Objective (RPO) | Thanks to its flexibility, one can back up only the data needed. | RPO is limited to snapshot moments. The entire database must be backed up. | Due to low overhead, frequent backups are possible. |
| Recovery Time Objective (RTO) | Once the RPO is met, recovery time is directly proportional to data size. | Database size affects RTO. The longer it takes to back up a large database, the longer the application will be down. | Since backups can meet the RPO, recovery time depends on data size. |
| Database performance | Suitable for small deployments, but not for large ones. | Write operations must be stopped during the backup, which causes downtime, and some data can be lost. | Because backups are made from the oplog, database performance is not affected. |
| Isolation | Depends on how far the backups are from production. | Backup snapshots are kept away from production databases. | Backup snapshots are kept away from production databases. |
| Deployment Cost | Large deployments become more expensive. | Relatively cheaper for large deployments. | Because subscriptions are based on data size, it is quite cheap for both large and small deployments. |
| Maintenance Cost | Since no specialized technical skills are required, the process is relatively cheap. | Maintaining a cluster with shards is more expensive due to its complexity. | Sharded clusters are not expensive to maintain since snapshots are consistent. |
| Shard Deployment | When dealing with large sets, it can be complex. | When dealing with large sets, it can be complex. | Creating a backup for a sharded cluster isn’t too complex. |