How to derive Naïve Bayes Theorem?

Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature.

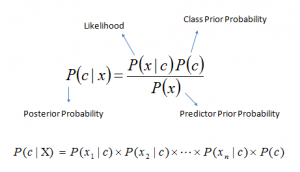

- P(c|x) is the posterior probability of class (c, target) given predictor (x, attributes).

- P(c) is the prior probability of class.

- P(x|c) is the likelihood which is the probability of predictor given class.

- P(x) is the prior probability of predictor.

Deriving Naive Bayes

Using Bayes Rule, We can break P(Y|X)P(Y|X) (called Posterior Probability) down into two parts, (without loss of generality, assuming we want to estimate the posterior for an arbitrary label yy taken from MM)

P(Y=y|X)=P(X|Y=y)×P(Y=y)P(X)P(Y=y|X)=P(X|Y=y)×P(Y=y)P(X)

P(X|Y=y)P(X|Y=y) is called the likelihood, while P(Y=y)P(Y=y) is the prior. P(X)P(X) is a normalizing factor that would be the same for each yy. In our classification rule we compare P(Y=y|X)P(Y=y|X) for every yy, so we can omit the normalizing factor entirely. Instead, we calculate the posterior,

P(Y=y|X)=P(X|Y=y)×P(Y=y)P(Y=y|X)=P(X|Y=y)×P(Y=y)

Now, consider the feature vector XX as a set of random variables (each feature is an RV), say, {x1,x2…xd}{x1,x2…xd}. Now, consider the numerator,

P(X|Y=y)×P(Y=y)=P({x1,x2…xd}|y)×P(y)=P(y,x1,x2..xd)P(X|Y=y)×P(Y=y)=P({x1,x2…xd}|y)×P(y)=P(y,x1,x2..xd)

The Naive Bayes assumption is that every variable xixi is conditionally independent of xjxj for all i≠ji≠j.

P(x1,x2..xd,y)=P(x1|x2..xd,y)×P(x2|x3..xd,y)…P(xd|y)×P(y)P(x1,x2..xd,y)=P(x1|x2..xd,y)×P(x2|x3..xd,y)…P(xd|y)×P(y)

Now, due to the naive bayes assumption,

P(xi|xi+1..xn,y)=P(xi|y)P(xi|xi+1..xn,y)=P(xi|y)

Thus, our joint probability simplifies to,

P(x1,x2..xd,y)=P(Y=y)×∏i=1dP(xi|Y=y)P(x1,x2..xd,y)=P(Y=y)×∏i=1dP(xi|Y=y)

Or, we can write our nice posterior as,

P(Y=y|X)=P(Y=y)×∏i=1dP(xi|Y=y)