Sentiment Analysis Using Python

What Is Sentiment Analysis?

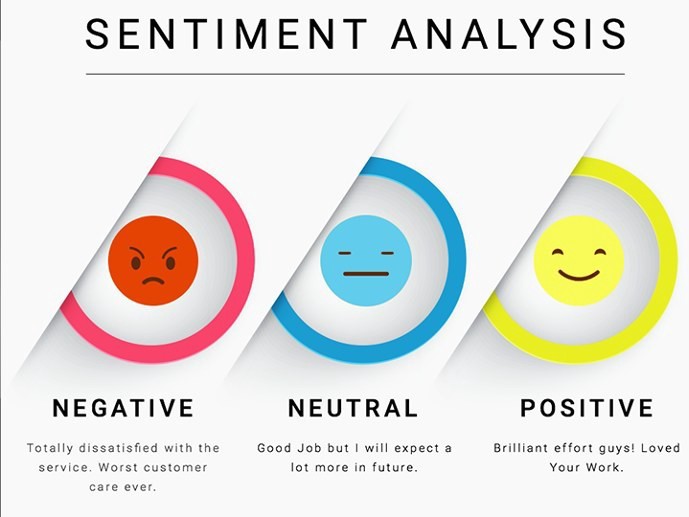

Sentiment analysis is a technique for determining whether a piece of writing is positive, negative, or neutral. It is a form of text mining that uses Natural Language Processing (NLP) to measure people’s opinions. A positive sentiment means the user liked the product, movie, or other subject.

A negative sentiment means the user didn’t like it. A neutral sentiment means the user has no bias either way. In simple words, sentiment analysis examines text to find the opinion it expresses.

Why Sentiment Analysis?

There are lots of real-life situations in which sentiment analysis is used. Some examples are:

- In marketing, to learn how the public reacts to a product, how customers feel about it, and how they want it to be improved.

- In quality assurance, to detect errors in a product based on actual user experience.

- In politics, to determine people’s views on specific situations and what makes them angry or happy. It can also be used to help predict election results.

- In risk prevention, to detect whether people are being attacked or harassed and to spot potentially dangerous situations.

Let us try to understand it with an example.

Consider the data you generate on Facebook: your status updates and your overall activity. We can infer many things from this data, for example by looking at the statuses you post.

If your status was ‘Life isn’t as easy as I expected it to be’, that is a negative sentiment. If your status was ‘so far so good’, that sounds positive.

So, if we take your statuses from the last month and analyze the sentiment of each one, we can estimate your overall mood. If 90% of the statuses are positive, we can say that the person is very happy with their life, and if 90% are negative, the person is not happy with their life.
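The monthly summary described above can be sketched in a few lines. This is only an illustration: the function name `sentiment_shares` and the sample labels are made up, and the labels are assumed to have been produced already by some sentiment classifier.

```python
from collections import Counter

def sentiment_shares(labels):
    """Return the fraction of statuses carrying each sentiment label."""
    counts = Counter(labels)
    total = len(labels)
    if total == 0:
        # No statuses means no shares to report.
        return {"positive": 0.0, "negative": 0.0, "neutral": 0.0}
    return {name: counts[name] / total
            for name in ("positive", "negative", "neutral")}

# Hypothetical month of statuses: nine positive, one negative.
labels = ["positive"] * 9 + ["negative"]
shares = sentiment_shares(labels)
print(shares)
```

With nine positive statuses out of ten, the positive share is 90%, which matches the "very happy" case in the text.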

How Does It Work?

- Tokenization:

Tokenization is the process of breaking a string into small units called tokens. It lets us:

- Break a complex sentence into words.

- Understand the importance of each word with respect to the sentence.

Example:

‘i2 tutorial is the best online educational platform…’

Output:

‘i2’, ‘tutorial’, ‘is’, ‘the’, ‘best’, ‘online’, ‘educational’, ‘platform’, ‘.’, ‘.’, ‘.’
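A minimal sketch of tokenization, using only Python’s standard `re` module rather than a dedicated NLP tokenizer. The function name `tokenize` is chosen for illustration, and the pattern is a simplifying assumption: it treats every run of letters or digits as a word and every punctuation character as its own token.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation marks."""
    # \w+ matches a run of letters/digits; [^\w\s] matches one punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("i2 tutorial is the best online educational platform...")
print(tokens)
```

Library tokenizers handle many more cases (contractions, URLs, emoji), so this is only meant to show the idea.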

- Cleaning the data:

Cleaning the data means removing special characters and stopwords. Stopwords are the most commonly used words in a language, such as ‘is’ and ‘the’. They add little meaning to the text and can be removed.

Output:

‘i2’, ‘tutorial’, ‘best’, ‘online’, ‘educational’, ‘platform’
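The cleaning step can be sketched as follows. The stopword set here is a tiny hypothetical sample, and `clean` is an illustrative name; real projects normally take a much fuller stopword list from an NLP library.

```python
import re

# Tiny illustrative stopword list (an assumption, not a complete list).
STOPWORDS = {"is", "the", "a", "an", "and", "of", "to", "in"}

def clean(tokens):
    """Drop punctuation-only tokens and stopwords, ignoring case."""
    return [t for t in tokens
            if re.fullmatch(r"\w+", t) and t.lower() not in STOPWORDS]

tokens = ["i2", "tutorial", "is", "the", "best", "online",
          "educational", "platform", ".", ".", "."]
cleaned = clean(tokens)
print(cleaned)
```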

- Classification

In this step, we classify each word as positive, negative, or neutral and give it a score: +1 for a positive word, -1 for a negative word, and 0 for a neutral word.

‘i2’, ‘tutorial’, ‘best’, ‘online’, ‘educational’, ‘platform’

0 + 0 + 1 + 0 + 0 + 0 = 1

So the final score is 1, and we can say that the given statement is positive.
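The scoring above can be sketched with a small word list. The `LEXICON` dictionary and the function names are hypothetical and exist only for this illustration; real libraries ship lexicons with thousands of scored words.

```python
# Hypothetical word scores: +1 positive, -1 negative; unknown words count as 0.
LEXICON = {"best": 1, "good": 1, "love": 1, "bad": -1, "boring": -1, "worst": -1}

def score_tokens(tokens):
    """Sum the score of every token, treating unknown words as neutral."""
    return sum(LEXICON.get(t.lower(), 0) for t in tokens)

def label(score):
    """Map a total score to a sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

tokens = ["i2", "tutorial", "best", "online", "educational", "platform"]
total = score_tokens(tokens)
print(total, label(total))
```

Only ‘best’ appears in the sample lexicon, so the total is 1 and the statement is labeled positive.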

In this article, we will look at two libraries for sentiment analysis: TextBlob and vaderSentiment.

TextBlob is an NLP library. To use it, you first need to install it:

pip install textblob

With TextBlob you can tokenize text, label parts of speech, and much more. Older releases also offered language detection and translation.

TextBlob’s sentiment property returns a Sentiment object with two values. The polarity indicates sentiment with a value from -1.0 (negative) to 1.0 (positive), with 0.0 being neutral. The subjectivity is a value from 0.0 (objective) to 1.0 (subjective).

from textblob import TextBlob

sent = TextBlob("I love this movie")

print(sent.sentiment)

Output:

Sentiment(polarity=0.5, subjectivity=0.6)

sent = TextBlob("movie was boring")

print(sent.sentiment)

Output:

Sentiment(polarity=-1.0, subjectivity=1.0)

Now for vaderSentiment. Again, you first have to install it:

pip install vaderSentiment

VADER stands for Valence Aware Dictionary and sEntiment Reasoner.

from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

analyzer = SentimentIntensityAnalyzer()

sent = analyzer.polarity_scores("I love this movie")

print(sent)

Output:

{'neg': 0.0, 'neu': 0.417, 'pos': 0.583, 'compound': 0.6369}

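Here neg, neu, and pos are the negative, neutral, and positive proportions of the text. The compound score is computed by summing the valence scores of each word in the lexicon, adjusting the sum according to the rules, and then normalizing it to lie between -1 (most negative) and +1 (most positive).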
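The normalization step can be sketched on its own. This assumes the formula used in the vaderSentiment reference implementation, x / sqrt(x² + alpha) with alpha = 15. The input 3.2 is assumed to be the lexicon valence of ‘love’, the only scored word in the example sentence.

```python
import math

def normalize(score, alpha=15):
    """Squash an unbounded valence sum into the open range (-1, 1)."""
    return score / math.sqrt(score * score + alpha)

# Assumed valence sum for "I love this movie": only 'love' contributes.
compound = normalize(3.2)
print(round(compound, 4))
```

Under these assumptions the result comes out at about 0.637, in line with the compound score reported for the example.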

positive sentiment: compound score >= 0.05

neutral sentiment: (compound score > -0.05) and (compound score < 0.05)

negative sentiment: compound score <= -0.05
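Turning a compound score into a label is a small function. The name `label_compound` is illustrative. The cutoff is left as a parameter, with the default set to 0.05, the value suggested in the VADER documentation.

```python
def label_compound(compound, threshold=0.05):
    """Map a VADER-style compound score to a sentiment label."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"

# Compound score from the "I love this movie" example.
print(label_compound(0.6369))
```

A stricter cutoff can be passed in, for example `label_compound(score, threshold=0.5)`, if only strongly worded text should count as positive or negative.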