FAST AND BIG: Making Machine Learning a Valuable Resource

The era of digital transformation has arrived, and data has become a new currency whose value is unlocked by analytics. Analytics reveals patterns, trends and associations that deliver important insights, new connections and accurate predictions, all of which can help organizations achieve better outcomes. Data is no longer generated only by traditional computer applications. It now also comes from IoT devices, mobile, connected and autonomous vehicles, machine sensors, healthcare monitors and wearables, video surveillance, and the list goes on. It's no longer just about storing data. It's about capturing, preserving, accessing and transforming it to exploit the possibilities it holds and the value and intelligence it can deliver.

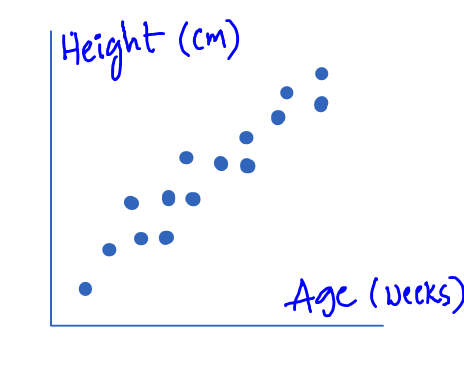

Today's businesses are realizing the value of big data, especially when it is paired with smart automation models powered by machine learning (ML). These models are moving from the core data center out to the edge device itself, where intelligent decision-making and faster time-to-action are driving better customer experiences and operational efficiencies. The goal is simple: make faster and more predictive decisions.

Restore and Activate Your Tape Archives:

To analyze big data, you must first capture it and store it on reliable, accessible media. This is needed for fast access and also to verify that the data has the highest possible integrity and accuracy. Old data, combined with new data, may actually be the most valuable data for machine learning, because a wealth of stored data is needed to run and train analytic models and to validate their reliability for production use. Archiving data isn't about recovering datasets. It's about preserving them and being able to access them easily using search and indexing methods.

Traditional tape storage methods fall short because tape deteriorates over time, cartridges can be hard to locate, and extracting the data may require legacy hardware with no operating documentation. This doesn't mean tape should be abandoned. It remains viable for backup, but multiple copies must be made in case data restoration or tape degradation problems occur. At least one copy of the data 'must' be on online media if analysis is required.
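The copy rule described above (several copies, at least one of them online) can be checked automatically against an archive catalog. The sketch below is hypothetical: the `Copy` record, the media labels in `ONLINE_MEDIA` and the `min_copies` threshold are illustrative assumptions, not the interface of any particular archive product.

```python
from dataclasses import dataclass

# Hypothetical media labels. "Online" means storage that analytics jobs can
# read directly (disk, object, cloud), unlike a tape cartridge on a shelf.
ONLINE_MEDIA = {"disk", "object", "cloud"}


@dataclass(frozen=True)
class Copy:
    dataset: str   # name of the archived dataset
    media: str     # e.g. "tape", "disk", "object", "cloud"
    location: str  # site, vault or bucket holding this copy


def check_dataset(copies, min_copies=2):
    """Return the policy problems for one dataset (empty list if compliant)."""
    problems = []
    if len(copies) < min_copies:
        problems.append(f"too few copies: {len(copies)} < {min_copies}")
    if not any(c.media in ONLINE_MEDIA for c in copies):
        problems.append("no copy on online media, so it cannot be analyzed directly")
    return problems


def audit(catalog, min_copies=2):
    """Group catalog entries by dataset and report every non-compliant dataset."""
    by_dataset = {}
    for c in catalog:
        by_dataset.setdefault(c.dataset, []).append(c)
    report = {}
    for name, copies in by_dataset.items():
        problems = check_dataset(copies, min_copies)
        if problems:
            report[name] = problems
    return report


if __name__ == "__main__":
    catalog = [
        Copy("sensor-2015", "tape", "vault-a"),
        Copy("sensor-2015", "tape", "vault-b"),
        Copy("video-2019", "tape", "vault-a"),
        Copy("video-2019", "object", "bucket-ml"),
    ]
    # Only sensor-2015 is flagged: it has two copies, but both are on tape.
    for name, problems in audit(catalog).items():
        print(name, problems)
```

Keeping the check separate from the catalog format means the same rule could be run against whatever inventory an organization already keeps, whether that is a spreadsheet export or a media-management database.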

Consolidate Your Storage:

When it comes to gaining new insights, value extraction improves over time as more data points are collected, and the real value emerges when different data assets from a variety of sources are correlated with one another. In a connected car, for example, combining video footage from external cameras with engine diagnostics or radar data could deliver a better driving experience and even save lives.
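Correlating sources such as camera video and engine diagnostics usually starts with aligning their records in time, because each source samples at its own rate. Below is a minimal sketch of a nearest-timestamp join, assuming both streams are lists of `(timestamp_ms, value)` pairs already sorted by time. The function name `nearest_join`, the millisecond units and the `tolerance` parameter are illustrative choices, not taken from any vehicle platform.

```python
from bisect import bisect_left


def nearest_join(left, right, tolerance):
    """For each (t, value) in `left`, attach the `right` value whose timestamp
    is closest to t, or None if no right record lies within `tolerance`.
    Both inputs must be sorted by timestamp."""
    right_times = [t for t, _ in right]
    joined = []
    for t, value in left:
        # Index of the first right record at or after t.
        i = bisect_left(right_times, t)
        best = None  # (gap, matched value)
        # Only the neighbor just before t and the one at/after t can be closest.
        for j in (i - 1, i):
            if 0 <= j < len(right):
                gap = abs(right_times[j] - t)
                if gap <= tolerance and (best is None or gap < best[0]):
                    best = (gap, right[j][1])
        joined.append((t, value, best[1] if best is not None else None))
    return joined


if __name__ == "__main__":
    # Hypothetical data: video frames every 40 ms, engine readings at 10 ms and 70 ms.
    frames = [(0, "frame-0"), (40, "frame-1"), (80, "frame-2")]
    engine = [(10, {"rpm": 2100}), (70, {"rpm": 2150})]
    for row in nearest_join(frames, engine, tolerance=20):
        print(row)
```

Here `frame-1` gets no engine reading because the closest one is 30 ms away, beyond the 20 ms tolerance; leaving it unmatched is safer than pairing it with stale data. For tabular data, `pandas.merge_asof` with `direction="nearest"` and a `tolerance` performs the same kind of join.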

It is therefore no surprise that organizations are focusing on consolidating their assets into a single scale-out storage architecture that supports petabyte scale and lets multiple applications access the same data at the same time. On-premises object storage and cloud storage systems serve these environments well, because they are designed to scale and to support custom data formats.

Focus on Metadata:

Deriving value from big data is really about the metadata. Extracting metadata and discovering the relationships among those metadata insights form the foundation of ML models. Once a model is sufficiently trained, it can be put into production and help deliver faster decisions in the field, whether at the edge (for example, in a car) or in the cloud (for example, in a web-scale application).

Across industries and business processes, chief data officers (CDOs), data scientists and analysts are playing a more prominent role in mapping the statistical significance of key problems and quickly translating that analysis into action for the business.

Final thoughts:

Organizations need a large amount of old and new data to run and train the analytic models that deliver valuable insights and golden nuggets of intelligence, and those models must be reliable enough to be deployed in a production environment. When we say big, we mean petabytes of capacity (not terabytes), with the ability to scale up immediately and the flexibility to ingest both file-based and object-based data. When we talk about fast, we mean not only accelerating data capture at the edge but also accelerating data in the core by quickly feeding the GPUs that analyze the data and train the models. So, for machine learning to be a valuable asset, to go fast, you need to go big first!