Top 200 Deep Learning interview questions and answers

1. What is a Neural Network?

Ans: A Neural Network is a network of interconnected neurons that work together to accomplish a task. Artificial Neural Networks are modeled on the human brain, which is made up of neurons. In an Artificial Neural Network, perceptrons play the role that neurons play in the human nervous system.

2. What are different types of Neural Networks?

Ans: (a) Feed Forward Neural Network:

In a feed forward neural network, the data passes through the different input nodes until it reaches the output node. This is also known as a front propagated wave, which is usually achieved by using a classifying activation function. Learn more>>>

3. What are the different types of Layers in an Artificial Neural Network?

Ans: Basically, there are 3 different types of layers in a neural network. The Input Layer is the layer where all the inputs are fed to the Neural Network or model. Hidden Layers are the layers between the input and output layers, which are used for processing inputs. A Neural Network can have more than one Hidden layer. Learn more>>>

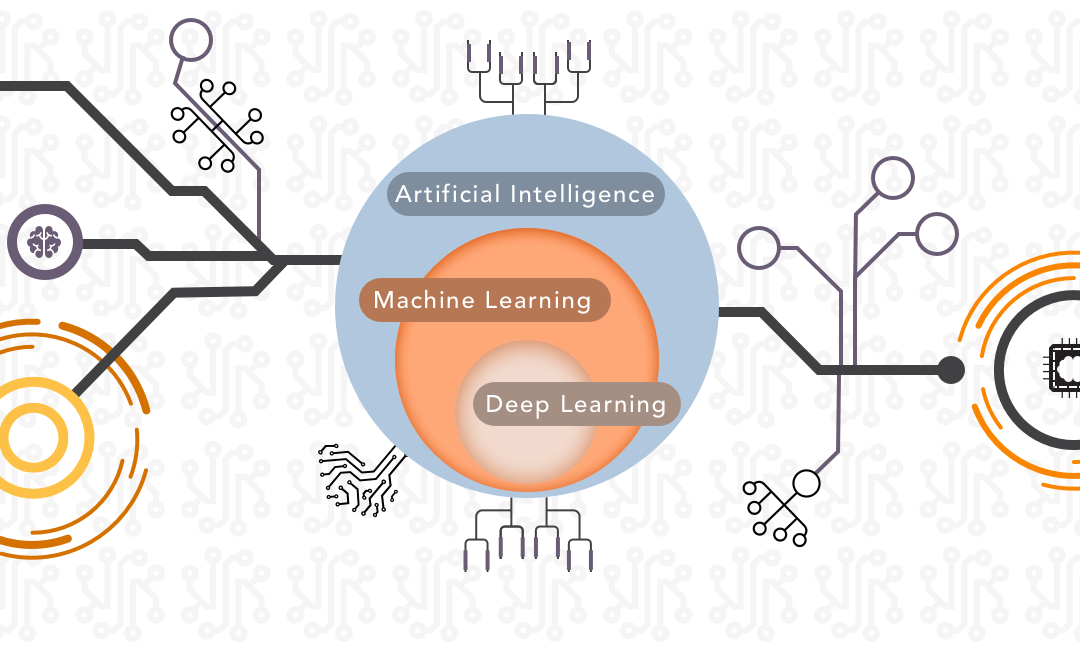

4. What is the difference between Deep Learning and Machine Learning?

Ans: Machine Learning is all about algorithms that analyze data, learn from that data, and then apply what they’ve learned to make informed decisions. Deep Learning is a type of machine learning that is inspired by the structure of the human brain. Learn more>>>

5. What is Artificial Intelligence?

Ans: Artificial intelligence (AI) is an area of computer science that emphasizes the creation of intelligent machines that work and react like humans. Artificial intelligence is based on the principle that human intelligence. Learn more>>>

6. How can we compare the Neural Network with Human Brain?

Ans: Artificial Neural Networks are inspired by the human nervous system. However, an ANN is not an exact replica of the human brain, which is far more complex. Learn more>>>

7. What do you mean by Single Layer Perceptron?

Ans: A single layer perceptron is a simple Neural Network that contains only one layer of computation. It computes the sum of each input value multiplied by its corresponding weight. This weighted sum is then passed as the input to an activation function, which produces the output. Learn more>>>

8. What is the difference between a Single Layer and a Multilayer Perceptron?

Ans: (a) Single-layer Perceptron

A Single Layer Perceptron has just an input layer and an output layer. Only the output layer performs computation, hence the name single layer perceptron. Unlike a Multilayer Perceptron, it does not contain Hidden Layers. Learn more>>>

9. What do you mean by sigmoid Neurons?

Ans: A sigmoid neuron is similar to the perceptron neuron. A sigmoid neuron can handle more variation in values than what you get with a perceptron. In fact, you can put any number you want in a sigmoid neuron and then use a sigmoid function to squeeze that number into something between zero and one. Learn more>>>

10. Explain Deep Neural Networks and Shallow Neural Networks?

Ans: Shallow neural networks, which consist of only 1 or 2 hidden layers, give us a basic idea of how deep neural networks work. Understanding a shallow neural network gives us insight into what exactly is going on inside a deep neural network. Learn more>>>

11. Exploring ‘OR’, ‘XOR’, ‘AND’ gates in a Neural Network?

Ans: AND Gate: From our knowledge of logic gates, we know that an AND logic table is given by the diagram below. First, we need to understand that the output of an AND gate is 1 only if both inputs are 1. Learn more>>>
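As a rough illustration of how a single perceptron can realise these gates, here is a minimal sketch. The weights and biases are hand-picked for the example rather than learned, and the function names are hypothetical. XOR is not linearly separable, so the sketch composes it from three perceptrons instead of one.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def and_gate(a, b):
    # Fires only when both inputs are 1 (1 + 1 - 1.5 > 0).
    return perceptron([a, b], [1.0, 1.0], -1.5)


def or_gate(a, b):
    # Fires when at least one input is 1 (1 - 0.5 > 0).
    return perceptron([a, b], [1.0, 1.0], -0.5)


def nand_gate(a, b):
    # Negated AND, used as a building block for XOR.
    return perceptron([a, b], [-1.0, -1.0], 1.5)


def xor_gate(a, b):
    # XOR needs a hidden layer: (a OR b) AND (a NAND b).
    return and_gate(or_gate(a, b), nand_gate(a, b))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

The XOR case shows why hidden layers matter: no single choice of weights and bias reproduces its truth table.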

12. What do you mean by an Activation Function in a Neural Network? List them out?

Ans: An Activation Function is applied to the weighted sum of a neuron’s inputs plus the bias in order to produce the neuron’s output. It introduces non-linearity into the output of a neuron. Back Propagation is possible in a Neural Network because the Activation Function is differentiable, so gradients can be computed along with the error to update the weights and biases. Learn more>>>

13. Explain in brief the Logistic or Sigmoid Activation Function?

Ans: Sigmoid is an activation function with an S-shaped curve. It takes a real value as input and gives an output between 0 and 1. It is non-linear, continuously differentiable, and has a fixed output range. Unlike the Step function, it gives a continuous output and has a smooth gradient. Learn more>>>
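The properties listed above can be checked with a small sketch. It assumes the usual definition sigmoid(x) = 1 / (1 + e^(-x)); the function names are illustrative only, and the two-branch form is just one way to avoid overflow for very negative inputs.

```python
import math


def sigmoid(x):
    """Logistic function: squashes any real x into the range 0 to 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use an equivalent form that cannot overflow.
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_derivative(x):
    """The derivative can be written through the output: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    for x in (-5.0, 0.0, 5.0):
        print(x, sigmoid(x), sigmoid_derivative(x))
```

The derivative is largest at x = 0 and shrinks towards zero for large positive or negative inputs, which is where the smooth gradient flattens out.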

14. Explain in brief the Hyperbolic Tangent or Tanh?

Ans: Tanh, also called the Hyperbolic Tangent Activation Function, often works better than the Sigmoid Function. Like the Sigmoid Function, it has a simple derivative. Its range of values is from -1 to +1. It has an S-shaped curve that is zero centered. Learn more>>>

15. Explain in brief ReLU (Rectified Linear Unit)?

Ans: ReLU is the short form of Rectified Linear Unit, the most widely used activation function in Neural Networks. It is mainly used in the Hidden layers of a Neural Network. For zero and negative inputs it gives an output of zero, whereas for a positive input it returns the same positive value. Learn more>>>

16. Explain in brief the Step / Threshold Activation Function?

Ans: The Step Activation Function is also called the Binary Step Function because it produces a binary output, that is, only 0 or 1. The function has a Threshold value: when the input is greater than the Threshold value the output is 1, otherwise it is 0. Hence it is also called the Threshold Activation Function. Learn more>>>

17. Explain in brief the Leaky ReLU Activation Function?

Ans: Leaky ReLU is an extension of the ReLU Activation Function that overcomes the Dying ReLU problem. In the Dying ReLU problem, a neuron becomes inactive (it outputs zero) for any input provided to it, which affects the performance of the Neural Network. Learn more>>>
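A minimal sketch of the two functions side by side may help. The slope of 0.01 for negative inputs is a common default chosen here as an assumption, and the parameter name `negative_slope` is illustrative.

```python
def relu(x):
    """Return x for positive inputs and 0 otherwise."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs keep a small non-zero slope."""
    return x if x > 0 else negative_slope * x


if __name__ == "__main__":
    for x in (-3.0, 0.0, 2.5):
        print(x, relu(x), leaky_relu(x))
```

Because the negative side of Leaky ReLU is not flat, its gradient there is small but non-zero, so a neuron receiving negative inputs can still be updated.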

18. Explain in brief the Softmax Activation Function?

Ans: The Softmax Function is mostly used in the final layer of a Neural Network. It maps each output to a value between 0 and 1 in such a way that the outputs sum to 1. Put simply, the function calculates a probability distribution over the classes, and these probabilities determine the target class for the given inputs. Learn more>>>
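The following sketch shows one way to compute softmax over a list of raw scores. Subtracting the maximum score before exponentiating is a common numerical-stability trick, added here as an assumption; it does not change the result.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    probs = softmax([2.0, 1.0, 0.1])
    print(probs, sum(probs))
```

The largest score receives the largest probability, and the predicted class is simply the index of that largest probability.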

19. What is the difference between Sigmoid and Softmax Function?

Ans: The Sigmoid Function is used for two-class Logistic Regression. The sum of the probabilities need not be 1. It is used as an Activation Function while building Neural Networks. A high value will have a high probability, but it need not be the highest probability. Learn more>>>

20. Which Activation Functions are Zero centered? Explain.

Ans: Tanh, or the Hyperbolic Tangent Function, is a Zero centered Activation Function. Its range of values is from -1 to +1, with a midpoint of 0. Learn more>>>

21. Explain Back Propagation in Neural Network?

Ans: Back Propagation is the algorithm used to train a Neural Network. The error measured at the output is propagated backwards through the network, from the output layer towards the earlier layers, so that each weight can be adjusted to reduce the error at the output. This process is repeated over a number of iterations. Learn more>>>

22. Explain Biases and Weights in Neural Network?

Ans: In general, bias is a prior assumption built into a model. In a Neural Network, bias is like the intercept added in a linear equation. It is an additional parameter used to adjust the output along with the weighted sum of the inputs to the neuron. Weight is the steepness (slope) of the linear function. Learn more>>>

23. How do Neural Networks update weights and Biases during Back Propagation?

Ans: The goal is to reduce the error by changing the values of the weights and biases. To do this, we calculate the rate of change of the error with respect to each weight. Since we are propagating backwards, the first thing we need to do is calculate the change in the total error with respect to the outputs O1 and O2. Learn more>>>

24. What are the different functions that we use in Keras to build a basic Neural Network?

Ans: Different Activation Functions are used in Keras to build a Neural Network. In a basic network, ReLU (Rectified Linear Unit) is typically used in the first two layers and the Sigmoid Function at the Output layer. We use a sigmoid on the output layer to ensure the network output is between 0 and 1. The Tanh function can be used at any layer. Learn more>>>

25. What do you mean by a Dense layer in a Keras Neural Network?

Ans: A Dense Layer is a regular layer of neurons in a Neural Network. Each neuron receives input from all the neurons in the previous layer, hence the name Dense Layer. This layer represents a matrix-vector multiplication. The values in this matrix are trainable parameters which get updated during Back Propagation. A Dense layer is also used to change the dimension of the vector passed through it. Learn more>>>

26. What do you mean by a Dropout layer in a Keras Neural Network?

Ans: A dropout layer is used for regularization: it randomly sets some of the dimensions of its input vector to zero during training. A dropout layer does not have any trainable parameters, so nothing in it is updated during training. Learn more>>>
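To make the idea concrete, here is a small pure-Python sketch of dropout, not the Keras implementation itself. It uses the "inverted dropout" convention, in which surviving values are scaled up by 1 / (1 - rate) so the expected sum stays the same; the function name and the explicit `rng` argument are assumptions made for the example.

```python
import random


def dropout(values, rate, rng, training=True):
    """Zero each value with probability `rate`; scale survivors by 1/(1-rate).

    `rate` must be in the range 0 <= rate < 1.
    """
    if not training or rate == 0.0:
        return list(values)  # dropout is a no-op at inference time
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    print(dropout([1.0, 1.0, 1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))
```

Note that the function holds no weights at all, which matches the point above that a dropout layer has nothing to train.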

27. Why do we use the Softmax function only at the end of the Neural Network?

Ans: The Softmax Function works almost like a max layer, in that each output node produces a value between 0 and 1, with the largest input pushed towards 1. Unlike a hard max, it is differentiable, so it can be trained by gradient descent. The sum of all outputs is always equal to 1. The highest output value has the highest probability. Learn more>>>

28. What do you mean by Epoch, Batch and Iterations in a Neural Network?

Ans: Epoch: In practice we have very large datasets which we cannot feed to the computer all at once. This means the entire dataset has to be divided into parts before it is fed to the Neural Network. One Epoch is when the complete dataset has been passed forward and backward through the Neural Network exactly once. Learn more>>>
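The relationship between the three terms is simple arithmetic, sketched below. The function names and the example figures (2000 samples, batches of 500) are illustrative assumptions; the last batch is allowed to be smaller than the others.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


def total_iterations(num_samples, batch_size, epochs):
    """Number of weight updates over the whole training run."""
    return iterations_per_epoch(num_samples, batch_size) * epochs


if __name__ == "__main__":
    print(iterations_per_epoch(2000, 500))   # batches in one epoch
    print(total_iterations(2000, 500, 3))    # batches over three epochs
```

With 2000 samples and a batch size of 500, one epoch takes 4 iterations, so 3 epochs take 12.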

29. What are the different Gradient Descent implementations/ methods?

Ans: Batch Gradient Descent: It processes all the training examples for each iteration of gradient descent. If the number of training examples is large, then batch gradient descent is very expensive. So, in case of large training examples we prefer to use stochastic gradient descent or mini-batch gradient descent. Learn more>>>

30. What are the different Loss functions that we generally use for Neural Networks?

Ans: There are no loss functions specific to Neural Networks. In general, we use Regression Loss Functions or Classification Loss Functions, depending on the task. Mean square error, a regression loss, is measured as the average of the squared differences between predictions and actual observations. It is only concerned with the average magnitude of the errors, irrespective of their direction. Learn more>>>

31. What is the difference between sparse categorical cross entropy and categorical cross entropy?

Ans: Sparse categorical cross entropy and categorical cross entropy compute the same loss; the only difference is the format of the labels (integer class indices versus one-hot vectors). Which one to use depends entirely on how the dataset is loaded. The advantage of sparse categorical cross entropy is that it saves memory and speeds up the computation. Learn more>>>
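A small sketch can show that the two losses agree for a single example. These are plain-Python stand-ins written for illustration, not the Keras functions; the only difference between them is whether the label arrives as a one-hot list or as an integer class index.

```python
import math


def categorical_cross_entropy(one_hot, probs):
    """Label given as a one-hot vector, e.g. [0, 1, 0]."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs) if t > 0)


def sparse_categorical_cross_entropy(class_index, probs):
    """Label given as an integer class index, e.g. 1."""
    return -math.log(probs[class_index])


if __name__ == "__main__":
    probs = [0.1, 0.7, 0.2]  # predicted class probabilities
    print(categorical_cross_entropy([0, 1, 0], probs))
    print(sparse_categorical_cross_entropy(1, probs))
```

The sparse form stores one integer per sample instead of a vector as long as the number of classes, which is where the memory saving comes from.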

32. What are the different optimization algorithms that we generally use in Neural Network?

Ans: Optimizers are algorithms or methods used to change the attributes of your neural network such as weights and learning rate in order to reduce the losses. There are different types of optimizers, let us see in detail. Learn more>>>

33. Explain brief about Mini Batch Gradient Descent?

Ans: Mini-batch gradient descent is an extension of the gradient descent algorithm. Mini batch gradient descent splits the training dataset into small batches which are used to calculate model error and update model coefficients. Learn more>>>

34. How does Gradient Descent help to minimize loss functions?

Ans: Gradient descent is an optimization algorithm used to minimize a loss function by iteratively moving in the direction of steepest descent, defined by the negative of the gradient, towards the lowest point in the graph. Our first step is to move towards the down direction specified Learn more>>>
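As a minimal sketch of the idea, the loop below minimizes the one-dimensional function f(x) = (x - 3)^2, whose gradient is 2(x - 3). The function, the starting point, the learning rate and the step count are all assumptions chosen for the example.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)  # move in the direction of steepest descent
    return x


def quadratic_grad(x):
    """Gradient of f(x) = (x - 3)^2, which has its minimum at x = 3."""
    return 2.0 * (x - 3.0)


if __name__ == "__main__":
    print(gradient_descent(quadratic_grad, start=0.0))
```

Each step shrinks the distance to the minimum by a constant factor here, so the result approaches 3 without ever needing the loss value itself, only its gradient.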

35. What do you mean by RMS Prop?

Ans: RMS Prop is an optimization technique for Neural Networks that is widely used even though it has never been formally published. To understand RMS Prop, we first need to know about R Prop. The R Prop algorithm is used for full batch optimization. It tries to resolve the problem of gradients that vary widely in magnitude. Learn more>>>

36. Define Momentum in Gradient Descent?

Ans: Generally, Deep Neural Networks are trained on noisy data, and the gradients computed from batches during optimization are noisy as well. The effect of this noise can be reduced using exponentially weighted averages. Momentum applies this idea to the gradients: a parameter of the algorithm controls how strongly past gradients are weighted, which helps identify the trend in the noisy data. Learn more>>>

37. What is the difference between Momentum and Nesterov Momentum?

Ans: The momentum method is a technique that can speed up gradient descent by taking account of previous gradients in the update rule at every iteration. In the update rule, v is the velocity term, that is, the direction and speed at which the parameter should be tweaked, and α is the decaying hyper-parameter. Learn more>>>
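The excerpt does not show its update equations, so the sketch below uses one common textbook formulation as an assumption: v = αv - lr * grad(θ), then θ = θ + v. Nesterov momentum differs only in where the gradient is evaluated, namely at the look-ahead point θ + αv. The names `lr`, `momentum_step` and `nesterov_step` are illustrative.

```python
def momentum_step(theta, v, grad, lr=0.1, alpha=0.9):
    """Classical momentum: gradient taken at the current position."""
    v = alpha * v - lr * grad(theta)
    return theta + v, v


def nesterov_step(theta, v, grad, lr=0.1, alpha=0.9):
    """Nesterov momentum: gradient taken at the look-ahead position."""
    v = alpha * v - lr * grad(theta + alpha * v)
    return theta + v, v


def grad_square(theta):
    """Gradient of f(theta) = theta^2."""
    return 2.0 * theta


if __name__ == "__main__":
    state_m = state_n = (1.0, 0.0)  # (theta, velocity)
    for _ in range(2):
        state_m = momentum_step(*state_m, grad_square)
        state_n = nesterov_step(*state_n, grad_square)
    print(state_m, state_n)
```

With zero initial velocity the first step is identical for both methods; from the second step on, Nesterov's look-ahead gradient produces a more cautious update on this function.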

38. Explain about Adam Optimization Function?

Ans: Adam can be looked at as a combination of RMSprop and Stochastic Gradient Descent with momentum. It uses the squared gradients to scale the learning rate like RMSprop and it takes advantage of momentum by using moving average of the gradient instead of gradient itself like SGD with momentum. Learn more>>>
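The combination described above can be sketched as a single-parameter update. This follows the commonly cited form of Adam with bias correction; the default hyper-parameter values and the function name are assumptions for the example, not values taken from the text.

```python
import math


def adam_step(theta, grad_value, m, v, t,
              lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single parameter; t is the step count from 1."""
    m = beta1 * m + (1 - beta1) * grad_value        # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad_value ** 2   # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)                    # correct the bias towards zero
    v_hat = v / (1 - beta2 ** t)
    theta -= lr * m_hat / (math.sqrt(v_hat) + eps)  # momentum-like step, RMSprop-like scaling
    return theta, m, v


if __name__ == "__main__":
    theta, m, v = 1.0, 0.0, 0.0
    for t in range(1, 4):
        theta, m, v = adam_step(theta, 2.0 * theta, m, v, t)
        print(t, theta)
```

On the very first step the bias-corrected averages reduce to the gradient and its square, so the parameter moves by roughly the learning rate regardless of the gradient's size.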

39. What is the difference between Adagrad, Adadelta and Adam?

Ans: Adagrad scales alpha for each parameter according to the history of gradients (previous steps) for that parameter, which is basically done by dividing the current gradient in the update rule by the square root of the sum of the squared previous gradients. As a result, when the gradients are very large, alpha is reduced, and vice versa. Learn more>>>
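A single-parameter sketch of the Adagrad rule is shown below. It assumes the usual form in which squared gradients are accumulated and the step is divided by the square root of that sum; the names `accum`, `lr` and `eps` are illustrative, and `eps` is only there to avoid division by zero.

```python
import math


def adagrad_step(theta, grad_value, accum, lr=0.1, eps=1e-8):
    """One Adagrad update; `accum` is the running sum of squared gradients."""
    accum += grad_value ** 2
    theta -= lr * grad_value / (math.sqrt(accum) + eps)
    return theta, accum


if __name__ == "__main__":
    theta, accum = 1.0, 0.0
    for _ in range(3):
        theta, accum = adagrad_step(theta, 2.0, accum)
        print(theta, accum)
```

Feeding the same gradient repeatedly shows the effect: the accumulated sum grows, so each successive step is smaller than the one before.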

40. What are Local Minima and Global Minima in Gradient Descent?

Ans: A point on a curve which is minimum when compared to its preceding and succeeding points is called a local minimum. The point on a curve which is minimum when compared to all other points on the curve is called the global minimum. Learn more>>>

41. How can you avoid local minima to achieve the minimized loss function?

Ans: We can try to prevent gradient descent from getting stuck in a local minimum by providing a momentum value. Momentum gives the update a basic impulse in a specific direction and helps it escape narrow or small local minima. Learn more>>>

42. What is the Learning rate and how can it affect accuracy and performance in Neural Networks?

Ans: A neural network learns or approximates a function to best map inputs to outputs from examples in the training dataset. The learning rate controls how quickly it does so: precisely, it controls the amount of apportioned error with which the weights of the model are updated each time they are updated. Learn more>>>

43. What might be the reasons a Neural Network model is unable to decrease its loss during training?

Ans: A large learning rate will cause the optimization to diverge, while a small learning rate will prevent you from making real improvement and possibly allow the noise inherent in SGD to overwhelm your gradient estimates. Scheduling the learning rate can decrease it over the course of training. Learn more>>>

44. What is a Convolutional Neural Network? What are the layers used in it?

Ans: A Convolutional Neural Network is a Deep Learning algorithm which can take an image as input, assign importance (learnable weights and biases) to various objects in the image, and differentiate one from the other. The pre-processing required in a ConvNet is much lower compared to other classification algorithms. Learn more>>>

45. What do you mean by filtering, stride and padding in a Convolutional Neural Network?

Ans: A filter is represented by a vector of weights with which we convolve the input. Every network layer acts like a filter for the presence of specific features or patterns in the original image. For detection by a filter, it is irrelevant where exactly this specific feature or pattern. Learn more>>>
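Stride and padding are easiest to see through their effect on the output size. The sketch below uses the standard formula floor((n + 2p - f) / s) + 1 for one spatial dimension, which is not stated in the excerpt and is included here as an assumption; the function and parameter names are illustrative.

```python
def conv_output_size(input_size, filter_size, stride=1, padding=0):
    """Output length along one dimension of a convolution."""
    return (input_size + 2 * padding - filter_size) // stride + 1


if __name__ == "__main__":
    print(conv_output_size(32, 5))                        # no padding, stride 1
    print(conv_output_size(32, 5, stride=1, padding=2))   # padding keeps the size
    print(conv_output_size(7, 3, stride=2))               # larger stride shrinks it
```

A 5-wide filter on a 32-wide input gives 28 outputs; padding of 2 on each side restores the width to 32, and a stride of 2 on a 7-wide input with a 3-wide filter gives 3.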

46. Explain the concept of Pooling in a Convolutional Neural Network?

Ans: A pooling layer is one of the building blocks of a Convolutional Neural Network. Its function is to gradually reduce the spatial size of the representation in order to reduce the number of parameters and the amount of computation in the network. Learn more>>>
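As an illustration of how pooling shrinks a feature map, here is a minimal sketch of 2x2 max pooling with stride 2 on a nested list. Max pooling is only one pooling variant, chosen here as an assumption; any trailing odd row or column is simply dropped in this sketch.

```python
def max_pool_2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


if __name__ == "__main__":
    grid = [
        [1, 3, 2, 4],
        [5, 6, 1, 2],
        [7, 2, 9, 1],
        [3, 4, 5, 6],
    ]
    print(max_pool_2x2(grid))
```

The 4x4 input becomes a 2x2 output, so later layers have a quarter as many values to process.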

47. What do you mean by a Capsule Neural Network?

Ans: A capsule is a nested set of neural layers. In a regular neural network, you keep on adding more layers, whereas in a Capsule Neural Network you would add more layers inside a single layer. In other words, you nest a neural layer inside another layer. Learn more>>>

48. What is the difference between Convolutional Neural Network and Capsule Neural Network?

Ans: Convolutional Neural Network is used for the Image recognition by using different layers. Convolutional layer comprises set of independent filters where each filter is convolved with image. Pooling layer is used to reduce the spatial size of representation to reduce the number. Learn more>>>

49. Why do we mainly use Convolutional Neural Networks for Image recognition?

Ans: The Convolutional Neural Network was developed with inspiration from the visual cortex in the human brain. CNNs are complex feed forward Neural Networks which are used for Image recognition because of their high accuracy. The CNN follows a hierarchical model which works on building. Learn more>>>

50. What are the different hyperparameters used in Convolutional Neural Networks during model training?

Ans: Tuning hyperparameters for a deep neural network is difficult because it is slow to train a deep neural network and there are many parameters to configure. The learning rate controls how much the weights are updated in the optimization algorithm. It can be handled by fixing the learning rate, by gradually decreasing the learning rate, or by momentum-based methods. Learn more>>>

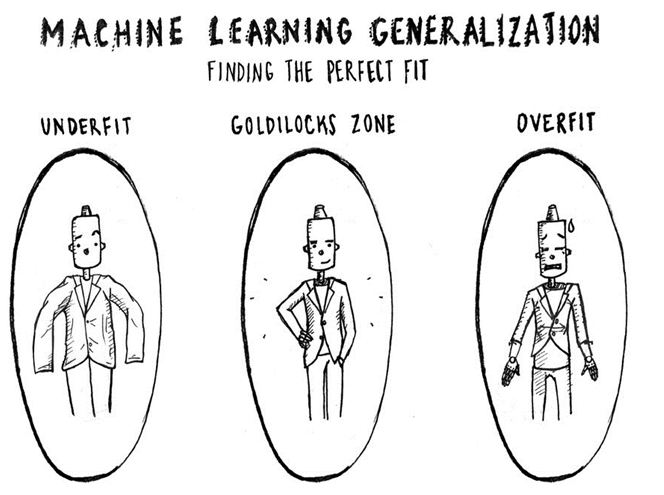

51. How can you understand underfitting and overfitting in a Neural Network?

Ans: A model is said to be underfitting when it cannot capture the underlying trend of the data. Underfitting destroys the accuracy of the model. Its occurrence simply means that our model does not fit the data well enough. It usually happens when we have less data to build an accurate. Learn more>>>

52. What are the Regularization techniques used in Neural Networks?

Ans: Regularization is a technique which makes slight modifications to the learning algorithm such that the model generalizes better. This in turn improves the model’s performance on the unseen data as well. In deep learning, regularization penalizes the weight matrices of the nodes. Learn more>>>

53. What is meant by Data Augmentation? What are its uses?

Ans: Data augmentation adds value to base data by adding information derived from internal and external sources within an original data. Data augmentation can be applied to any form of data, but may be especially useful for customer data, sales patterns, product sales, where additional information can help provide more in-depth insight. Learn more>>>

54. When do we apply early stopping and how is it helpful?

Ans: Early Stopping is a type of regularization technique. During training, the model is evaluated on a holdout validation dataset after each epoch. If the performance of the model on the validation dataset starts to degrade, then the training process is stopped. The model at the point where training is stopped is then used, and it is known to have good generalization performance. Learn more>>>
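The stopping rule can be sketched over a list of per-epoch validation losses. The `patience` parameter (how many non-improving epochs to tolerate) is a common refinement added here as an assumption, and the function name and the loss values are illustrative.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch  # never triggered: ran to the end


if __name__ == "__main__":
    losses = [0.9, 0.7, 0.6, 0.65, 0.7, 0.5]
    print(early_stopping_epoch(losses, patience=2))
```

With a patience of 2, training on the example losses stops at epoch 4 and the weights from epoch 2 would be the ones to keep. The later drop to 0.5 is never seen, which is the trade-off a small patience makes.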

55. Explain Recurrent Neural Networks?

Ans: Recurrent Neural Networks are a type of Neural Network where the output from the previous step is fed as input to the current step. In traditional neural networks, all the inputs and outputs are independent of each other, but when it is required to predict the next word of a sentence, the previous words are required and hence there is a requirement. Learn more>>>

56. What is the difference between one to one, one to many, many to one, many to many in Neural Network?

Ans: In a One-To-Many relationship, one object is the “parent” and other is the “child”. The parent controls the presence of the child. In a Many-To-Many relationship, the existence of either type is dependent on something outside the both of them. Learn more>>>

57. What is the difference between Bidirectional RNN and RNN?

Ans: Bidirectional Recurrent Neural Networks (BRNN) connect two hidden layers of opposite directions to the same output. With this form of generative deep learning, the output layer can get information from past and future states at the same time. Learn more>>>

58. What are the different ways of solving Gradient issues in RNN?

Ans: The lower the gradient is, the harder it is for the network to update the weights and the longer it takes to get to the final result. The output of the earlier layers is used as the input for the further layers. The training for the time point t is based on inputs that are coming from untrained layers. Learn more>>>

59. What is LSTM and Explain different types of gates used in LSTM?

Ans: Long Short Term Memory networks – usually just called “LSTMs” – are a special kind of RNN, capable of learning long-term dependencies. They work tremendously well on a large variety of problems, and are now widely used. Learn more>>>

60. Explain the Autoencoder? Give details about the Encoder, Decoder and Bottleneck?

Ans: An autoencoder is a neural network that has three layers: an input layer, a hidden layer or encoding layer, and a decoding layer. Autoencoders are a type of basic Machine Learning model in which the output is the same as the input. They belong to the family of Neural Networks and work by compressing the input into the Latent space. Learn more>>>

61. What are the different components in Autoencoders?

Ans: An Autoencoder consists of three components. The first, the Encoder, is also called the Input layer of the Autoencoder. It encodes the input image and sends it into the code layer or Bottleneck layer of the Autoencoder. Learn more>>>

62. Explain the Undercomplete Autoencoder?

Ans: The Undercomplete Autoencoder is a type of Autoencoder. Its goal is to capture the important features present in the data. It has a small hidden layer when compared to the Input Layer. Undercomplete Autoencoders do not need any regularization, as they maximize the probability of the data rather than copying the input to the output. Learn more>>>

63. Explain about Sparse Autoencoder?

Ans: A sparse autoencoder has a sparsity enforcer that directs a single layer network to learn a code dictionary which minimizes the error in reproducing the input while constraining the number of code words used for reconstruction. Learn more>>>

64. Explain about Denoising Autoencoder?

Ans: Generally, Autoencoders are used for Feature Selection and Feature Extraction. When the Hidden Layer has more nodes than the Input layer, the Autoencoder risks simply copying the Input to the output without learning useful features. To rectify this problem, we use a Denoising Autoencoder. Learn more>>>

65. Explain about Convolutional autoencoder?

Ans: Convolutional Autoencoders use the convolution operator to exploit this observation. Rather than manually engineer convolutional filters we let the model learn the optimal filters that minimize the reconstruction error. These filters can then be used in any computer vision task. Learn more>>>

66. Explain about the Contractive autoencoders?

Ans: A contractive autoencoder is an unsupervised deep learning technique that helps a neural network encode unlabeled training data. Contractive autoencoder (CAE) objective is to have a robust learned representation which is less sensitive to small variation in the data. Learn more>>>

67. Explain about Deep autoencoders?

Ans: Deep Autoencoders consist of two identical deep belief networks. One network for encoding and another for decoding. Typically, deep autoencoders have 4 to 5 layers for encoding and the next 4 to 5 layers for decoding. We use unsupervised layer by layer pre-training. The layers are restricted Boltzmann machines, which are the building blocks of deep-belief networks. Learn more>>>

68. Explain the terms Latent space representation?

Ans: Latent Space Representation is the compressed representation of an Image. In Auto Encoders, the Image is compressed and represented in the form of Latent Space Representation. Later by using Auto Decoder, one can get extended form of Image from Latent Space Representation. In Auto Encoding, Latent Space Representation acts as a layer which is in between Input layer and Output layer. Learn more>>>

69. What is Restricted Boltzmann Machine?

Ans: A Restricted Boltzmann machine is an algorithm useful for dimensionality reduction, classification, regression, collaborative filtering, feature learning and topic modeling. RBMs are shallow, two-layer neural nets that constitute the building blocks of deep-belief networks. The first layer of the RBM is called the visible, or input, layer, and the second is the hidden layer. Learn more>>>

70. How to perform Hyperparameter tuning in Autoencoders?

Ans: While training an Autoencoder, we will do hyperparameter tuning in order to obtain the required output. Code size: It represents the number of nodes in the middle layer. Smaller size results in more compression. Number of layers: The autoencoder can consist of as many layers as we want. Learn more>>>

71. What are popular Deep Learning Libraries in the world?

Ans: Nowadays Deep learning is evolving rapidly. The most popular Deep learning Libraries as of 2019 include TensorFlow. It’s handy for creating and experimenting with deep learning architectures, and its formulation is convenient for data integration such as inputting graphs, SQL tables, and images together. It is backed by Google, which suggests it will stay around for a while, hence it makes sense to invest time and resources to learn it. Learn more>>>

72. What do you mean by Transfer Learning? How do you apply in Neural Network?

Ans: Transfer learning is a machine learning method where a model developed for one task is reused as the starting point for a model on another task. Transfer learning methods can be categorized based on the type of traditional ML algorithms involved, such as: Learn more>>>

73. What is the difference between Keras and TensorFlow?

Ans: TensorFlow is an open source platform for machine learning. It’s a comprehensive and flexible environment of tools, libraries and other resources that provide workflows with high-level APIs. The framework offers various levels of concepts for you to choose the one to build and deploy machine learning models. Learn more>>>

74. How do you save a Neural Network model in Keras after training and tuning?

Ans: Neural Network models can be saved in Keras in two formats, JSON and YAML. JSON is a simple file format for describing data hierarchically. Keras provides the ability to describe any model using JSON format with a to_json() function. This can be saved to file and later loaded via the model_from_json() function, which will create a new model from the JSON specification. Learn more>>>

75. What are the different Pre-trained models available in keras?

Ans: It is not always possible to build a model from scratch due to lack of time or other reasons. This is where Pre-trained models are useful. A pre-trained model is a model that was trained on a large standard dataset to solve a problem similar to the one that we have to solve. Learn more>>>

76. What do you mean by VGG16 model? And how do we use it for Image Classification?

Ans: VGG16 is a convolutional neural network model. The model achieves 92.7% top-5 test accuracy on ImageNet, which is a dataset of over 14 million images belonging to 1000 classes. The input to the conv1 layer is a fixed-size 224 x 224 RGB image. The image is passed through a stack of convolutional (conv.) layers, where the filters are used with a very small receptive field: 3×3 (which is the smallest size to capture the notion of left/right, up/down, center). Learn more>>>

77. Explain the steps to solve a Regression Problem using Keras in a Neural Network?

Ans: To solve a regression problem, a Neural Network is built in Keras for the case where our dependent variable (y) is numeric (interval scale) and we are trying to predict the value of y with as much accuracy as possible. Learn more>>>

78. Explain the Architecture of Keras Framework?

Ans: Keras is an Open Source Neural Network library written in Python that runs on top of Theano or TensorFlow. It is designed to be modular, fast and easy to use. Keras is high-level API wrapper for the low-level API, capable of running on top of TensorFlow, CNTK, or Theano. Learn more>>>

79. What do you mean by Mobile Net Model?

Ans: Mobile Net is an architecture which is more suitable for mobile and embedded based vision applications where there is lack of computation power. This architecture uses depth wise separable convolutions which significantly reduces the number of parameters when compared to the network. Learn more>>>

80. What do you mean by Google’s TensorFlow, Explain TensorFlow’s Architecture and its work?

Ans: The most famous deep learning library in the world is Google’s TensorFlow. Google uses machine learning in all of its products to improve the search engine, translation, image captioning or recommendations. Learn more>>>

81. What do you mean by Tensor and Explain about Tensor Datatype and Ranks?

Ans: A tensor is often thought of as a generalized matrix. That is, it could be a 1-D matrix (a vector is actually such a tensor), a 3-D matrix (something like a cube of numbers), even a 0-D matrix (a single number), or a higher dimensional structure that is harder to visualize. The dimension of the tensor is called its rank. Learn more>>>
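The notion of rank can be sketched with nested Python lists standing in for tensors. This is an illustration only, not how TensorFlow stores tensors, and the helper assumes non-empty, evenly nested lists.

```python
def tensor_rank(value):
    """Count nesting depth: 0 for a scalar, 1 for a vector, 2 for a matrix."""
    rank = 0
    while isinstance(value, list):
        rank += 1
        value = value[0]  # descend into the first element (assumes non-empty)
    return rank


if __name__ == "__main__":
    print(tensor_rank(5))                                # a single number
    print(tensor_rank([1, 2, 3]))                        # a vector
    print(tensor_rank([[1, 2], [3, 4]]))                 # a matrix
    print(tensor_rank([[[1], [2]], [[3], [4]]]))         # a cube of numbers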

82. What do you mean by Tensorboard?

Ans: Tensorboard is the interface used to visualize the graph and other tools to understand, debug, and optimize the model. Tensorboard provides us with a suite of web applications that help us to inspect and understand the TensorFlow runs and graphs. Currently, it provides five types of visualizations: scalars, images, audio, histograms, and graphs. Learn more>>>

83. Elaborate on Constants, Variables and Placeholders in TensorFlow?

Ans: TensorFlow supports three main data types, namely Constants, Variables and Placeholders. A Constant can be created using the tf.constant() function. A constant has the following arguments, which can be tweaked as required to get the desired function. value: A constant value (or list) of output type dtype. Learn more>>>

84. How do you build computational graph in TensorFlow?

Ans: TensorFlow uses a dataflow graph to represent your computation in terms of the dependencies between individual operations. This leads to a low-level programming model in which you first define the dataflow graph, then create a TensorFlow session to run parts of the graph across a set of local and remote devices. Learn more>>>

85. What is TensorFlow Pipelines and where it can be applied?

Ans: GPUs and TPUs can radically reduce the time required to execute a single training step. Achieving peak performance requires an efficient input pipeline that delivers data for the next step before the current step has finished. The tf.data API helps to build flexible and efficient input pipelines. Learn more>>>

86. How to implement Gradient Descent in TensorFlow?

Ans: Gradient Descent is a learning algorithm that attempts to minimize some error. Learn more>>>

87. How to implement Linear Classification model in TensorFlow?

Ans: The two most common supervised learning tasks are linear regression and linear classifier. Linear regression predicts a value while the linear classifier predicts a class. This tutorial is focused on Linear Classifier. Learn more>>>

88. What is computer vision?

Ans: Computer Vision, often abbreviated as CV, is defined as a field of study that seeks to develop techniques to help computers “see” and understand the content of digital images such as photographs and videos. Computer vision is closely linked with artificial intelligence, as the computer must interpret what it sees, and then perform appropriate analysis or act accordingly. Learn more>>>

89. How many types of image filters are there in OpenCV?

Ans: Filters generally use many pixels to compute each new pixel value, whereas point operations use only one pixel. Filters can be used to blur the local intensity of an image to make it smooth. The idea is to replace every pixel by the average of its neighboring pixels. Learn more>>>
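
The averaging idea can be sketched in plain Python on a small grayscale image stored as a list of rows. This is an illustration of the technique, not OpenCV's implementation; the 3x3 window and the choice to shrink the window at the borders are assumptions made for the example.

```python
def box_blur(image):
    """Replace each pixel by the mean of its 3x3 neighborhood."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Collect the neighbors that fall inside the image.
            neighbors = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
            ]
            out[r][c] = sum(neighbors) / len(neighbors)
    return out


# A single bright pixel gets spread over its neighborhood.
image = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]
blurred = box_blur(image)
print(blurred[1][1])  # 1.0  (9 spread over 9 pixels)
print(blurred[0][0])  # 2.25 (9 spread over the 4 pixels in the corner window)
```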

90. What are face recognition algorithms?

Ans: The face recognition algorithm is responsible for finding characteristics which best describe the image. The face recognition systems can operate basically in two modes: Verification or authentication of a facial image: it basically compares the input facial image. Learn more>>>

91. How can you do face detection in OpenCV and what is the difference between face detection and recognition?

Ans: Face detection is a computer technology being used in a variety of applications that identifies human faces in digital images. Face-detection algorithms focus on the detection of frontal human faces. It is analogous to image detection in which the image of a person is matched bit by bit. Learn more>>>

92. How can you detect corners in images using OpenCV?

Ans: The purpose of detecting corners is to track things like motion, do 3D modeling, and recognize objects, shapes, and characters. Learn more>>>

93. What Is Geometric Transformation?

Ans: A geometric transformation is any bijection of a set having some geometric structure to itself or another such set. Specifically, a geometric transformation is a function whose domain and range are sets of points. Most often the domain and range of a geometric transformation are both R² or both R³. Learn more>>>
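
For a concrete case in R², here is a minimal sketch of two familiar transformations, rotation about the origin and translation, written in plain Python. The function names are chosen for this example. Both are bijections: each can be undone by applying the opposite angle or offset.

```python
import math


def rotate(point, angle_degrees):
    """Rotate a 2D point counter-clockwise about the origin."""
    theta = math.radians(angle_degrees)
    x, y = point
    return (
        x * math.cos(theta) - y * math.sin(theta),
        x * math.sin(theta) + y * math.cos(theta),
    )


def translate(point, dx, dy):
    """Shift a 2D point by (dx, dy)."""
    x, y = point
    return (x + dx, y + dy)


p = (1.0, 0.0)
q = rotate(p, 90)          # approximately (0, 1)
r = translate(q, 2.0, 3.0)  # approximately (2, 4)

# Undo both steps to recover the original point (up to rounding).
back = rotate(translate(r, -2.0, -3.0), -90)
print(q, r, back)
```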

94. How can you do object detection in a video?

Ans: Object detection in a video can be done using TensorFlow, OpenCV, Pillow, and h5py. We will use a trained YOLOv3 computer vision model to perform the detection and recognition tasks. Learn more>>>

95. What are SIFT and SURF?

Ans: The scale-invariant feature transform (SIFT) is an algorithm used to detect and describe local features in digital images. It locates certain key points and then furnishes them with quantitative information (so-called descriptors) which can for example be used for object recognition. The descriptors are supposed to be invariant against various transformations which might make images look different although they represent the same object(s). Learn more>>>

96. What are the languages supported by Computer vision?

Ans: OpenCV and other image processing and computer vision libraries care about computational efficiency. Based on this, you could argue that the best programming language for image processing would be the most efficient and fastest language. That is, from the most popular languages your answer would be C++. Learn more>>>

97. What is SIFT? How to implement SIFT in Computer Vision?

Ans: The scale-invariant feature transform (SIFT) is an algorithm used to detect and describe local features in digital images. It locates certain key points and then furnishes them with quantitative information (so-called descriptors) which can for example be used for object recognition. The descriptors are supposed to be invariant against various transformations which might make images look different although they represent the same object(s). Learn more>>>

98. What is SURF? How to implement SURF in Computer Vision?

Ans: SURF (Speeded-Up Robust Features) is a sped-up version of SIFT. In SIFT, Lowe approximated the Laplacian of Gaussian (LoG) with a Difference of Gaussians to build the scale space. SURF goes a little further and approximates LoG with a box filter. One big advantage of this approximation is that convolution with a box filter can be easily calculated with the help of integral images, and it can be done in parallel for different scales. Learn more>>>
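
To see why integral images make box filters cheap, consider the sketch below in plain Python. It is not SURF itself, only the integral-image trick the answer refers to: once the table is built, the sum over any rectangle needs just four lookups, regardless of the rectangle's size. The helper names are chosen for this example.

```python
def integral_image(image):
    """Build a summed-area table with one extra row and column of zeros."""
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for r in range(height):
        row_sum = 0
        for c in range(width):
            row_sum += image[r][c]
            # Sum of everything above and to the left, inclusive.
            table[r + 1][c + 1] = table[r][c + 1] + row_sum
    return table


def box_sum(table, top, left, bottom, right):
    """Sum of pixels in rows top..bottom-1 and columns left..right-1."""
    return (
        table[bottom][right]
        - table[top][right]
        - table[bottom][left]
        + table[top][left]
    )


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
table = integral_image(image)
print(box_sum(table, 0, 0, 3, 3))  # 45 (whole image)
print(box_sum(table, 1, 1, 3, 3))  # 28 (5 + 6 + 8 + 9)
```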

99. What is BRIEF? How to implement BRIEF in Computer Vision?

Ans: BRIEF stands for Binary Robust Independent Elementary Features. It provides a shortcut to find the binary strings directly without finding descriptors. It takes a smoothed image patch and selects a set of (x, y) location pairs in a unique way (explained in the paper). Learn more>>>
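
The core of the idea, comparing intensities at pairs of locations to produce bits, can be sketched in plain Python. This is a simplified illustration and not OpenCV's BRIEF: the smoothing step is omitted, the pairs are drawn uniformly at random (the paper discusses several sampling strategies), and the helper names and toy patch are assumptions.

```python
import random


def make_pairs(patch_size, n_bits, seed=0):
    """Pick n_bits random pairs of (x, y) locations inside the patch."""
    rng = random.Random(seed)

    def point():
        return (rng.randrange(patch_size), rng.randrange(patch_size))

    return [(point(), point()) for _ in range(n_bits)]


def brief_descriptor(patch, pairs):
    """One bit per pair: 1 if the first location is darker than the second."""
    return [
        1 if patch[y1][x1] < patch[y2][x2] else 0
        for (x1, y1), (x2, y2) in pairs
    ]


def hamming_distance(a, b):
    """Number of differing bits; used to compare binary descriptors."""
    return sum(bit_a != bit_b for bit_a, bit_b in zip(a, b))


size = 8
pairs = make_pairs(size, n_bits=32)

# A toy patch and a uniformly brighter copy of it.
patch = [[(x * 7 + y * 13) % 32 for x in range(size)] for y in range(size)]
brighter = [[value + 10 for value in row] for row in patch]

d1 = brief_descriptor(patch, pairs)
d2 = brief_descriptor(brighter, pairs)
print(hamming_distance(d1, d2))  # 0: a uniform brightness shift keeps every comparison
```

Because each bit depends only on which of two pixels is darker, descriptors are compared with a Hamming distance rather than a Euclidean one.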

100. What is ORB? How to implement ORB in Computer Vision?

Ans: The full form of ORB is Oriented FAST and Rotated BRIEF. ORB performs as well as SIFT on the task of feature detection (and is better than SURF) while being almost two orders of magnitude faster. ORB builds on the well-known FAST keypoint detector and the BRIEF descriptor. Both of these techniques are attractive because of their good performance and low cost. ORB’s main contributions are as follows: Learn more>>>

101. What do you mean by Haar cascades in Computer Vision? What are the different Haar Cascades available in Computer Vision?

Ans: A Haar-like feature considers neighboring rectangular regions at a specific location in a detection window, sums up the pixel intensities in each region and calculates the difference between these sums. This difference is then used to categorize subsections of an image. Learn more>>>
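
A two-rectangle Haar-like feature can be sketched in plain Python as follows. This is a toy illustration, not OpenCV's cascade code: the window is split into a left and a right half (one of several possible rectangle layouts), and the region sums are computed directly rather than with an integral image.

```python
def region_sum(image, top, left, height, width):
    """Sum of pixel intensities inside a rectangle."""
    return sum(
        image[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    )


def two_rectangle_feature(image, top, left, height, width):
    """Right-half sum minus left-half sum of a detection window."""
    half = width // 2
    left_sum = region_sum(image, top, left, height, half)
    right_sum = region_sum(image, top, left + half, height, half)
    return right_sum - left_sum


# A vertical edge: dark on the left, bright on the right.
edge = [[10, 10, 200, 200] for _ in range(4)]
# A flat region with no structure.
flat = [[50, 50, 50, 50] for _ in range(4)]

print(two_rectangle_feature(edge, 0, 0, 4, 4))  # 1520
print(two_rectangle_feature(flat, 0, 0, 4, 4))  # 0
```

A large response marks a window that contains an edge-like pattern, while a flat window gives zero.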

102. How do you implement Image Translation in Computer Vision?

Ans: Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image. It can be applied to a wide range of applications, such as collection style transfer, object transfiguration, season transfer and photo enhancement. Learn more>>>

103. What do you mean by Eigenfaces? Explain in detail.

Ans: The Eigenface method uses principal component analysis (PCA) to linearly project the image space onto a low-dimensional feature space. As correlation methods are computationally expensive and require great amounts of storage, it is natural to pursue dimensionality reduction schemes. Learn more>>>

104. What do you mean by Fisherfaces? Explain in detail.

Ans: The Fisherfaces method learns a class-specific transformation matrix, so it does not capture illumination as obviously as the Eigenfaces method. Discriminant analysis instead finds the facial features that discriminate between persons. It’s important to mention that the performance of Fisherfaces also depends heavily on the input data. Learn more>>>

105. How can you implement Averaging Technique on Image in Computer Vision?

Ans: In the averaging method, it is assumed that you have several images of the same object… each with a different “noise pattern”. This is exactly what can be easily obtained when taking pictures of distant galaxies. The gigantic telescopes just keep looking at the same object. Learn more>>>
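
The averaging method amounts to a per-pixel mean over the stack of images. Below is a minimal sketch in plain Python. The "true" image and the noise offsets are contrived so that the noise cancels exactly; real noise only cancels approximately, and the result improves as more frames are averaged.

```python
def average_images(images):
    """Per-pixel mean of several same-sized grayscale images."""
    count = len(images)
    height, width = len(images[0]), len(images[0][0])
    return [
        [sum(img[r][c] for img in images) / count for c in range(width)]
        for r in range(height)
    ]


# Hypothetical noise-free scene and four noisy exposures of it.
true_image = [[100, 150], [200, 50]]
noise_offsets = [6, -6, 3, -3]  # contrived zero-mean noise
frames = [
    [[pixel + offset for pixel in row] for row in true_image]
    for offset in noise_offsets
]

averaged = average_images(frames)
print(averaged)  # [[100.0, 150.0], [200.0, 50.0]]
```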

106. Explain Gaussian filtering.

Ans: Gaussian filters are ideal to start experimenting with filtering because their design can be controlled by manipulating just one variable: the variance. Gaussian filter function is defined as. Learn more>>>
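
The answer above does not give the formula, so the sketch below assumes the standard two-dimensional Gaussian, whose weights are proportional to exp(-(x² + y²) / (2σ²)). It builds a normalized kernel in plain Python; the kernel size and sigma are example values, and the weights are normalized by their sum rather than by the analytic constant.

```python
import math


def gaussian_kernel(size, sigma):
    """Build a size x size Gaussian kernel whose weights sum to 1."""
    center = size // 2
    kernel = [
        [
            math.exp(-((x - center) ** 2 + (y - center) ** 2) / (2 * sigma ** 2))
            for x in range(size)
        ]
        for y in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


kernel = gaussian_kernel(size=5, sigma=1.0)
for row in kernel:
    print([round(value, 4) for value in row])
```

A larger sigma (the square root of the variance) flattens the kernel and blurs more; a smaller one concentrates the weight on the center pixel.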

107. Explain in detail about Median Filtering?

Ans: Median filtering is a nonlinear method used to remove noise from images. It is widely used as it is very effective at removing noise while preserving edges. It is particularly effective at removing ‘salt and pepper’ type noise. The median filter works by moving through the image pixel by pixel, replacing each value with the median value of neighboring pixels. Learn more>>>
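
The procedure can be sketched in plain Python on a tiny image with one "salt" pixel. This is an illustration, not OpenCV's implementation; the 3x3 window and the shrinking of the window at the borders are assumptions.

```python
from statistics import median


def median_filter(image):
    """Replace each pixel by the median of its 3x3 neighborhood."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Neighbors that fall inside the image, including the pixel itself.
            neighbors = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
            ]
            out[r][c] = median(neighbors)
    return out


# A flat patch corrupted by a single bright "salt" pixel.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
cleaned = median_filter(noisy)
print(cleaned[1][1])  # 10: the outlier is discarded, not averaged in
```

A mean filter would have smeared the 255 into its neighbors; the median ignores it entirely, which is why this filter suits salt-and-pepper noise.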

108. Explain in detail about Bilateral Filtering?

Ans: The bilateral filter is a technique to smooth images while preserving edges. Its formulation is simple: each pixel is replaced by a weighted average of its neighbors. This aspect is important because it makes it easy to acquire intuition about its behavior, to adapt it to application-specific requirements, and to implement it. Learn more>>>
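
To show how the averaging preserves edges, here is a one-dimensional sketch in plain Python. It assumes the usual formulation in which each neighbor's weight is the product of a spatial Gaussian (distance in position) and a range Gaussian (difference in intensity); the parameter names and values are chosen for this example and are not OpenCV's.

```python
import math


def bilateral_filter_1d(signal, radius=2, sigma_space=1.0, sigma_range=10.0):
    """Smooth a 1D signal with weights based on distance and intensity similarity."""
    out = []
    for i, center in enumerate(signal):
        weighted_sum = 0.0
        weight_total = 0.0
        for j in range(max(0, i - radius), min(len(signal), i + radius + 1)):
            # Nearby samples count more...
            spatial = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            # ...but only if their intensity is similar to the center's.
            similarity = math.exp(
                -((signal[j] - center) ** 2) / (2 * sigma_range ** 2)
            )
            weight = spatial * similarity
            weighted_sum += weight * signal[j]
            weight_total += weight
        out.append(weighted_sum / weight_total)
    return out


# Two noisy plateaus separated by a sharp edge.
signal = [10, 12, 9, 11, 100, 102, 99, 101]
smoothed = bilateral_filter_1d(signal)
print([round(value, 2) for value in smoothed])
```

Samples across the edge differ so much in intensity that their weights are nearly zero, so each plateau is smoothed on its own and the step between them stays sharp.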

109. How can we do Thresholding in Computer Vision using OpenCV?

Ans: Thresholding is the assignment of each pixel in an image to either a true or false class based on the pixel’s value, location or both. The result of a thresholding operation is typically a binary image in which each pixel is assigned either a true or false value. Learn more>>>
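
In its simplest form, based on pixel value only, thresholding can be sketched in plain Python as below. This is an illustration rather than OpenCV's function, and the cutoff of 127 is an example value.

```python
def threshold(image, cutoff):
    """Assign each pixel True if it is brighter than the cutoff, else False."""
    return [[pixel > cutoff for pixel in row] for row in image]


image = [
    [12, 200],
    [130, 90],
]
binary = threshold(image, cutoff=127)
print(binary)  # [[False, True], [True, False]]
```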