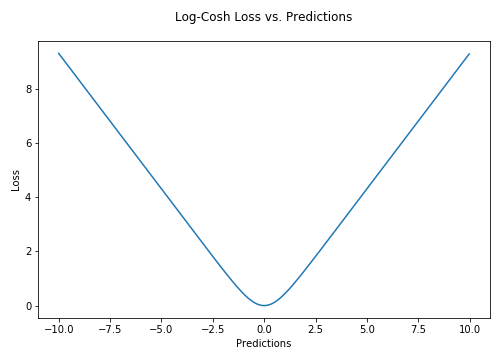

What is the difference between MSE and MAE?

The mean absolute error (MAE) measures how close predictions are to the observed outcomes. It is the average of all the absolute errors, and it is a common measure of forecast error in time series analysis. The mean squared error (MSE) of an estimator is the average of the squared errors, where each error is the difference between the estimated value and the true value.
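As a rough illustration of the two definitions, here is a minimal Python sketch. The function names `mean_absolute_error` and `mean_squared_error` and the sample numbers are made up for this example and do not come from any particular library.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between outcomes and predictions."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between outcomes and predictions."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: the errors are 1, 0, -2 and 4.
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.0, 5.0, 4.0, 3.0]

print(mean_absolute_error(actual, predicted))  # 1.75
print(mean_squared_error(actual, predicted))   # 5.25
```

In this example the single error of 4 contributes 16 of the 21 units of total squared error but only 4 of the 7 units of total absolute error, so MSE penalizes large errors much more heavily than MAE does.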

The MSE is a risk function: it corresponds to the expected value of the squared error loss, also called quadratic loss. It is rarely exactly zero, either because of randomness or because the estimator does not use information that could produce a more accurate estimate. The MSE is a measure of the quality of an estimator. It is always non-negative, and values closer to zero are better. The MSE is the second moment of the error about the origin, so it includes both the variance of the estimator and its bias. For an unbiased estimator, the MSE equals the variance of the estimator.
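The relationship between MSE, variance, and bias can be written out explicitly. The notation here is an assumption made for illustration: $\theta$ is the true value being estimated and $\hat{\theta}$ is the estimator.

```latex
\operatorname{MSE}(\hat{\theta})
  = \mathbb{E}\!\left[(\hat{\theta} - \theta)^2\right]
  = \operatorname{Var}(\hat{\theta}) + \left(\operatorname{Bias}(\hat{\theta})\right)^2,
\qquad
\operatorname{Bias}(\hat{\theta}) = \mathbb{E}[\hat{\theta}] - \theta .
```

When $\mathbb{E}[\hat{\theta}] = \theta$ the bias term vanishes, which is why the MSE of an unbiased estimator reduces to its variance.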