What are the different types of neural networks?

Ans: A neural network is a network of interconnected neurons that work together to accomplish a task. Artificial neural networks are loosely modeled on the human brain, which consists of neurons. In artificial neural networks, units called perceptrons play a role that resembles that of neurons in the human nervous system.

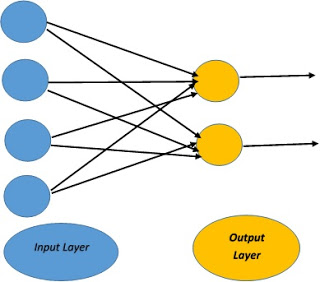

(a) Feed Forward Neural Network:

In a feed forward neural network, data flows in one direction only: from the input nodes, through any hidden nodes, to the output nodes, with no cycles or feedback loops.

This is also known as a front-propagated wave and is usually achieved by using a classifying activation function. This type of neural network can have either a single layer or one or more hidden layers.

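In a feed forward neural network, each node calculates the sum of the products of its inputs and their weights (usually plus a bias term). This value is passed through an activation function and fed forward to the next layer, and ultimately to the output.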
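The forward pass described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the bias term, the choice of a sigmoid activation, and every weight value are assumptions picked for the example.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_forward(inputs, weights, biases):
    """One fully connected layer: each output node takes the sum of
    input * weight products, adds its bias, then applies the activation."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        weighted_sum = sum(x * w for x, w in zip(inputs, node_weights)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs


def feed_forward(inputs, layers):
    """Pass data strictly forward, layer by layer, with no feedback loops."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_forward(activations, weights, biases)
    return activations


# Hypothetical weights: 2 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),
    ([[1.0, -1.0]], [0.2]),
]
print(feed_forward([1.0, 2.0], layers))
```

Because `feed_forward` only ever reads the previous layer's activations, information cannot loop back, which is the defining property of this network type.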

(b) Radial Basis Function Neural Network:

A radial basis function (RBF) depends only on the distance of a point from a center. Such neural networks typically have two layers. In the inner (hidden) layer, the input features are transformed by radial basis functions, each centered at a different point.

The outputs of these hidden units are then combined, usually as a weighted sum, to produce the network's output.

These networks have been applied in power restoration systems to help restore power in the shortest possible time.
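A minimal sketch of an RBF network's forward pass follows. It assumes a Gaussian radial basis function and an output layer that takes a weighted sum of the hidden activations, which is a common design; the centers, width, and weights are hypothetical values chosen for illustration.

```python
import math


def gaussian_rbf(x, center, width):
    """Gaussian radial basis function: depends only on the distance
    between the point x and the center, peaking at 1.0 when they coincide."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / (2.0 * width ** 2))


def rbf_network(x, centers, width, output_weights, bias=0.0):
    """Two-layer RBF network: the hidden layer applies one RBF per center,
    and the output layer takes a weighted sum of those hidden activations."""
    hidden = [gaussian_rbf(x, c, width) for c in centers]
    return sum(w * h for w, h in zip(output_weights, hidden)) + bias


# Hypothetical centers and weights for a 2-D input.
centers = [[0.0, 0.0], [1.0, 1.0]]
output_weights = [1.0, -1.0]
print(rbf_network([0.0, 0.0], centers, width=1.0, output_weights=output_weights))
```

A point equidistant from both centers gets equal hidden activations, so with the weights above its output is exactly the bias; this is the "distance from the center" behavior in action.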

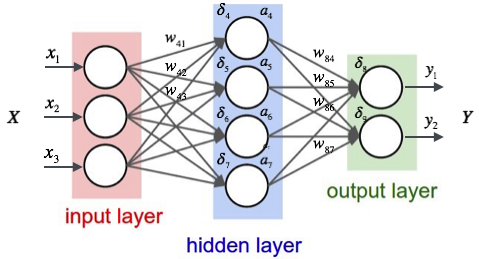

(c) Multilayer Perceptron:

A multilayer perceptron (MLP) has three or more layers: an input layer, one or more hidden layers, and an output layer. It is used to classify data that cannot be separated linearly. It is a fully connected neural network: every node in one layer is connected to every node in the following layer.

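A multilayer perceptron uses nonlinear activation functions; without them, the stacked layers would collapse into a single linear transformation and could not separate nonlinear data.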
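XOR is the classic example of data that cannot be separated linearly, so no single-layer perceptron can compute it, while a small MLP can. The sketch below uses hand-picked, hypothetical weights (not learned ones) and a step function as the nonlinearity for readability; trained MLPs typically use differentiable activations such as sigmoid or ReLU instead.

```python
def step(z: float) -> int:
    """Threshold activation: a nonlinear function that outputs 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def xor_mlp(x1: int, x2: int) -> int:
    """A 2-2-1 multilayer perceptron with hand-picked weights that solves XOR,
    which no single-layer (linear) perceptron can separate."""
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # hidden node 1 behaves like OR
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # hidden node 2 behaves like AND
    return step(1.0 * h_or - 1.0 * h_and - 0.5)  # output: OR and not AND


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_mlp(a, b))
```

The hidden layer remaps the four input points into a space where a single straight line separates the classes, which is exactly what the extra layer buys.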

(d) Convolutional Neural Network:

Convolutional neural networks (CNNs) are similar to feed forward neural networks in that their neurons have learnable weights and biases. CNNs dominate computer vision because of their accuracy in image classification.

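Convolutional layers slide small learnable filters (kernels) across the image, which helps the network retain information about local parts of the image while computing its operations. Image-processing pipelines built around CNNs may also include preprocessing steps such as converting images from the RGB or HSI color space to grayscale.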
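The sliding-window operation at the heart of a convolutional layer can be sketched on a small grayscale image. This is an illustrative sketch only: the image and kernel values are hypothetical, there is no padding or stride option, and real CNNs learn their kernel weights and run on optimized libraries rather than nested Python loops.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (technically
    cross-correlation: the kernel is not flipped). The same small kernel
    slides over every position, so one set of weights detects a local
    pattern anywhere in the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A tiny grayscale image with a vertical edge, and a hypothetical edge-detecting kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))
```

The output responds only in the column where pixel values change from dark to bright, showing how a kernel picks out a local feature regardless of where it sits in the image.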

Its applications include signal and image processing, where it has taken over many computer vision tasks that were previously handled with classical techniques such as those in OpenCV.

(e) Recurrent Neural Network:

Recurrent neural networks (RNNs) feed the output of a layer back into its input in order to predict the layer's next outcome, so feedback loops are possible in this type of network. Recurrent neural networks have been less influential than feed forward neural networks.

Each neuron acts like a memory cell while computing, carrying information forward from one time step to the next. If the network makes a wrong prediction, the error is used, scaled by the learning rate, to make small corrections to the weights. In this way the network gradually learns to give the right prediction during backpropagation.
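The feedback loop can be sketched as a minimal Elman-style RNN with a single scalar hidden state. This covers the forward pass only; the error-correction step (training with backpropagation through time) is omitted, and the weights are hypothetical values for illustration.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Minimal Elman-style RNN with a scalar hidden state.
    At each time step the previous hidden state is fed back in,
    so the state acts as a memory of earlier inputs."""
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # new state depends on input AND old state
        states.append(h)
    return states


# Hypothetical weights; the same weights are reused at every time step.
print(rnn_forward([1.0, 0.0, 0.0], w_x=0.9, w_h=0.5, b=0.0))
```

Even though the second and third inputs are zero, the hidden states stay nonzero and decay gradually: the network still "remembers" the first input through the recurrent weight `w_h`.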

Recurrent neural networks are applied in text-to-speech conversion models.

(f) Modular Neural Network:

Modular neural networks are collections of independent neural networks that each contribute to a single output. Each network has its own unique set of inputs, and the networks do not interact while accomplishing their tasks. The advantage is reduced complexity: a large computational process is split into smaller components. Processing time depends on the number of neurons and how they are involved in computing the results.
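The structure can be sketched with two tiny, hypothetical modules, each reading only its own input set and never communicating with the other. How module outputs are merged varies between designs; simple averaging is used here purely as an assumed combination rule.

```python
def sub_network_a(inputs):
    """Hypothetical expert module: a single linear node over its own inputs."""
    weights = [0.4, 0.6]
    return sum(x * w for x, w in zip(inputs, weights))


def sub_network_b(inputs):
    """Hypothetical second module with a different, independent input set."""
    weights = [1.0, -0.5, 0.25]
    return sum(x * w for x, w in zip(inputs, weights))


def modular_network(inputs_a, inputs_b):
    """Each module sees only its own inputs and runs independently (no
    interaction between modules); an integrator combines their outputs
    into a single result. Averaging is an assumed combination rule."""
    outputs = [sub_network_a(inputs_a), sub_network_b(inputs_b)]
    return sum(outputs) / len(outputs)


print(modular_network([1.0, 2.0], [2.0, 2.0, 4.0]))
```

Because the modules share no state, each could be developed, trained, or run in parallel separately, which is where the reduction in complexity comes from.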

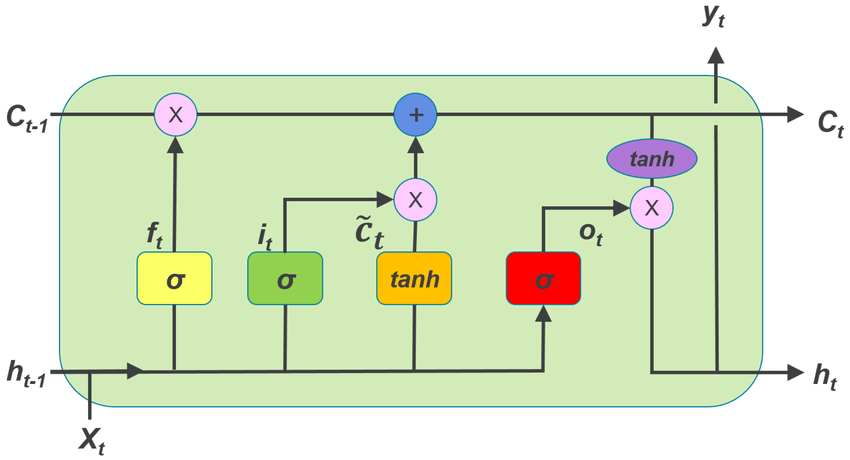

(g) Long Short-Term Memory:

Long Short-Term Memory (LSTM) is a specific type of recurrent neural network (RNN) designed to model sequences of events over time, and their long-range dependencies, more accurately than conventional RNNs. An LSTM's cell state is updated additively rather than being passed through a squashing activation function at every recurrent step, so the stored value is not repeatedly squashed over time and the gradient is far less prone to vanishing during training. LSTM units are often implemented in “blocks” containing several units. These blocks have three or four “gates” that use the logistic (sigmoid) function to control the flow of information.
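One step of a scalar LSTM cell can be sketched as follows. It assumes the common formulation with forget, input, and output gates plus a tanh candidate; variants with peephole connections or a different gate count exist. All parameter values are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function used by the gates; outputs lie in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell with forget, input, and output gates.
    `p` maps each gate name to hypothetical (w_x, w_h, bias) parameters."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate values to write
    c = f * c_prev + i * g           # additive update: c_prev is scaled, not squashed
    h = o * math.tanh(c)
    return h, c


params = {name: (0.5, 0.1, 0.0) for name in ("forget", "input", "output", "candidate")}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.2]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The line `c = f * c_prev + i * g` is the key design choice: the old cell state is only multiplied by a gate and added to, never passed through a squashing function, which is what lets gradients flow across many time steps.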

(h) Sequence-to-Sequence Models:

A sequence-to-sequence model combines two recurrent neural networks: an encoder and a decoder. The encoder processes the input sequence and the decoder produces the output sequence. The encoder and decoder can use either the same or different sets of parameters.
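A toy encoder-decoder pair can be sketched with two scalar RNNs. This is a simplified illustration under several assumptions: the encoder's final hidden state is passed to the decoder as its initial state, the decoder feeds each output back in as its next input, and there is no training, attention, or vocabulary. All parameter values are hypothetical; here the two halves use different parameter sets.

```python
import math


def encode(inputs, w_x, w_h):
    """Encoder RNN: reads the whole input sequence and returns its final
    hidden state as a fixed-size summary (the 'context')."""
    h = 0.0
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
    return h


def decode(context, steps, w_y, w_h, w_out):
    """Decoder RNN: starts from the context and emits one output per step,
    feeding each output back in as the next input."""
    h, y = context, 0.0
    outputs = []
    for _ in range(steps):
        h = math.tanh(w_y * y + w_h * h)
        y = w_out * h
        outputs.append(y)
    return outputs


# Hypothetical scalar parameters; here the encoder and decoder use different sets.
context = encode([0.2, 0.7, -0.1], w_x=0.8, w_h=0.5)
print(decode(context, steps=4, w_y=0.3, w_h=0.9, w_out=1.2))
```

Note that the output length (`steps`) is independent of the input length, which is why this architecture suits tasks like translation where source and target sequences differ in length.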

These models are mainly used in question answering systems, chatbots, and machine translation. For translation, sequence-to-sequence models commonly use multi-layer (stacked) recurrent cells.