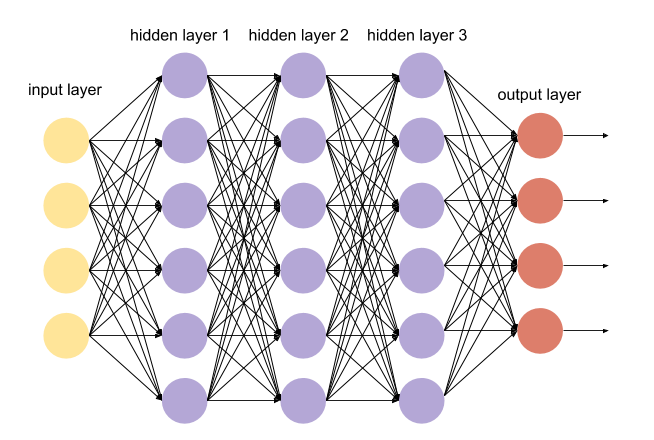

What are the different activation functions that we use in Keras to build a basic neural network?

Ans: Keras provides several activation functions for building a neural network. In a basic network for binary classification, ReLU (rectified linear unit) is typically used in the hidden layers, for example the first two layers of a three-layer model. The sigmoid function is used at the output layer to ensure that the network output is between 0 and 1, so it can be read as a probability. The tanh function can be used at any layer; its output lies between -1 and 1.
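To make the output ranges mentioned above concrete, here is a minimal sketch of the three functions in plain Python. It is an illustration only, not Keras's own implementation; the helper names `relu`, `sigmoid`, and `tanh` are chosen here to match the activation names Keras accepts as strings (`'relu'`, `'sigmoid'`, `'tanh'`).

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: passes positive inputs through, clips negatives to 0."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid: squashes any real input toward the range 0 to 1."""
    # Branch on the sign of x so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Hyperbolic tangent: squashes any real input toward the range -1 to 1."""
    return math.tanh(x)


# Print each activation for a few sample inputs to compare their ranges.
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}")
```

The sigmoid value staying between 0 and 1 is what makes it suitable for the output layer of a binary classifier, while ReLU and tanh are more common choices inside the network.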
