What do you mean by Law of transformation of Skewed Variables?

Different features in a data set may have values in very different ranges. For example, in an employee data set, the salary feature may range from thousands to lakhs, while the age feature stays within roughly 20 to 60. As a result, one column can carry far more weight than another in any method that depends on the magnitude of the values. Data transformation mainly deals with normalizing (also known as scaling) data, handling skewness, and aggregating attributes.

Normalization

Normalization, or scaling, refers to bringing all the columns into the same range.

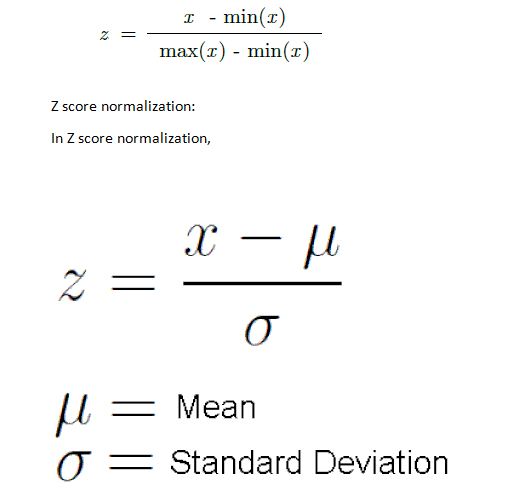

Min-Max

Z score

Min-Max normalization:

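It is a simple way of scaling the values in a column. It linearly rescales the values to a fixed range, usually 0 to 1, by subtracting the column minimum and dividing by the column range (maximum minus minimum).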
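As a rough illustration, min-max scaling is commonly written as (x - min) / (max - min). The sketch below uses only the Python standard library; the function name `min_max_scale` and the salary and age values are hypothetical, and returning zeros for a constant column is just one possible convention.

```python
def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant column: nothing to spread out. Returning zeros is an
        # assumption made for this sketch, not a universal rule.
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


# Hypothetical employee columns on very different scales.
salaries = [20_000, 50_000, 80_000, 200_000]
ages = [20, 30, 40, 60]

scaled_salaries = min_max_scale(salaries)
scaled_ages = min_max_scale(ages)

print(scaled_salaries)
print(scaled_ages)
```

After scaling, both columns lie between 0 and 1, so neither dominates purely because of its units.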

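Min-Max squeezes all values into a fixed range, so a single extreme value compresses the rest of the column into a narrow band. When the data contains outliers that are important and we don't want to lose their impact, we go with Z score normalization, which subtracts the column mean and divides by the standard deviation, so the result has mean 0 and standard deviation 1 without being bounded to a fixed range.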
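A minimal sketch of Z score normalization, assuming the usual definition (x - mean) / standard deviation. The function name `z_score` and the sample values are hypothetical, and the population standard deviation is used here; the sample standard deviation (`statistics.stdev`) is an equally common choice.

```python
import statistics


def z_score(values):
    """Standardize values to mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        # Constant column: return zeros (a convention chosen for this sketch).
        return [0.0 for _ in values]
    return [(x - mean) / std for x in values]


# Hypothetical salary column with one large value.
salaries = [20_000, 50_000, 80_000, 200_000]
z = z_score(salaries)
print(z)
```

The large salary stays clearly separated from the rest instead of being pinned to the upper bound of a fixed range.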

Transformation techniques for skewed data are:

Cube root transformation:

The cube root transformation converts x to x^(1/3). It is a fairly strong transformation with a substantial effect on distribution shape, but it is weaker than the logarithm. It can also be applied to zero and negative values.
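One practical detail in Python: raising a negative number to the power 1/3 yields a complex result, so a sign-preserving form is needed. The sketch below is one way to do that with the standard library; the helper name `cube_root` and the sample values are hypothetical.

```python
import math


def cube_root(x):
    """Real cube root that also works for zero and negative inputs."""
    # Take the root of the magnitude, then restore the original sign.
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


# Hypothetical column containing negative, zero and positive values.
values = [-27, -1, 0, 1, 8, 1000]
transformed = [cube_root(x) for x in values]
print(transformed)
```

The results are floating-point approximations, so compare them with a tolerance rather than with exact equality.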

Square root transformation:

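The square root transformation converts x to x^(1/2). It has a moderate effect on distribution shape and is weaker than the cube root and the logarithm. It can be applied to zero and positive values only, so check the values of the column before applying it.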
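The check on the column values can be made explicit in code. This is a small sketch, assuming that negative values should be rejected rather than silently altered; the function name `sqrt_transform` and the `counts` data are hypothetical.

```python
import math


def sqrt_transform(values):
    """Apply the square root to each value, rejecting negative inputs."""
    if any(x < 0 for x in values):
        raise ValueError("square root transformation needs non-negative values")
    return [math.sqrt(x) for x in values]


# Hypothetical count-like column.
counts = [0, 1, 4, 9, 100]
print(sqrt_transform(counts))  # [0.0, 1.0, 2.0, 3.0, 10.0]
```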

Logarithm transformation:

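The logarithm, x to log base 10 of x, or x to log base e of x (ln x), or x to log base 2 of x, is a strong transformation and can be used to reduce right skewness. It is defined only for positive values, so zero and negative values must be handled before applying it.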
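To see the effect on right skewness, the sketch below compares a simple moment-based skewness measure before and after a base-10 log transformation. The `skewness` helper, the choice of skewness formula (third central moment divided by the 1.5 power of the second), and the `incomes` data are all assumptions made for illustration.

```python
import math
import statistics


def skewness(values):
    """Moment-based skewness: m3 / m2**1.5 (population moments)."""
    mean = statistics.fmean(values)
    m2 = statistics.fmean([(x - mean) ** 2 for x in values])
    m3 = statistics.fmean([(x - mean) ** 3 for x in values])
    return m3 / m2 ** 1.5


def log10_transform(values):
    """Apply log base 10, rejecting values where the log is undefined."""
    if any(x <= 0 for x in values):
        raise ValueError("logarithm transformation needs strictly positive values")
    return [math.log10(x) for x in values]


# Hypothetical right-skewed column: most values small, a few very large.
incomes = [10, 20, 30, 40, 50, 100, 1000, 10000]

before = skewness(incomes)
after = skewness(log10_transform(incomes))
print(before, after)
```

On this sample the skewness after the transformation is smaller than before, although it does not drop to zero; how much it shrinks depends on the data.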