What are TensorFlow input pipelines, and where can they be applied?

GPUs and TPUs can radically reduce the time required to execute a single training step. Achieving peak performance requires an efficient input pipeline that delivers data for the next step before the current step has finished. The tf.data API helps to build flexible and efficient input pipelines.

Input Pipeline Structure

A typical TensorFlow training input pipeline can be framed as an ETL process:

Extract: Read data from persistent storage — either local (e.g. HDD or SSD) or remote (e.g. GCS or HDFS).

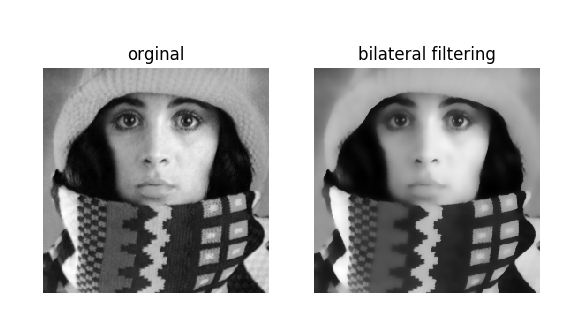

Transform: Use CPU cores to parse and perform preprocessing operations on the data such as image decompression, data augmentation transformations (such as random crop, flips, and color distortions), shuffling, and batching.

Load: Load the transformed data onto the accelerator device(s) (for example, GPU(s) or TPU(s)) that execute the machine learning model.

This pattern effectively utilizes the CPU, while reserving the accelerator for the heavy lifting of training your model. In addition, viewing input pipelines as an ETL process provides structure that facilitates the application of performance optimizations.
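The three phases above can be sketched with tf.data as follows. This is a minimal illustration, not a production pipeline: the synthetic NumPy arrays stand in for data read from storage, and the `preprocess` function, image shape, buffer size, and batch size are all assumptions chosen for the example.

```python
import numpy as np
import tensorflow as tf

# Extract: synthetic in-memory data stands in for files on local or remote storage.
images = np.random.randint(0, 256, size=(64, 32, 32, 3), dtype=np.uint8)
labels = np.random.randint(0, 10, size=(64,), dtype=np.int64)
dataset = tf.data.Dataset.from_tensor_slices((images, labels))


def preprocess(image, label):
    """Transform: scale pixels to [0, 1] and apply a random horizontal flip."""
    image = tf.image.convert_image_dtype(image, tf.float32)
    image = tf.image.random_flip_left_right(image)
    return image, label


dataset = (
    dataset
    .map(preprocess, num_parallel_calls=tf.data.AUTOTUNE)  # parse/augment on CPU
    .shuffle(buffer_size=64)
    .batch(16)
)

# Load: iterating the dataset yields batches that a training step would consume;
# TensorFlow handles moving them to the accelerator when the model runs.
first_images, first_labels = next(iter(dataset))
```

Keeping each phase as a separate, named stage makes it easier to see where a later optimization (parallel reads, parallel map, prefetching) would be applied.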

When using the tf.estimator.Estimator API, the first two phases (Extract and Transform) are captured in the input_fn passed to tf.estimator.Estimator.train.

Pipelining overlaps the preprocessing and model execution of a training step. While the accelerator is performing training step N, the CPU is preparing the data for step N+1. Doing so reduces the step time to the maximum (as opposed to the sum) of the training time and the time it takes to extract and transform the data.
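The max-versus-sum relationship can be checked with a small calculation. The helper `step_time_ms` and the 30 ms and 50 ms figures below are hypothetical values chosen only to illustrate the arithmetic; they are not measurements.

```python
def step_time_ms(prepare_ms, train_ms, pipelined):
    """Return the steady-state time per training step, in milliseconds."""
    if pipelined:
        # Data preparation for step N+1 overlaps with training on step N,
        # so the slower of the two stages sets the pace.
        return max(prepare_ms, train_ms)
    # Without overlap, each step waits for preparation and then trains.
    return prepare_ms + train_ms


# Hypothetical costs: 30 ms to extract and transform, 50 ms to train.
sequential_ms = step_time_ms(30, 50, pipelined=False)
overlapped_ms = step_time_ms(30, 50, pipelined=True)
print(sequential_ms, overlapped_ms)
```

With these numbers the accelerator is the bottleneck once pipelining is enabled; if preparation took longer than training, the input pipeline would set the step time instead.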

Without pipelining, the CPU and the GPU/TPU sit idle much of the time.

With pipelining, idle time diminishes significantly.

The tf.data API provides a software pipelining mechanism through the tf.data.Dataset.prefetch transformation, which can be used to decouple the time data is produced from the time it is consumed. In particular, the transformation uses a background thread and an internal buffer to prefetch elements from the input dataset ahead of the time they are requested. Thus, to achieve the pipelining effect described above, you can add prefetch(1) as the final transformation to your dataset pipeline (or prefetch(n) if a single training step consumes n elements).
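A minimal sketch of adding prefetch as the final transformation is shown below. The range dataset and the doubling `map` are placeholders for real extraction and preprocessing, and the batch size of 10 is an arbitrary choice for the example.

```python
import tensorflow as tf

dataset = tf.data.Dataset.range(100)      # placeholder for the Extract phase
dataset = dataset.map(lambda x: x * 2)    # placeholder for preprocessing
dataset = dataset.batch(10)               # one training step consumes one batch
dataset = dataset.prefetch(1)             # prepare one batch ahead of the consumer

# Each element is now a whole batch, so prefetch(1) buffers one batch.
batches = [batch.numpy().tolist() for batch in dataset]
```

Because prefetch comes after batch here, a buffer size of 1 already covers the data for a full training step. Prefetching changes when elements are produced, not their values or order.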