When do we apply early stopping, and how is it helpful?

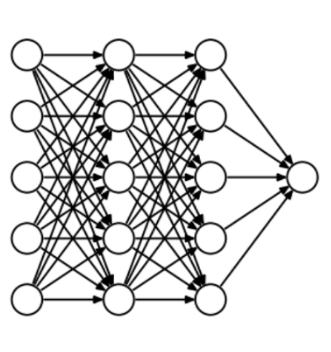

Ans: Early stopping is a regularization technique. During training, the model is evaluated on a held-out validation dataset after each epoch. If the model's performance on the validation dataset starts to degrade, training is stopped. The model at the point where training stopped is then used, and it tends to have good generalization performance.
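A minimal sketch of this procedure in Python. `train_one_epoch` and `validation_loss` are hypothetical callbacks standing in for a real training framework, and the validation curve in the usage lines is made up. This version uses the simplest possible trigger: stop at the first epoch whose validation loss is worse than the previous epoch's.

```python
from typing import Callable, List, Tuple


def train_until_validation_degrades(
    train_one_epoch: Callable[[int], None],
    validation_loss: Callable[[], float],
    max_epochs: int,
) -> Tuple[int, List[float]]:
    """Train epoch by epoch; stop as soon as validation loss gets worse.

    Returns the index of the last epoch run and the validation losses seen.
    train_one_epoch and validation_loss are hypothetical hooks (assumptions).
    """
    history: List[float] = []
    for epoch in range(max_epochs):
        train_one_epoch(epoch)      # one pass over the training data
        loss = validation_loss()    # evaluate on the held-out validation set
        history.append(loss)
        if len(history) > 1 and loss > history[-2]:
            # Validation performance degraded relative to the previous epoch.
            return epoch, history
    return len(history) - 1, history


# Usage with a made-up validation curve (no real training happens here).
curve = iter([0.90, 0.70, 0.60, 0.55, 0.58, 0.62, 0.70])
stopped_at, seen = train_until_validation_degrades(
    train_one_epoch=lambda epoch: None,
    validation_loss=lambda: next(curve),
    max_epochs=7,
)
print(stopped_at, seen)  # 4 [0.9, 0.7, 0.6, 0.55, 0.58]
```

Here training halts at epoch 4, when the loss rises from 0.55 to 0.58, and the weights worth keeping are those from epoch 3. Real validation curves are noisy, so stopping on the first uptick is often too aggressive.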

This process is called “early stopping” and is one of the oldest and most widely used forms of neural network regularization.

If regularization methods like weight decay, which modify the loss function to encourage less complex models, are considered explicit regularization, then early stopping may be thought of as a form of implicit regularization: its effect is similar to using a smaller network with less capacity. Early stopping requires the network to be under-constrained, meaning that it has more capacity than the problem requires.
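The link to weight decay can be made concrete in a simple case. For linear least squares trained by plain gradient descent from zero initial weights, with a learning rate small enough that the learning rate times the largest eigenvalue of the loss Hessian stays below 1, the weight norm never decreases from one step to the next, so stopping earlier yields smaller weights, much as an L2 penalty would. The sketch below uses a made-up three-point dataset and an arbitrary learning rate; it only illustrates this linear case and is not a statement about deep networks in general.

```python
import math
from typing import List


def gd_least_squares_norms(
    X: List[List[float]], y: List[float], lr: float, steps: int
) -> List[float]:
    """Gradient descent on mean squared error, starting from w = 0.

    Returns the Euclidean norm of the weight vector after each step.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    norms: List[float] = []
    for _ in range(steps):
        # Residuals r_i = x_i . w - y_i
        residuals = [sum(X[i][j] * w[j] for j in range(d)) - y[i] for i in range(n)]
        # Gradient of (1/n) * sum_i r_i^2 is (2/n) * sum_i r_i * x_i
        grad = [2.0 / n * sum(residuals[i] * X[i][j] for i in range(n)) for j in range(d)]
        w = [w[j] - lr * grad[j] for j in range(d)]
        norms.append(math.sqrt(sum(wj * wj for wj in w)))
    return norms


# Illustrative data (an assumption, not from the text).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 2.0]
norms = gd_least_squares_norms(X, y, lr=0.1, steps=200)
print(norms[4], norms[49], norms[-1])  # norm after 5, 50 and 200 steps
```

The printed norms increase toward the norm of the unregularized least-squares solution, which for this data is sqrt(29)/3 ≈ 1.80; halting after fewer steps leaves the weights closer to zero.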

When training the network, a larger number of epochs is used than would normally be required, giving the network plenty of opportunity to fit and then to begin overfitting the training dataset.

There are three elements to using early stopping.

- Monitoring model performance.

- A trigger to stop training.

- The choice of model to use.
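The three elements can be combined in a small helper. The sketch below is hypothetical (`EarlyStopping`, `patience` and `min_delta` are illustrative names, though many frameworks expose similar settings): it monitors a validation loss, triggers a stop after `patience` epochs without improvement, and keeps a copy of the best model state seen so far.

```python
import copy
from typing import Any, Optional


class EarlyStopping:
    """Hypothetical helper: tracks a validation loss (lower is better).

    patience: epochs to wait after the last improvement before stopping.
    min_delta: minimum decrease in loss that counts as an improvement.
    """

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch: Optional[int] = None
        self.best_state: Any = None
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float, model_state: Any) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        # 1. Monitoring: compare against the best loss seen so far.
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            # 3. Model choice: keep a copy of the best state seen so far.
            self.best_state = copy.deepcopy(model_state)
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        # 2. Trigger: stop after `patience` epochs with no improvement.
        return self.epochs_without_improvement >= self.patience


# Usage with a made-up validation curve; the dict stands in for model weights.
losses = [0.90, 0.70, 0.60, 0.55, 0.58, 0.57, 0.62, 0.70]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(losses):
    if stopper.step(epoch, loss, {"epoch": epoch}):
        break
print(epoch, stopper.best_epoch, stopper.best_state)  # 6 3 {'epoch': 3}
```

With `patience=3`, the small drop to 0.57 at epoch 5 does not reset the counter because it is still worse than the best loss of 0.55, so training stops at epoch 6 and the epoch-3 state is the one to restore.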

Early stopping rules provide guidance on how many iterations can be run before the learner begins to overfit. Such rules have been used in many different machine learning methods, with varying amounts of theoretical foundation.
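As one concrete example of such a rule, Lutz Prechelt (1998) defines the generalization loss at epoch t as GL(t) = 100 * (E_va(t) / E_opt(t) - 1), where E_va(t) is the validation error at epoch t and E_opt(t) is the lowest validation error observed up to epoch t, and stops training once GL(t) exceeds a threshold α. The sketch below implements only that criterion; the loss curve and α values are illustrative assumptions.

```python
from typing import List, Optional


def generalization_loss_stop(val_losses: List[float], alpha: float) -> Optional[int]:
    """Return the first epoch t with GL(t) > alpha, or None if it never happens.

    GL(t) = 100 * (E_va(t) / E_opt(t) - 1), with E_opt(t) the lowest validation
    loss seen up to and including epoch t (losses assumed to be positive).
    """
    best = float("inf")
    for t, loss in enumerate(val_losses):
        best = min(best, loss)
        gl = 100.0 * (loss / best - 1.0)  # percent above the best loss so far
        if gl > alpha:
            return t
    return None


curve = [0.90, 0.70, 0.60, 0.55, 0.58, 0.57, 0.62, 0.70]
print(generalization_loss_stop(curve, alpha=5.0), generalization_loss_stop(curve, alpha=10.0))  # 4 6
```

A smaller α stops sooner; with α = 30 the rule never fires on this curve, since the worst later loss is only about 27% above the best.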