Why Are GPUs Better Suited for Deep Learning?

Artificial Intelligence is taking its first steps toward reshaping the world by accomplishing tasks once thought impossible. In this era, a detailed understanding of the hardware resources involved can strongly affect how well a project performs.

The GPU (Graphics Processing Unit) is at the heart of deep learning, a subfield of Artificial Intelligence. GPUs have improved year after year and are now remarkably capable, and in the past few years they have drawn even more attention because of deep learning.

Deep learning models spend a large amount of time in training, and even powerful CPUs were not efficient enough to handle so many computations at once. This is where GPUs outperform CPUs, thanks to their parallelism.

What is the GPU?

A GPU (Graphics Processing Unit) is a specialized processor with dedicated memory that conventionally performs the floating-point operations required for rendering graphics.

It is a single-chip processor used for extensive graphical and mathematical computations, which frees up CPU cycles for other jobs.

Furthermore, GPUs process complex geometry, vectors, light sources or illumination, textures, shapes, and so on. Now that we have a basic idea of what a GPU is, let us understand why it is so widely used for deep learning.

The key differences between a CPU and a GPU are:

- CPUs have a few complex cores that run processes serially with a few threads at a time, while GPUs have a large number of simple cores that enable parallel computing through thousands of threads running at once.

- In deep learning, the host code runs on the CPU, while the CUDA code runs on the GPU.

- The CPU offloads compute-heavy tasks such as 3D graphics rendering and vector computations to the GPU.

- While the CPU can carry out long, complex, optimized tasks, transferring a large amount of data to the GPU can be slow.

- GPUs are optimized for bandwidth. CPUs are optimized for latency (memory access time).
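The data-transfer point in the list above can be made concrete with a back-of-envelope model: sending work to the GPU only pays off when the copy time plus the GPU compute time is shorter than the CPU compute time. The sketch below is a rough illustration, not a benchmark. The function names `gpu_total_seconds` and `offload_pays_off` and every number in it are hypothetical.

```python
# Back-of-envelope model: is it worth sending a job to the GPU?
# All names and numbers here are hypothetical, chosen only for illustration.

def gpu_total_seconds(data_gb, link_gb_per_s, gpu_compute_s):
    """Time to copy the data to the GPU plus time to compute on it."""
    transfer_s = data_gb / link_gb_per_s
    return transfer_s + gpu_compute_s

def offload_pays_off(cpu_compute_s, data_gb, link_gb_per_s, gpu_compute_s):
    """True when copy + GPU compute beats computing on the CPU alone."""
    return gpu_total_seconds(data_gb, link_gb_per_s, gpu_compute_s) < cpu_compute_s

# Large job: 2 GB over a 10 GB/s link (0.2 s copy), 1 s on the GPU vs 10 s on the CPU.
large_job = offload_pays_off(cpu_compute_s=10.0, data_gb=2.0,
                             link_gb_per_s=10.0, gpu_compute_s=1.0)

# Tiny job: same 0.2 s copy, but the CPU would finish in 0.1 s anyway.
tiny_job = offload_pays_off(cpu_compute_s=0.1, data_gb=2.0,
                            link_gb_per_s=10.0, gpu_compute_s=0.01)

print(large_job, tiny_job)  # True False
```

With these assumed figures, the large job benefits from the GPU while the tiny one is dominated by the copy.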

Why choose GPUs for Deep Learning?

One of the most admired characteristics of a GPU is its ability to run computations in parallel. This is where the concept of parallel computing comes in. A CPU generally completes its tasks sequentially. A CPU is divided into cores, and each core takes up one task at a time. Suppose a CPU has 2 cores. Then two different tasks can run on these two cores at once, which achieves multitasking. Within each core, however, the work still executes serially.

GPUs have a large number of cores, which allows many parallel processes to be computed at once. Additionally, deep learning computations handle huge amounts of data, which makes a GPU's high memory bandwidth a good fit.

The following are a few deciding parameters that help determine whether to use a CPU or a GPU to train a model:

Memory Bandwidth

Training on large datasets makes the CPU consume a lot of system memory. A standalone GPU, on the other hand, comes with its own dedicated VRAM, so the CPU's memory remains available for other tasks.

Desktop CPUs offer roughly 50 GB/s of memory bandwidth, while high-end GPUs reach roughly 750 GB/s; entry-level GPUs offer considerably less than that.
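To get a feel for what that gap means, here is a small arithmetic sketch using the 50 GB/s and 750 GB/s figures quoted above. The 3 GB buffer size is a hypothetical value, and the model ignores caches and access patterns, so treat it as an idealized estimate of how long one full pass over the data would take.

```python
# Idealized time to stream a buffer once from memory at a given bandwidth.
# Bandwidth figures come from the text; the buffer size is hypothetical.

CPU_BANDWIDTH_GB_S = 50.0
GPU_BANDWIDTH_GB_S = 750.0

def stream_seconds(size_gb, bandwidth_gb_per_s):
    """Seconds needed to read size_gb once at the given bandwidth."""
    return size_gb / bandwidth_gb_per_s

size_gb = 3.0  # hypothetical working-set size
cpu_s = stream_seconds(size_gb, CPU_BANDWIDTH_GB_S)
gpu_s = stream_seconds(size_gb, GPU_BANDWIDTH_GB_S)
ratio = GPU_BANDWIDTH_GB_S / CPU_BANDWIDTH_GB_S

print(f"CPU: {cpu_s * 1000:.0f} ms, GPU: {gpu_s * 1000:.0f} ms, ratio: {ratio:.0f}x")
# CPU: 60 ms, GPU: 4 ms, ratio: 15x
```

Under these assumptions, one pass over the data is about 15 times faster on the GPU, purely because of memory bandwidth.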

Dataset Size

Training a deep learning model requires a huge dataset, and with it a massive number of memory-intensive operations. A GPU is an excellent choice for processing that data efficiently. The larger the computation, the greater the advantage of a GPU over a CPU.

Optimization

Optimizing tasks is far easier on a CPU. Although CPU cores are few, each one is far more powerful than an individual GPU core.

Each CPU core can execute a different instruction, whereas GPU cores, which are usually organized in blocks of 32, execute the same instruction in parallel at any given time.
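The contrast can be sketched as a toy model in plain Python. This is only a conceptual illustration of "one instruction, many values" versus "one instruction per core"; it does not run on a GPU, and the names `WARP_SIZE`, `run_gpu_block`, and `run_cpu_cores` are made up for this example.

```python
# Toy model of the execution styles described above (not real GPU code).

WARP_SIZE = 32  # size of a GPU core block, as stated in the text

def run_gpu_block(instruction, lane_values):
    """Apply ONE instruction to all 32 values of a block in the same step."""
    if len(lane_values) != WARP_SIZE:
        raise ValueError("a block must hold exactly WARP_SIZE values")
    return [instruction(v) for v in lane_values]

def run_cpu_cores(instructions, core_values):
    """Each CPU core applies its OWN instruction to its own value."""
    return [op(v) for op, v in zip(instructions, core_values)]

lanes = list(range(WARP_SIZE))
doubled = run_gpu_block(lambda x: x * 2, lanes)            # same op, 32 values
mixed = run_cpu_cores([abs, lambda x: x + 1], [-3, 10])    # different op per core

print(doubled[:4], mixed)  # [0, 2, 4, 6] [3, 11]
```

The GPU-style function has no way to give different lanes different instructions, which is why workloads with many independent, identical operations suit it best.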

Parallelizing dense neural networks takes considerable effort. Hence, complex optimization methods are more difficult to implement on a GPU than on a CPU.

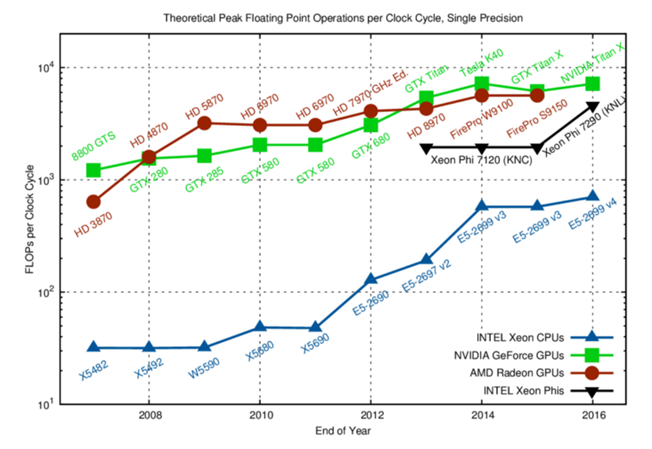

The chart above shows that GPUs (red/green) can theoretically perform 10–15x the operations of CPUs (blue). This speedup largely holds in practice too.

As a general rule, GPUs are best for fast machine learning because, at its heart, model training consists of simple matrix math, which can be sped up greatly when the computations are carried out in parallel.
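Matrix math parallelizes well because every cell of a matrix product depends only on one row and one column of the inputs, so the cells can be computed in any order, or all at once. The pure-Python sketch below demonstrates that independence by filling the cells in a shuffled order and getting the same result. The helper names `cell` and `matmul_in_order` are hypothetical, and the code runs serially; a GPU would assign each cell to its own thread.

```python
import random

def cell(a, b, i, j):
    """One output cell: dot product of row i of a with column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_in_order(a, b, order):
    """Fill the product cell by cell, visiting cells in the given order."""
    rows, cols = len(a), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    for i, j in order:
        out[i][j] = cell(a, b, i, j)  # needs no other output cell
    return out

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]

cells = [(i, j) for i in range(2) for j in range(2)]
shuffled = cells[:]
random.Random(0).shuffle(shuffled)  # arbitrary visiting order

in_order = matmul_in_order(a, b, cells)
any_order = matmul_in_order(a, b, shuffled)

print(in_order)               # [[19, 22], [43, 50]]
print(in_order == any_order)  # True
```

Because no cell waits on another, the work can be spread across as many cores as there are cells.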

CPUs are good at handling single, more complex calculations serially, while GPUs are better at handling many simpler calculations in parallel.

The scope for GPUs in the coming years is huge as new breakthroughs arrive in deep learning, machine learning, and HPC. GPU acceleration will remain useful to developers and students entering this field, especially as GPU prices become more affordable.